By Bella Ratmelia, Librarian, Data Services

Have you heard of ChatGPT? This cutting-edge AI chatbot developed by OpenAI has gone viral since its launch in late November 2022 due to its uncanny ability to mimic human responses and understand natural language. It is a very clever tool that can seemingly do everything: ChatGPT can write rap lyrics, plan your class, compose a poem, immerse you in a text-based RPG, or come up with a cooking recipe for you. What interests me the most, however, is its ability to code in multiple programming languages.

According to the chatbot itself (yes, I asked the bot this question), ChatGPT is a variant of the GPT-3 (Generative Pre-trained Transformer 3) model, one of OpenAI’s many large language models (LLMs). It has been trained on a massive amount of text data gathered from the web, such as Wikipedia, news articles, blogs, forums, books, websites, and social media (Twitter, Facebook, Instagram, etc.). Thanks to this huge amount of training data, it can generate text on numerous topics in a variety of languages and styles.

There are many examples of its capabilities out there, but nothing beats trying it yourself. So, I gave it a few coding-related prompts to see how it would respond.

Prompt #1: Explaining a term and comparing with Google search

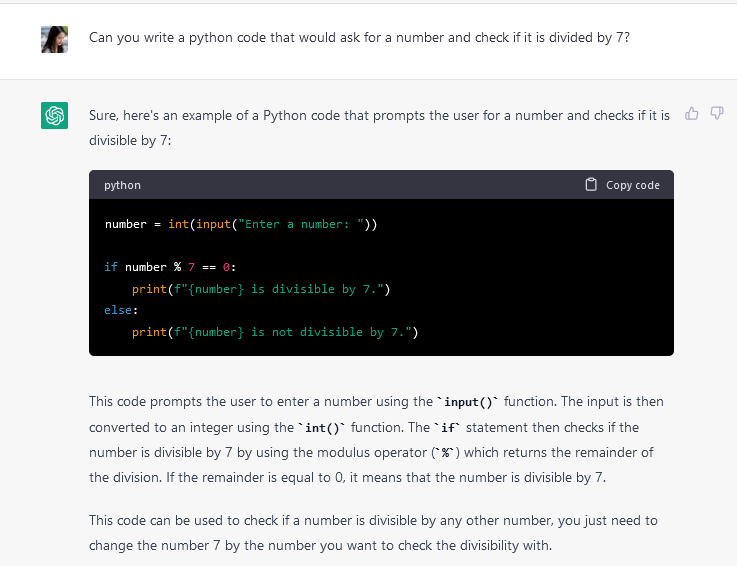

For the first prompt, I started off by asking it to write a simple Python program that asks for a number and checks whether it is divisible by 7.
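For readers who want to try the task themselves, here is a minimal sketch of the kind of program this prompt asks for. It is an illustration only, not the exact code from the screenshot, and the function names `is_divisible_by_7`, `describe`, and `main` are made up for this example.

```python
def is_divisible_by_7(number: int) -> bool:
    """Return True when `number` leaves no remainder after division by 7."""
    return number % 7 == 0


def describe(text: str) -> str:
    """Turn the raw text a user typed into a readable message."""
    try:
        number = int(text)
    except ValueError:
        # int() raises ValueError for input such as "abc" or "3.5".
        return f"'{text}' is not a whole number."
    if is_divisible_by_7(number):
        return f"{number} is divisible by 7."
    return f"{number} is not divisible by 7."


def main() -> None:
    # input() always returns a string, so describe() handles the conversion.
    print(describe(input("Enter a number: ")))
```

Calling `main()` runs the program interactively; the check itself is just the remainder operator `%`.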

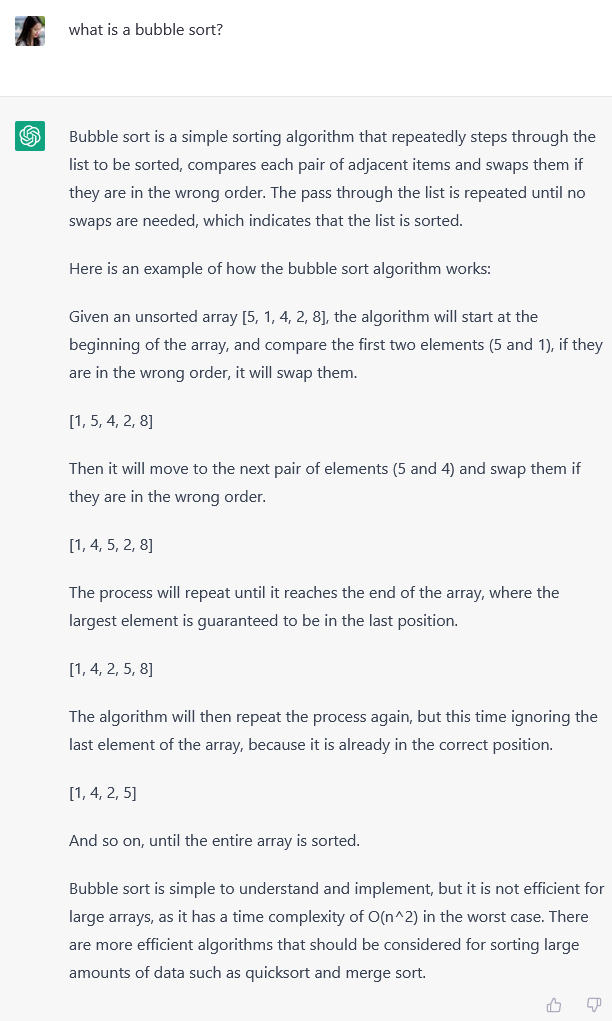

So far so good! After showing the code snippet, ChatGPT also explained the code line by line. This extra explanation looked correct as well. Next, I asked it to explain a basic sorting algorithm, bubble sort.
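As a reminder of what bubble sort does: it repeatedly walks through a list and swaps neighbouring items that are in the wrong order, until a full pass needs no swaps. The sketch below is one common way to write it in Python; it is an illustration, not ChatGPT's actual response, and the name `bubble_sort` is chosen just for this example.

```python
def bubble_sort(values):
    """Return a new list with the items of `values` in ascending order."""
    items = list(values)  # work on a copy so the caller's list is untouched
    # After each pass the largest remaining item has "bubbled" to position `end`.
    for end in range(len(items) - 1, 0, -1):
        swapped = False
        for i in range(end):
            if items[i] > items[i + 1]:
                # Neighbours are out of order, so swap them.
                items[i], items[i + 1] = items[i + 1], items[i]
                swapped = True
        if not swapped:
            # A pass without swaps means the list is already sorted.
            break
    return items


print(bubble_sort([5, 2, 9, 1]))
```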

Just for comparison’s sake, I asked Google the same question:

Comparing what Google gave me to ChatGPT’s response, if I were a beginner programmer or someone who had just started learning how to code, I think I would find ChatGPT’s response easier to understand (perhaps due to its human-like responses), and I like that it gives me a clear example. It is also much more convenient, as I won’t have to click and browse through numerous websites. Its accuracy, at least for this specific concept, is spot on. Accuracy is something that beginners may not be able to judge for themselves, however, so it is still important to double-check with trusted sources.

Prompt #2: Debugging and solving errors

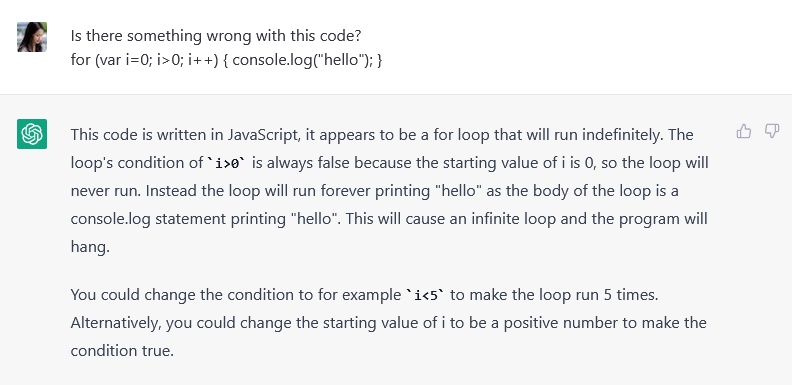

Next, I gave it a piece of JavaScript code that would run into an infinite loop, which is a type of logic error in programming. This type of error is typically harder for a beginner to spot than a syntax error.

Perhaps not surprisingly, it was able to spot the error (and recognized that the code was written in JavaScript). ChatGPT also pointed out precisely where the code should be fixed and how.
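The code in the prompt was JavaScript, but the same class of bug is easy to reproduce in any language. Here is a minimal Python sketch of one common cause, a loop counter that is never updated. The function names are hypothetical, and the `max_steps` guard is an addition made only so the buggy version can be run without hanging.

```python
def run_buggy_loop(limit: int, max_steps: int = 10_000) -> bool:
    """Return True if the loop ended on its own, False if the guard stopped it."""
    i = 0
    steps = 0
    while i < limit:
        steps += 1
        if steps >= max_steps:
            return False  # guard tripped: without it this loop would never end
        # Logic error: `i` is never incremented, so `i < limit` stays True.
    return True


def run_fixed_loop(limit: int) -> int:
    """Same loop with the counter updated on every iteration."""
    i = 0
    while i < limit:
        i += 1  # the fix: move the counter towards the exit condition
    return i


print(run_buggy_loop(5))  # the guard has to stop this one
print(run_fixed_loop(5))  # this one terminates normally
```

Nothing here is a syntax error, which is why an interpreter will not flag it and a beginner may overlook it.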

Readers may argue that my prompt was too leading: I asked ChatGPT “is there something wrong with this code?”, and the word “wrong” may have influenced the result by making it obvious to the chatbot that I wanted it to detect a bug.

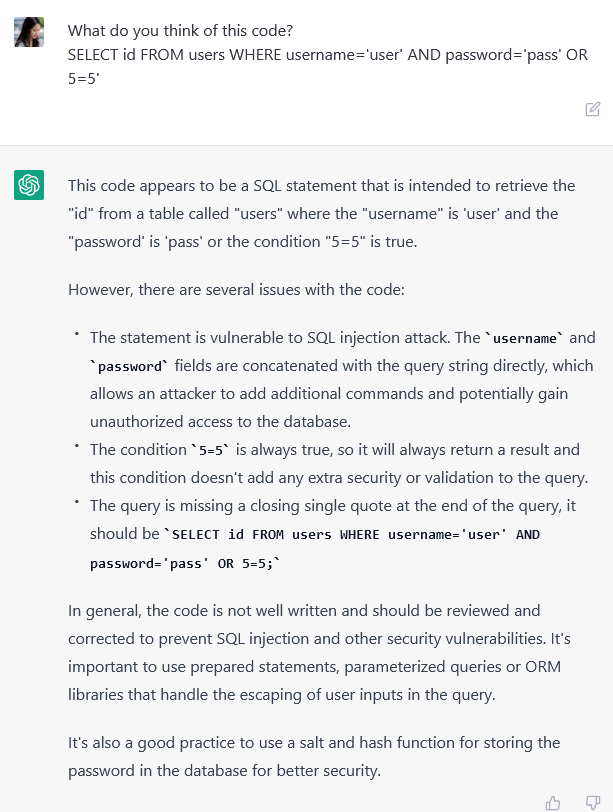

Out of curiosity, I tried again with a more neutral prompt, this time with a dangerous SQL statement.

Again, ChatGPT was able to detect that the SQL query was unsafe and identified all the issues in the statement. It suggested some ways to fix the statement to make it more secure. I then asked ChatGPT a follow-up question, requesting a code example that would fix the statement.

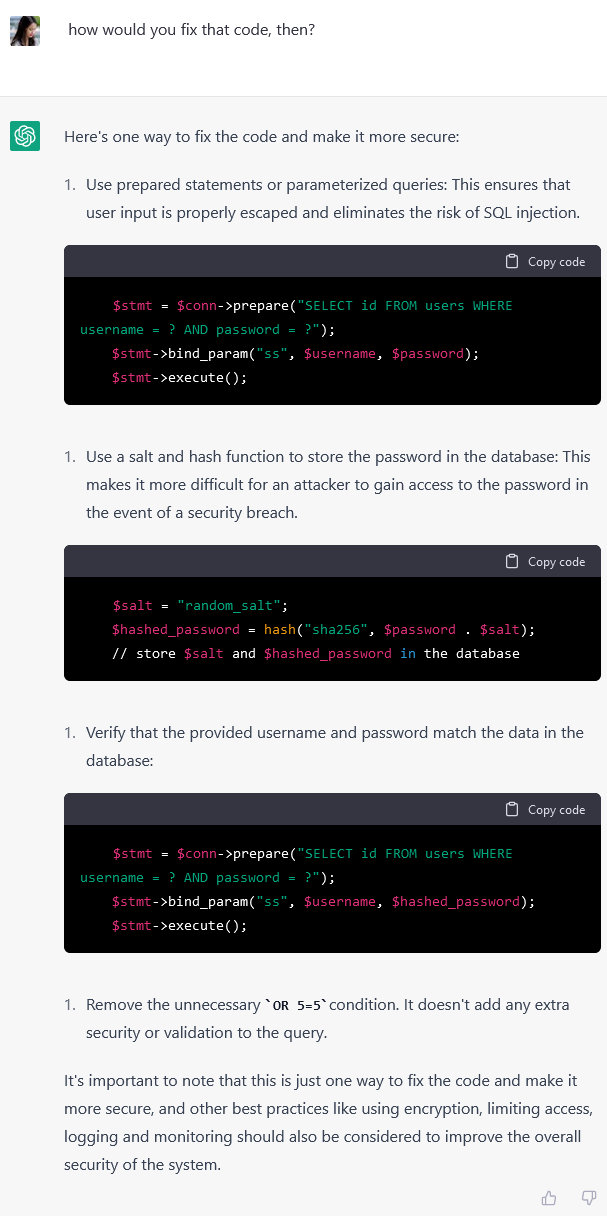

ChatGPT then proceeded to give me a code example in PHP that I think adequately explains the concept of prepared statements as well as salting and hashing. However, a few required steps were left out of the example, such as creating the database connection and closing it. I found out later that if you specify the environment, e.g. “Please show an example on how to fix this in PHP”, the code examples given are more complete.

From this example, we can see that the results we get from ChatGPT depend on our prompts.
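For readers unfamiliar with these terms, the ideas behind ChatGPT's PHP answer (prepared statements, salting, and hashing, plus the connection handling it left out) can be sketched in Python with only the standard library. This is an illustration under assumptions, not a translation of ChatGPT's code: the `users` table, its columns, the function names, and the iteration count are all invented for the example.

```python
import hashlib
import hmac
import os
import sqlite3


def hash_password(password: str, salt: bytes) -> bytes:
    # Salted, deliberately slow hash; 100_000 iterations is an illustrative choice.
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def create_user(conn: sqlite3.Connection, username: str, password: str) -> None:
    salt = os.urandom(16)  # a fresh random salt per user
    # The `?` placeholders keep user input out of the SQL text itself.
    conn.execute(
        "INSERT INTO users (username, salt, password_hash) VALUES (?, ?, ?)",
        (username, salt, hash_password(password, salt)),
    )
    conn.commit()


def check_login(conn: sqlite3.Connection, username: str, password: str) -> bool:
    row = conn.execute(
        "SELECT salt, password_hash FROM users WHERE username = ?", (username,)
    ).fetchone()
    if row is None:
        return False
    salt, stored_hash = row
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(stored_hash, hash_password(password, salt))


def demo() -> tuple:
    conn = sqlite3.connect(":memory:")  # create the connection (hypothetical in-memory DB)
    try:
        conn.execute(
            "CREATE TABLE users (username TEXT PRIMARY KEY, salt BLOB, password_hash BLOB)"
        )
        create_user(conn, "alice", "correct horse")
        return (
            check_login(conn, "alice", "correct horse"),
            check_login(conn, "alice", "wrong password"),
            check_login(conn, "' OR '1'='1", "anything"),  # classic injection attempt
        )
    finally:
        conn.close()  # always close the connection


print(demo())
```

Because the query text and the user-supplied values travel separately, the injection attempt in the last call is treated as an ordinary (non-existent) username rather than as SQL.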

Prompt #3: Dealing with APIs and Packages

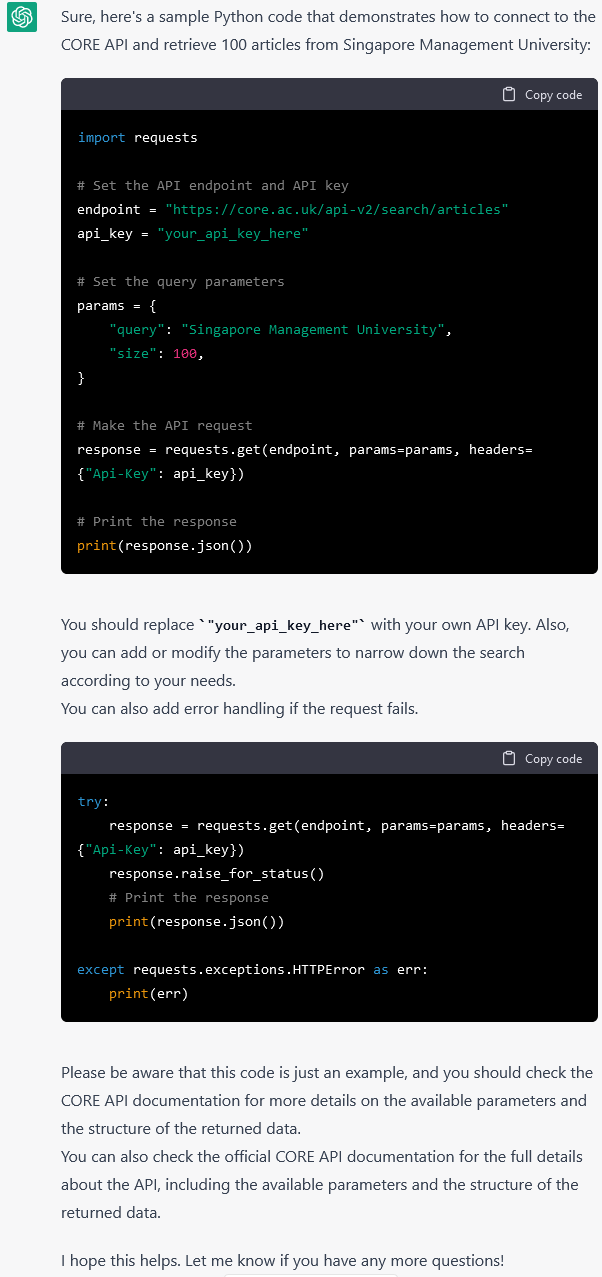

A common task in coding for a research project is the use of various APIs and packages. Some of these packages can be rather obscure. To see how well ChatGPT deals with things like this, I gave it a prompt to retrieve some data from the CORE API.

For readers who are not familiar with it, CORE is the world’s largest aggregator of open access research papers from repositories and journals. It is a not-for-profit service dedicated to the open access mission, and it has an API that is freely available to use. Since SMU is part of this network, the contents of InK are searchable and retrievable via the CORE API.

At first glance, it seemed to recognize the CORE API. However, upon further inspection, I found some inaccuracies in the example code it provided. For instance, the API endpoint was outdated, as the CORE API is currently on version 3. The query parameters were also incorrect; according to the documentation, the CORE API uses the parameter name "limit" instead of "size".

This example shows that ChatGPT does not deeply understand how to use this specific API. It seems that the chatbot knows how to call an API correctly in Python, but it doesn't know which endpoint and parameters to use. To be fair, ChatGPT did include a disclaimer at the bottom suggesting that I check the documentation for full details.
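To make the two corrections concrete, here is a rough sketch of how a version 3 search request with the `limit` parameter might be prepared in Python. Only the version number and the `limit` parameter come from the discussion above; the exact endpoint path, the `q` parameter, and the bearer-token header are assumptions on my part and should be checked against the CORE documentation. The function name is hypothetical, and the sketch only builds the request without sending it.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumed v3 search endpoint; verify the exact path in the CORE API documentation.
CORE_SEARCH_URL = "https://api.core.ac.uk/v3/search/works"


def build_core_search_request(query: str, api_key: str, limit: int = 10) -> Request:
    """Prepare (but do not send) a search request for the CORE API."""
    # `limit` caps the number of results; the older `size` name is not used here.
    params = urlencode({"q": query, "limit": limit})
    return Request(
        f"{CORE_SEARCH_URL}?{params}",
        # Assumed authentication scheme: an API key sent as a bearer token.
        headers={"Authorization": f"Bearer {api_key}"},
    )


request = build_core_search_request("open access", api_key="YOUR_API_KEY", limit=5)
print(request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` would send it; that step is left out here so the sketch runs without network access or a real API key.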

It’s worth noting that ChatGPT was able to give a better answer when I modified my prompt to use more popular APIs such as the Twitter and Reddit APIs. Perhaps this is because more popular APIs have more documentation and examples that can be ingested by ChatGPT.

Next, I was curious to see how it would handle packages. Popular packages such as pandas, numpy, seaborn, tidyverse, etc. are easily recognized by ChatGPT, and it was able to provide coding examples for them perfectly. To my surprise, it also provided an example for a more niche package called Elsapy, a Python library for interacting with the Elsevier API, which includes the Scopus database. But considering that Elsapy was released in 2017 and is mentioned in many library guides (here’s SMU’s), perhaps ChatGPT has ingested some data about this package.

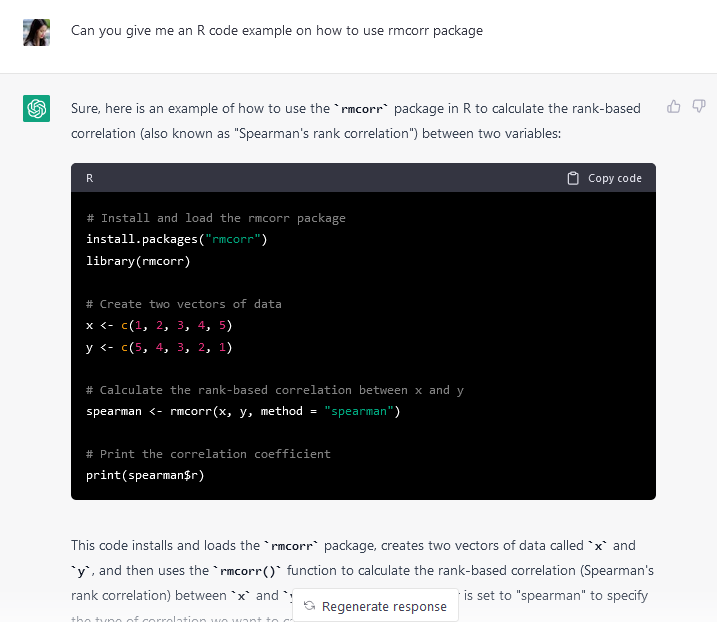

However, ChatGPT stumbled when it came to more recent packages. In this example, I prompted it to give me a code example using the rmcorr package, which was released in December 2022. ChatGPT seemed to confuse it with the corr function instead.

What’s the Verdict?

Based on these prompt experiments, there are clearly pros and cons to using ChatGPT for coding. On the pro side, ChatGPT is helpful for certain tasks, such as providing basic coding suggestions, debugging common errors, and proposing possible fixes for those errors. The code examples also seem to follow common coding conventions, as seen in the way the variables are named.

The explanation included after each code example can also be used as a starting point for the code documentation, which would save developers time as they won’t have to write the documentation from scratch. Additionally, the explanations would be especially useful for beginners.

On the con side, ChatGPT is less familiar with languages and technologies that are not commonly used. According to ChatGPT, its knowledge cutoff is 2021, which means its training data includes information only up to December 2021. Therefore, it is not a reliable aid when coding with niche or recent packages and technologies. Another thing to consider is that ChatGPT is also currently banned on Stack Overflow, a Q&A website for professional and enthusiast programmers. This ban was enacted because “the average rate of getting correct answers from ChatGPT is too low, the posting of answers created by ChatGPT is substantially harmful to the site and to users who are asking and looking for correct answers.”

Overall, in its current version, ChatGPT can be used like any other productivity tool: it can help you automate the more mundane or basic parts of your code, but do not rely on it for the more advanced parts of your coding project. Coders ultimately need to rely on their own knowledge to ensure that their code is correct, complete, and efficient.