By Aaron Tay, Lead, Data Services

The scientific literature is growing exponentially and it is getting harder to keep up. While missing a relevant paper or two isn’t usually too critical for traditional narrative reviews, it is a different story if you are doing a systematic review or meta-analysis (e.g. Cochrane review or Campbell review) or any type of review that requires you to be as thorough and comprehensive as possible.

In such reviews, authors typically do a first-level screening of thousands, if not tens of thousands, of papers, reviewing each title and abstract to find studies that fit their inclusion criteria.

Only articles that pass this first level of screening (typically a much smaller set) will have their full text downloaded, and the reviewer will then do a second level of screening before considering the article eligible for inclusion in the review.

First level screening is extremely time consuming

Screening thousands of papers takes a lot of effort. For example, even a “small” systematic review that requires screening, say, 3,000 articles will take 25 hours at a conservative rate of 2 titles/abstracts per minute! (Also consider that in many systematic reviews, two screeners screen independently to check for consistency.)

Given that typically far fewer than 1% of the papers screened are eventually included in the review, this means going through a high volume of papers just to find one relevant paper.
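The arithmetic behind that estimate can be sketched in a few lines of Python. The function name `screening_hours` and its parameters are made up for this illustration; the figures are the ones used in the example above.

```python
def screening_hours(n_records: int, records_per_minute: float, n_screeners: int = 1) -> float:
    """Total person-hours needed to screen n_records titles/abstracts."""
    minutes_per_screener = n_records / records_per_minute
    return minutes_per_screener * n_screeners / 60


# 3,000 records at 2 titles/abstracts per minute, one screener
print(screening_hours(3000, 2))      # 25.0

# The same set screened independently by two screeners
print(screening_hours(3000, 2, 2))   # 50.0
```

With two independent screeners the total effort doubles to 50 person-hours, even though the elapsed time per screener stays at 25 hours.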

Can the latest machine learning techniques help with this issue?

This is where ASReview, the free, open-source and award-winning screening tool developed by a team of librarians, researchers and machine learning experts at Utrecht University, comes in.

ASReview is actually a Python package, but it comes with an easy-to-use web interface for screening, so you do not need to know how to use Python beyond installing the package. See installation instructions here.

Note that although ASReview is usually run on your local computer, it can also be set up to run as a web service, so that users can do screening in their smartphone browsers.

How does ASReview work?

ASReview employs a machine learning technique known as active learning to help with screening.

You begin by uploading a set of papers into ASReview in the appropriate format – either RIS or CSV with appropriate columns named for title and abstract.
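As a rough illustration of the CSV option, the sketch below writes a two-column file using only Python's standard library. The column names `title` and `abstract` are an assumption based on the description above, and the two records are invented placeholders; check the ASReview documentation for the exact column names it recognises.

```python
import csv
import io

# Invented placeholder records, for illustration only
records = [
    {"title": "Insomnia therapy in adults", "abstract": "A randomised trial of ..."},
    {"title": "Knee surgery recovery", "abstract": "A cohort study of ..."},
]


def to_csv(rows):
    """Serialise records to CSV text with a title and an abstract column."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["title", "abstract"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


csv_text = to_csv(records)
print(csv_text)
```

Saving `csv_text` to a file with a `.csv` extension gives you something you can try uploading; reference managers can usually export RIS directly, which avoids this step.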

Setting up and training ASReview for active learning

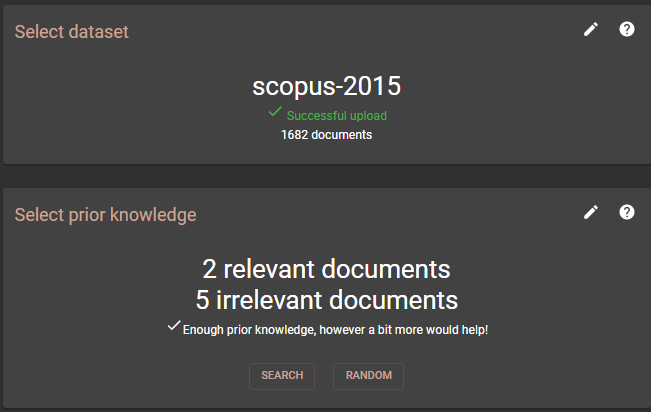

So how does this work? First, you search for and select a few papers that are relevant and a few that are not relevant, to start the initial training of the classifier.

Once you have selected enough papers (typically 2 relevant and 5 irrelevant ones), ASReview will prompt you to move on to the next part of the setup process.

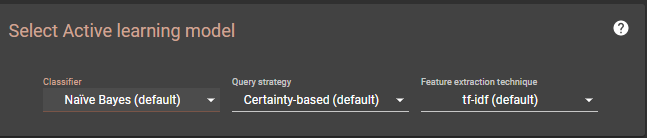

In this phase you can set up various machine learning options.

Multiple machine learning algorithms, such as Naïve Bayes, Random Forest, Support Vector Machine and Logistic Regression, are available for use in the classifier, but if you are unsure what to choose, go with the default Naïve Bayes algorithm. Similarly, the default feature extraction technique is TF-IDF, but you can use the more advanced but slower Doc2Vec method if you install the additional Python gensim package.
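To give a feel for what TF-IDF feature extraction does, here is a minimal pure-Python sketch of one common textbook variant (term frequency multiplied by the log of inverse document frequency). It is not ASReview's own implementation, whose weighting and tokenisation may differ, and the three short texts are invented.

```python
import math
from collections import Counter


def tfidf(docs):
    """Return one {term: weight} dict per document."""
    tokenised = [doc.lower().split() for doc in docs]
    n_docs = len(tokenised)
    # Document frequency: in how many documents does each term appear?
    df = Counter(term for tokens in tokenised for term in set(tokens))
    vectors = []
    for tokens in tokenised:
        counts = Counter(tokens)
        vectors.append({
            term: (count / len(tokens)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return vectors


docs = [
    "insomnia therapy study",
    "knee surgery study",
    "insomnia drug study",
]
weights = tfidf(docs)
print(weights[0])
```

In the first text, "study" appears in every document and so gets a weight of zero, while "therapy", which appears only once in the whole set, gets the highest weight. This is how the classifier is steered towards the words that distinguish one paper from another.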

Click Finish, and wait a few minutes for the classifier to be trained. Once it is done, you can click on “start reviewing” to begin the screening process.

The process of Active learning begins

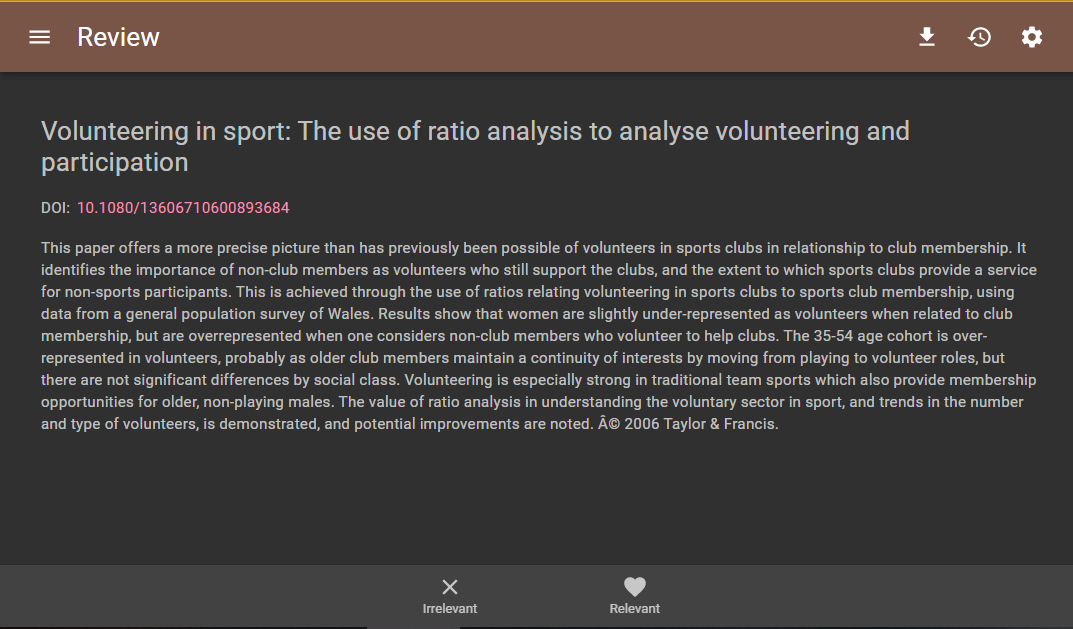

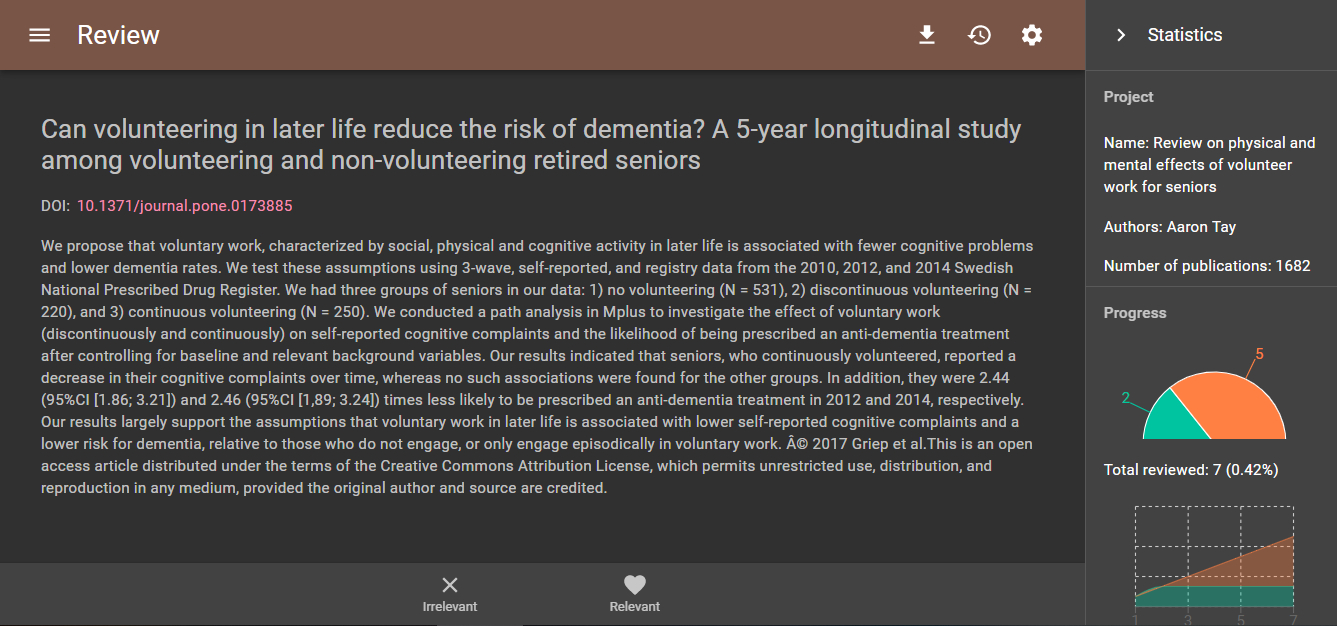

After you have properly set up ASReview, active learning begins. Instead of randomly selecting a paper for you to screen, by default, ASReview will select a paper it thinks is most likely to be relevant based on the classifier you just created, and you will be asked to screen the paper.

Given that your initial classifier was trained on only a few examples, it may get it right or wrong. In either case, the label you give the paper (“relevant” or “not relevant”) will be used to further train the classifier.

This improved classifier will then select yet another paper it thinks is most likely to be relevant for you to screen, and once you have done that, the process repeats.

Notice that while ASReview suggests what to screen next, it never makes the decision on whether a paper is relevant or not; that is entirely in the hands of the human reviewer.
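The loop described above can be sketched in plain Python. This is a toy illustration, not ASReview's code: it uses a hand-rolled Naïve Bayes scorer on raw word counts, the names `train_nb`, `active_learning` and `oracle` are hypothetical, the six "papers" are invented, and the seed set is assumed to contain at least one relevant and one irrelevant paper. The `oracle` stands in for the human reviewer, who makes every labelling decision.

```python
import math
from collections import Counter


def train_nb(labelled):
    """Train on (text, is_relevant) pairs; return a log-odds scoring function."""
    word_counts = {True: Counter(), False: Counter()}
    doc_counts = Counter()
    for text, label in labelled:
        doc_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts[True]) | set(word_counts[False])
    # Laplace-smoothed denominators for each class
    totals = {c: sum(word_counts[c].values()) + len(vocab) for c in (True, False)}

    def log_odds(text):
        score = math.log(doc_counts[True] / doc_counts[False])
        for word in text.lower().split():
            if word in vocab:
                score += math.log((word_counts[True][word] + 1) / totals[True])
                score -= math.log((word_counts[False][word] + 1) / totals[False])
        return score

    return log_odds


def active_learning(pool, seed_labels, oracle, budget):
    """Repeatedly show the reviewer the paper scored most likely relevant."""
    labels = dict(seed_labels)
    order = []
    for _ in range(budget):
        unlabelled = [i for i in pool if i not in labels]
        if not unlabelled:
            break
        score = train_nb([(pool[i], labels[i]) for i in labels])  # retrain
        nxt = max(unlabelled, key=lambda i: score(pool[i]))       # pick next paper
        labels[nxt] = oracle(nxt)                                 # the human decides
        order.append(nxt)
    return order, labels


pool = {
    1: "cognitive behavioural therapy for insomnia randomised trial",
    2: "surgical treatment of knee injury",
    3: "insomnia therapy outcomes in adults trial",
    4: "knee surgery recovery times",
    5: "sleep hygiene and insomnia in older adults",
    6: "injury rates in football players",
}
truth = {1: True, 2: False, 3: True, 4: False, 5: True, 6: False}
seed = {1: True, 2: False}

order, labels = active_learning(pool, seed, truth.get, budget=2)
print(order)  # [3, 5]
```

Starting from one relevant and one irrelevant paper, the toy classifier presents papers 3 and 5 first, which are the two remaining relevant ones. Note how the label for paper 3 feeds back into the second round: words like "in" and "adults" only start counting towards relevance after the reviewer has labelled it.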

How good is ASReview?

As you go through the process and label more and more papers, ASReview will get better at selecting relevant papers for screening. Eventually it will prioritise mostly relevant papers to be screened first.

But how good does it get? The answer varies depending on factors including the data used for screening and, to a much lesser extent, the algorithm used.

A simulation study on 8 data sets suggests that in some cases ASReview can be so good at prioritising papers that you may need to screen as little as 5% of the total papers to cover 95% of the relevant papers, and this was independent of which algorithm you chose.

Even for data sets that were trickier, ASReview often needed you to screen just 40% of the total papers to reach that same benchmark of covering 95% of all relevant results.

To illustrate what this means, say you started with a set of 10,000 papers, of which 100 were actually relevant. Using ASReview in the way I described earlier, you would almost always be safe to stop screening after 40% * 10,000 = 4,000 papers to ensure you got 95% of the relevant papers, or 95 papers.

This is a 60% time savings!
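The same calculation, written out as a small Python sketch so you can plug in your own numbers. The function name `screening_savings` and its parameters are made up for this illustration.

```python
def screening_savings(n_total, n_relevant, fraction_screened, recall):
    """Summarise the effort saved by stopping early at a given recall."""
    n_screened = round(n_total * fraction_screened)
    n_skipped = n_total - n_screened
    return {
        "screened": n_screened,
        "relevant_found": round(n_relevant * recall),
        "skipped": n_skipped,
        "fraction_saved": n_skipped / n_total,
    }


result = screening_savings(n_total=10_000, n_relevant=100,
                           fraction_screened=0.40, recall=0.95)
print(result)
```

For the example above this gives 4,000 papers screened, 95 relevant papers found, and 6,000 papers (60%) never screened. In the best cases reported by the simulation study, where 5% of the papers was enough, the same function gives 500 screened and 9,500 skipped.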

But you might argue: I want to find 100% of the relevant papers, and the only way to be sure is to screen everything, which defeats the purpose of ASReview.

The problem with this is that, even if you do not mind the time spent screening, when the number of papers you need to screen becomes large, humans will inevitably make errors as well, in the range of 5-10%.

Ending screening in ASReview

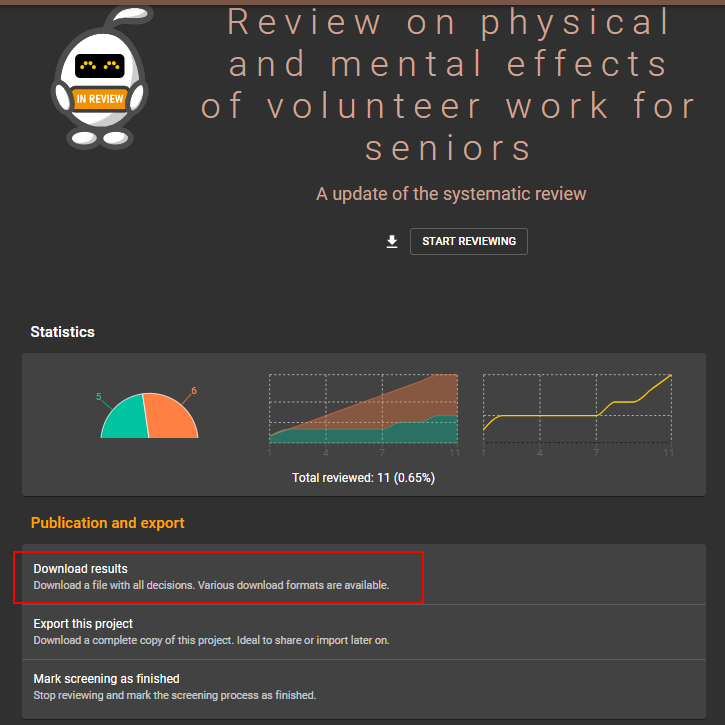

Once you have decided to stop screening, you can export ASReview's results for the project as a CSV file, with a column showing the papers you have judged to be relevant as well as an ordering of the remaining papers by how likely they are to be relevant according to its latest classifier.

Conclusion

ASReview is a powerful piece of software and has additional functions, e.g., for simulation, and you can extend its capabilities further by installing new Python packages and extensions.

For more help, refer to the documentation or contact your Research Librarian for assistance.