By Aaron Tay, Lead, Data Services

Starting from this issue of ResearchRadar, Aaron will keep you updated on some of the newest and hottest research tools of interest in a regular feature, The bleeding edge.

Elicit.org - using GPT-3 for literature review

You may have read about OpenAI’s language model GPT-3, which took the world by storm in 2020. One of the largest language models at the time of its creation (175 billion parameters), built using deep learning, it achieved state-of-the-art results on various machine learning test suites. People were amazed by GPT-3’s general ability to do code completion (e.g., OpenAI’s Codex model, which is the basis for GitHub Copilot), write stories, carry out conversations and more, given only a few prompts or none at all.

Late last year, OpenAI made GPT-3 available as an API open to all without a waitlist, so if you are curious, you can try it out. One month later, in December 2021, they announced functionality allowing you to fine-tune the model with your own training data.

Elicit.org is one of the first tools in the world to take advantage of this functionality, by training GPT-3 on Semantic Scholar metadata (title and abstract).

On the surface, Elicit.org looks like a run-of-the-mill academic search engine that helps you find relevant papers, but looks can be deceiving.

First, enter your question or research statement into Elicit. It not only finds papers that may have the answer but also “reads” the abstract of each and generates a one-sentence summary that answers your question. For full details on how Elicit works, in terms of ranking models, fine-tuning and more, refer to "How Elicit works".

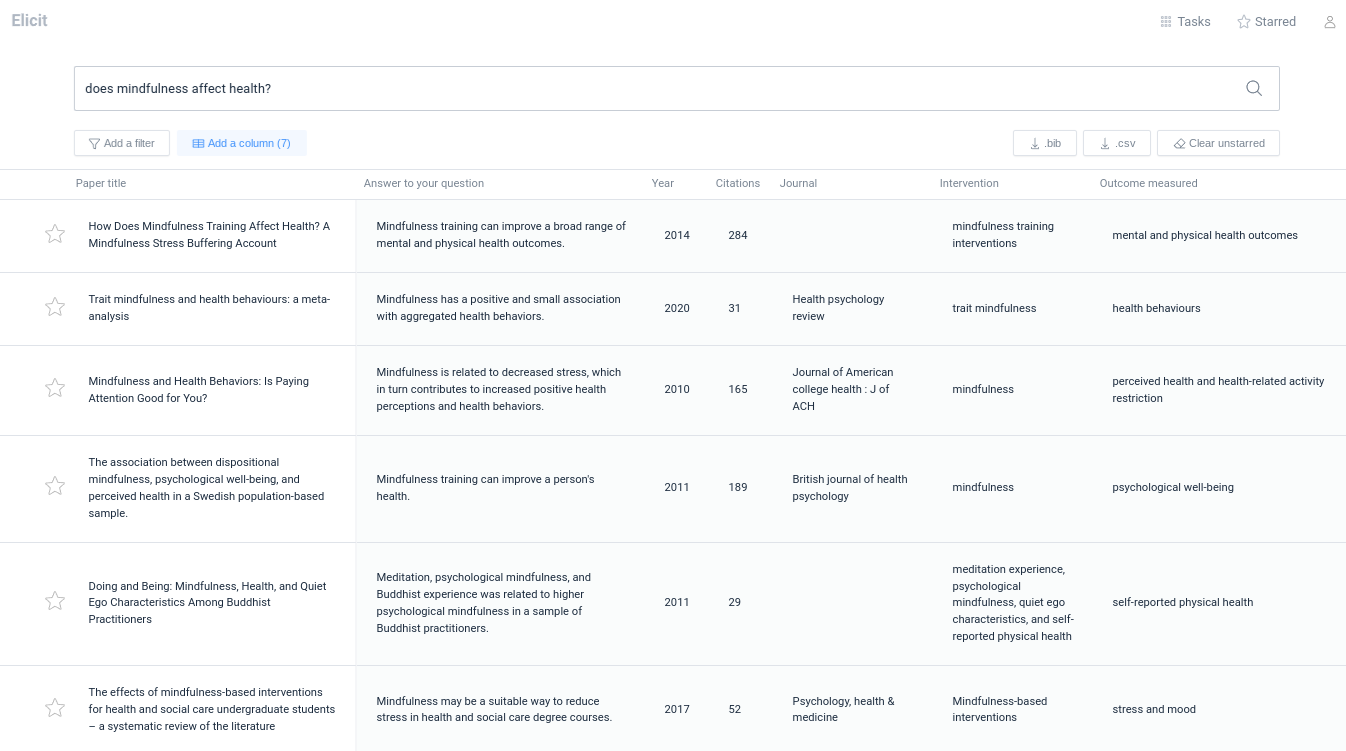

In this toy example, I enter “Does Mindfulness affect health”. As you can see, Elicit not only gives me papers it thinks are relevant but also summarises each abstract with a sentence that should, in theory, answer my question.

You can narrow down your search further by clicking on the “Star” icon of each result that you find relevant, and Elicit will find other similar papers. (In my example, I did not specify exactly what ‘health’ means, so this method can be used to narrow it down.) You can also click on the “Filters” icon to filter by year range, type of paper (Randomised Control Trial, Review, Systematic Review, Meta-analysis) and keywords in the abstract.

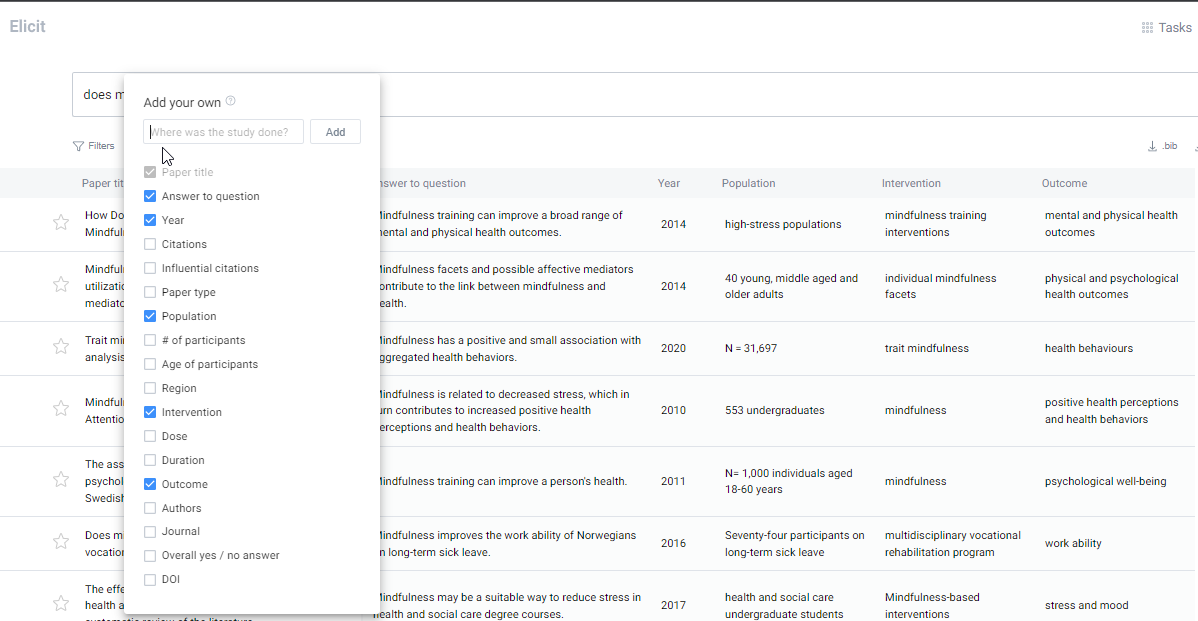

More importantly, Elicit allows you to add other columns of information. By default, it suggests columns not just for paper-related metadata such as:

- Year of publication

- Total Citations

- Type of paper (Randomised Control Trial, Review, Systematic Review, Meta-analysis)

- Author

- Journal

But also for characteristics of the paper itself, such as:

- Population

- Outcome

- Intervention

- Region

- Dose

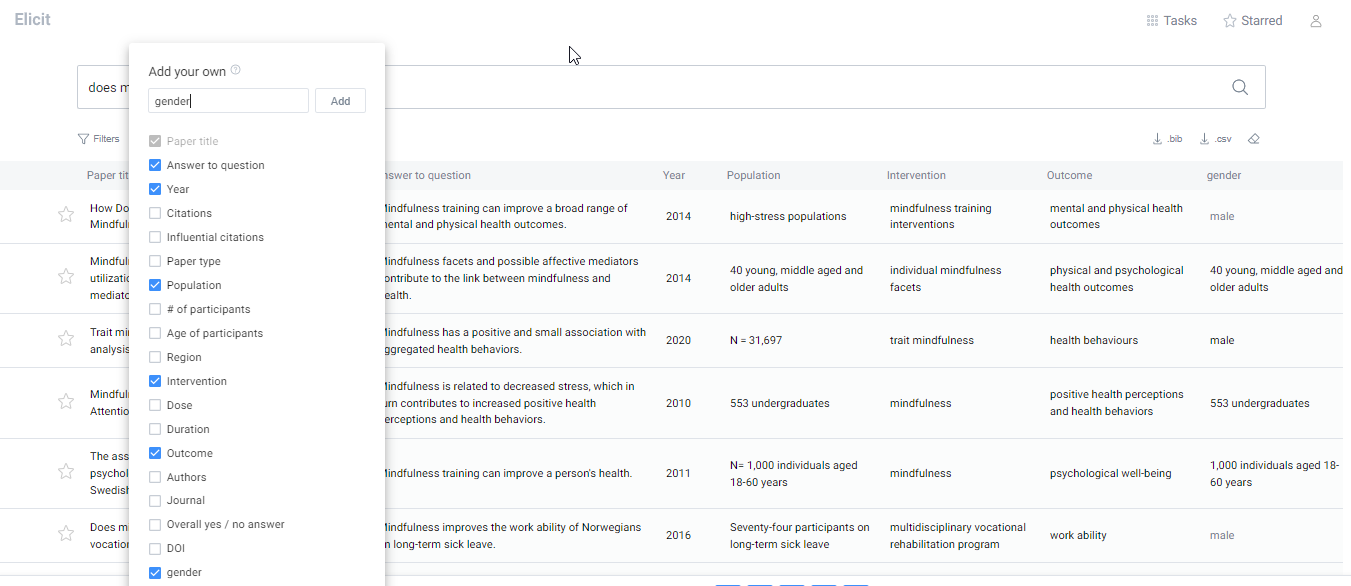

Still not impressed? You can even ask Elicit to extract custom columns of data!

In my example, I tried asking Elicit to extract the gender of the participants in a separate column.

The results are not perfect, but it did manage to correctly extract the gender of participants in 3 of the 5 cases. No doubt more fine-tuning is needed here.

You can, of course, download this table in CSV or .bib format once you are happy with the result.

Librarian’s Take: Elicit is a type of search engine that is sometimes called a Q&A (question and answer) engine. Instead of just giving you documents that are highly relevant, it tries to extract the answer from the document and give it to you directly.

I have tried many Q&A academic search engines (e.g., those based on the CORD-19 dataset to answer COVID-19 questions), and this is the first one to impress me as potentially useful. Using GPT-3 to “read” and extract facts from the abstract to create a research matrix of papers is a compelling idea.

This is particularly so if you are engaged in systematic reviews (the ready-made columns for “population”, “intervention” and “outcome” hint at this).

That said, Elicit is in early-stage beta and one must be careful not to fully trust the results. Language models are known to “hallucinate”, or make up answers, but in this case I have seldom seen it happen. The authors of the tool claim to have improved on this issue through a sequence of fine-tuning steps with high-quality datasets, but they “haven’t fully solved the problem yet”.

For example, if you select a column for “Country”, the column values are often correctly left blank because the abstract does not include the country of study (at least not directly). Still, it is important to check against the abstract to be safe.
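If you have exported the table as CSV, part of this check can be done in bulk. The Python sketch below is only an illustration under several assumptions: the column names (`Title`, `Abstract`, `Country`), the sample rows and the function name `rows_needing_review` are all hypothetical and are not taken from Elicit's actual export format. It flags rows where an extracted value does not appear word for word in the abstract.

```python
import csv
import io

# Hypothetical export: these column names and rows are made up for illustration
# and do not reflect Elicit's real CSV layout.
SAMPLE_EXPORT = """Title,Abstract,Country
Paper A,"We surveyed 120 adults in Singapore on mindfulness practice.",Singapore
Paper B,"A randomised trial of mindfulness training in university students.",
Paper C,"Participants were recruited from clinics in Norway.",Sweden
"""


def rows_needing_review(csv_text, column, abstract_column="Abstract"):
    """Return titles whose extracted value is not found verbatim in the abstract."""
    flagged = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        value = (row.get(column) or "").strip()
        if not value:
            continue  # blank cell: nothing was extracted, so nothing to verify
        abstract = (row.get(abstract_column) or "").lower()
        if value.lower() not in abstract:
            flagged.append(row["Title"])
    return flagged


print(rows_needing_review(SAMPLE_EXPORT, "Country"))
```

A flagged row is not necessarily wrong, since the abstract may state the information indirectly, and an unflagged row is not necessarily right. The check only narrows down which abstracts to read first.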

Finally, Elicit currently only has access to titles and abstracts, and the obvious next step is to work with full text. This would, at least in theory, allow you to extract a lot more information, such as the null hypothesis, methods and limitations. Still, this is a highly experimental tool at this stage, with a lot of questions about the appropriateness of using such "AI" assistant tools for research, so use it with caution!

You may also be interested in a detailed roadmap by Elicit as well as this piece on How to use Elicit responsibly by the team.

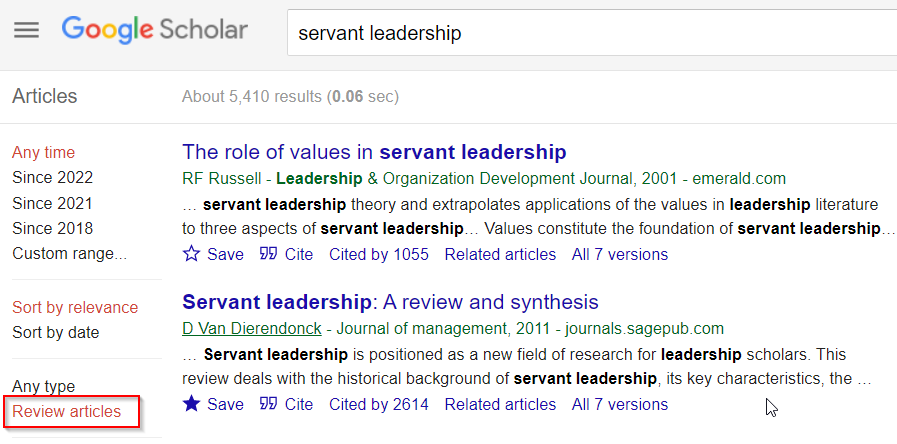

Google Scholar adds review article filter

In a past ResearchRadar piece, I wrote about 3 major ways to find review papers, systematic reviews, meta-analyses and other rich sources of references to kick-start your literature review. Back then, the only way to find reviews in Google Scholar was to use a keyword search including the word “review”.

Fast forward a year and it seems Google Scholar now has a “Review article” type facet. This seems to have been silently added in the last few months, as there was no announcement at all on the Google Scholar blog.

Librarian’s Take: There is currently no information on how Google Scholar identifies “Review articles”, nor is there any study comparing the effectiveness of this filter against other methods (though I’m sure there will be some eventually). As a quick and dirty way to identify reviews, however, this seems usable.

Zotero updates to 6.0 and adds built-in PDF reader

The popular open-source reference manager Zotero has officially been updated to 6.0. Among the feature updates is a new built-in PDF reader within the main Zotero window. This is a feature that many Zotero users have long been waiting for, and it allows Zotero to match the functionality available in alternative reference managers like Mendeley.

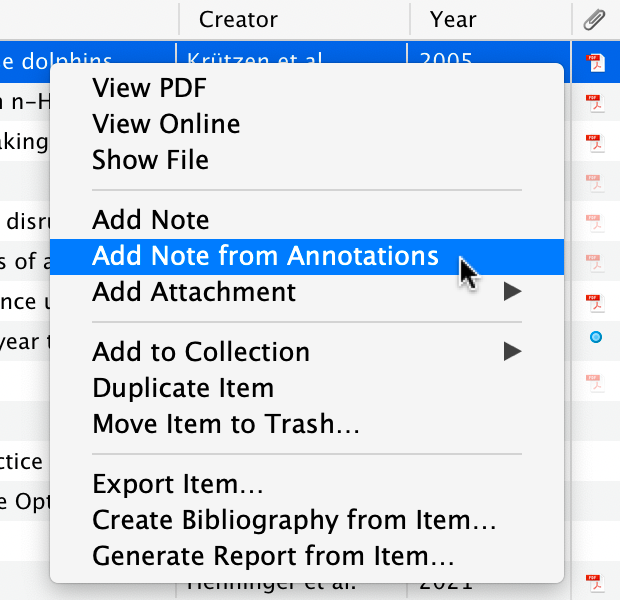

Zotero 6.0 now also allows you to annotate the PDF and convert annotations to a Zotero note with a few clicks. (This duplicates part of the functionality of the Zotero extension ZotFile.) Note that the annotations added to the note are special links: when you click on one, it opens the PDF and brings you to the section where the annotation was made.

A somewhat niche feature: you can now also export your notes in Markdown without the use of a separate Zotero extension such as Mdnotes for Zotero. This can be useful if you have a workflow that synchronises your Zotero annotations with a productivity tool that accepts Markdown input, like Notion, Obsidian, Logseq or Roam Research (watch the video "How the Zotero 6 update changed my workflow" on this workflow).

Read the "Zotero Version History" for the full change log.

Librarian’s Take: If you are a regular Zotero user, there is probably little risk in upgrading, as 6.0 has been in beta for months. That said, if you have a very customised Zotero setup and are using specific Zotero plugins, some of them may no longer work.

This is partly because some plugins offer functionality that is now mostly built into the upgraded Zotero, and partly because some developers have not yet updated their plugins for 6.0.

In such cases, you might want to be cautious about upgrading.