By Aaron Tay, Head, Data Services

As researchers, you are probably familiar with how the latest search engines now leverage AI, in particular large language models, to improve their capabilities, from improving the relevance of results to generating answers that summarize the top-ranked results via Retrieval Augmented Generation.

In past Research Radar articles and webinars, we have introduced academic AI search tools to the SMU community, such as Scopus AI (trial), Web of Science Research Assistant (trial), SciSpace, Undermind, scite.ai assistant, Elicit.com (freemium only) and more.

However, in Feb 2025, OpenAI introduced a new search feature named "Deep Research", which led to many similar product offerings from competitors. (Note: at the time of writing, Deep Research is available only to ChatGPT Plus and Pro users.)

But what is Deep Research and how does it differ from past AI search features?

What is "Deep Research"?

| | Response time | Length of output |
|---|---|---|
| Typical AI search engines | Fast, at most a few seconds | Short, typically a few paragraphs |
| "Deep Research" | Slow, can take minutes if not hours | Long report form, typically pages long |

OpenAI's use of the label "Deep Research" is, in fact, not unique. Google launched Gemini Deep Research in Dec 2024, before OpenAI's announcement, while Perplexity launched its own Deep Research two weeks after OpenAI's announcement. Grok3, launched in the same timeframe, also has a similar feature dubbed Deep Search.

In fact, the first "Deep Research" project is probably STORM by Stanford University, which became available in early 2024 and aims to "write Wikipedia-like articles from scratch based on Internet search."

But how do "Deep Research" tools differ from typical answers generated by AI search tools like Bing Copilot, Perplexity.ai or even academic versions like Scite.ai assistant, Scopus AI etc.?

While some have been sceptical of the appropriateness of the title "Deep Research", given that many of these tools do broad surveys (more breadth-first than depth-first search), there is some general consensus on what "Deep Research" means and how these tools differ from typical AI generation tools.

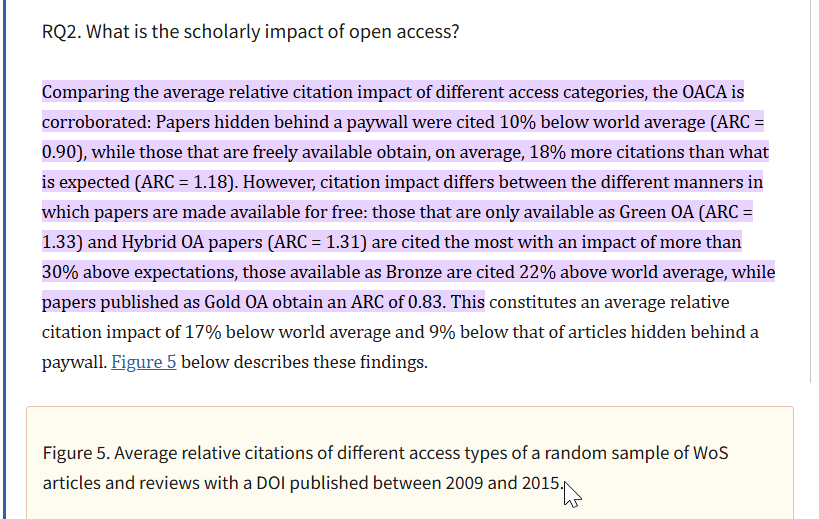

One difference is the length of the output. Unlike typical AI search engines, which provide generated answers a few paragraphs long, "Deep Research" products are meant to produce report-length outputs (the Report Generation task in NLP).

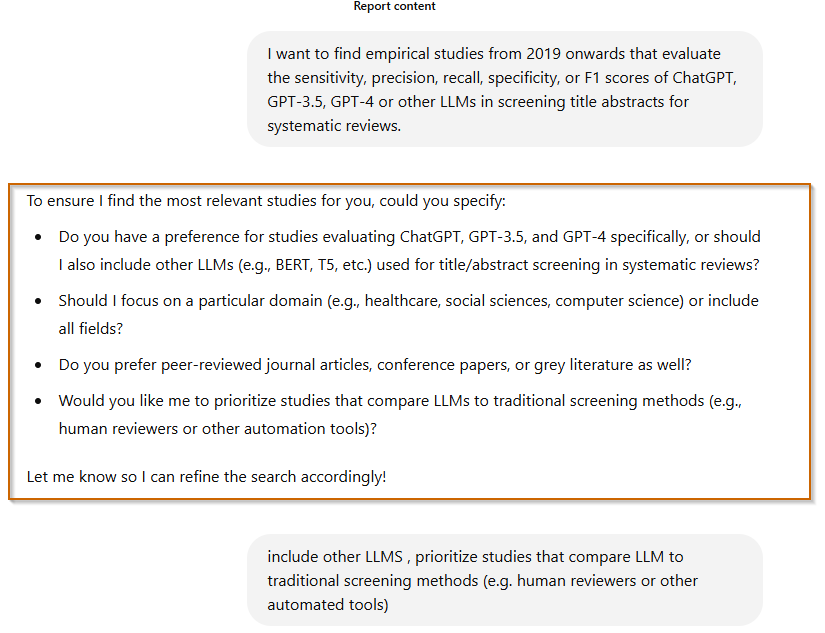

Compare the following answer from Scite assistant and the subsequent report from OpenAI's Deep Research.

This increased length comes with a cost, mainly in terms of longer response time. While typical AI search tools take at most a few seconds, "Deep Research" tools will take a few minutes, if not hours, to generate.

One way to think of "Deep Research" is that it is doing "deep search": running iterative searches to find suitable content to cite. Another way to think about it is that it acts as an agent (OpenAI's Deep Research uses o3, a reasoning model) that decides how and what to search for.

If this sounds familiar, it is because this is how Undermind.ai's search works, which is why Undermind takes at least 5 minutes to return results.

The higher latency is, of course, also due to the need to generate a lengthy report, which involves "deeper" searching to find more content as well as a more complicated structured generation method. For example, one method would be to break down the query into sub-questions, which would form sections, and have the system attempt to find answers for each section. For more technical details on how this might work, see this blog.
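The sub-question approach described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular product works: `TOY_INDEX`, `decompose`, `search`, `research_section` and `build_report` are hypothetical names, the planner is a hard-coded stand-in for an LLM call, and retrieval is simple keyword matching over three made-up documents.

```python
from dataclasses import dataclass, field

# Made-up corpus standing in for a web or academic index (assumption).
TOY_INDEX = {
    "doc1": "deep research tools run many iterative searches before writing a report",
    "doc2": "generated reports can contain hallucinated citations",
    "doc3": "paywalled papers are often invisible to web search agents",
}


@dataclass
class Section:
    question: str
    sources: list = field(default_factory=list)
    rounds: int = 0


def decompose(query):
    """Hypothetical planner: a real system would ask an LLM for sub-questions."""
    return [f"how {query} tools search", f"limits of {query} citations"]


def search(term):
    """Toy retrieval: ids of documents that contain the term as a whole word."""
    return sorted(d for d, text in TOY_INDEX.items() if term in text.split())


def research_section(question, min_sources=2, max_rounds=4):
    """Search iteratively, one keyword per round, until enough sources are found."""
    keywords = [w for w in question.lower().split() if len(w) > 3]
    section = Section(question)
    for term in keywords[:max_rounds]:
        section.rounds += 1
        for doc_id in search(term):
            if doc_id not in section.sources:
                section.sources.append(doc_id)
        if len(section.sources) >= min_sources:
            break  # stop early once the section has enough material to cite
    return section


def build_report(query):
    """One report section per sub-question."""
    return [research_section(q) for q in decompose(query)]


if __name__ == "__main__":
    for s in build_report("deep research"):
        print(f"{s.question}: {s.sources} after {s.rounds} search rounds")
```

Raising `min_sources` or `max_rounds` makes the loop search for longer, which is one simple way to see why "deeper" searching translates into higher latency.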

There are, of course, many ways to do report generation, and this post tries to classify the different "Deep Research" tools along two dimensions:

- Deep/Shallow - how much or how long the system searches iteratively

- Handcrafted/Trained - how much of the system is set up as agentic workflows (with handcrafted prompts) and how much of it uses LLMs as agents that have been trained end to end

How good are these Deep Research products?

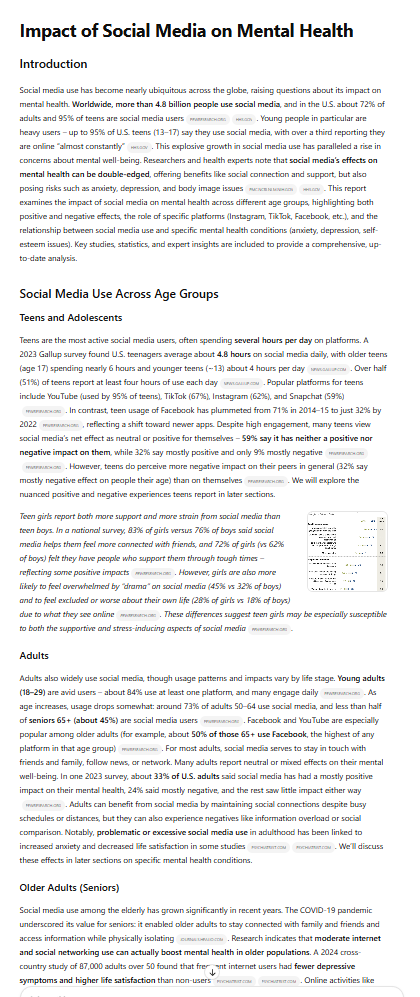

It is still early days, but here are some sample reports from each of these products for you to look at:

- OpenAI Deep Research (Sample Report)

- Gemini Deep Research* (Sample Report)

- Stanford University's STORM* (Sample Report)

- Perplexity Deep Research* (Sample Report)

- Grok3 Deep Search* (Sample Report)

*Free to try

In general, a common view is that OpenAI's Deep Research is the best of the bunch (probably because it uses the as yet unreleased o3 model): it tends to generate longer and more nuanced reports, possibly with lower hallucination rates than the others.

However, even OpenAI's Deep Research is far from perfect and has several issues from the academic point of view.

First, none of the generated in-text citations are in a standard academic citation style.

A bigger issue is that OpenAI Deep Research's search is the equivalent of using a web browser to search the general web. While it can, and is often smart enough to, access preprints, open access papers, PubMed etc., it is not able to access the full text of papers behind paywalls. One minor impact of this is that it will often reference a journal article as a website!

While you can try to specify that you want only scholarly or peer reviewed works to be cited in the report, this does not always work, and I have seen generated reports that cite library guides and even blogs written by librarians.

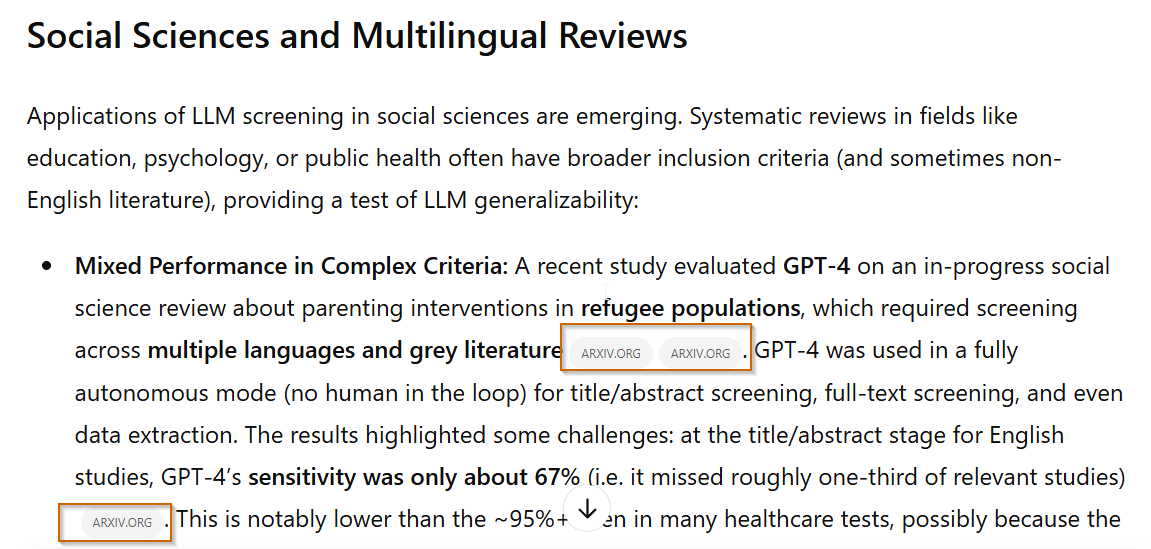

At the end of the day, "Deep Research" uses techniques that are akin to, or variants of, the standard Retrieval Augmented Generation technique, which means its generated output comes with all the usual issues of AI generated output. For example, for many topics, you will find that despite doing "Deep search" and spending "more effort" searching, it still does not cite all of the obviously relevant papers, or it may cite papers for unusual or totally wrong reasons.

And of course, the biggest issue is that of hallucinations, which still persist in Deep Research generated answers.

Academic Deep Research products

The "Deep Research" products mentioned above are generally designed to search the general web, and while they can be prompted to focus on academic or scholarly works, this does not always work. So why not use the same idea of "Deep Research" (high latencies, long outputs) but focus only on searching academic indexes?

At the time of writing, there are three such products that have gone with this line of thinking (no doubt more will follow):

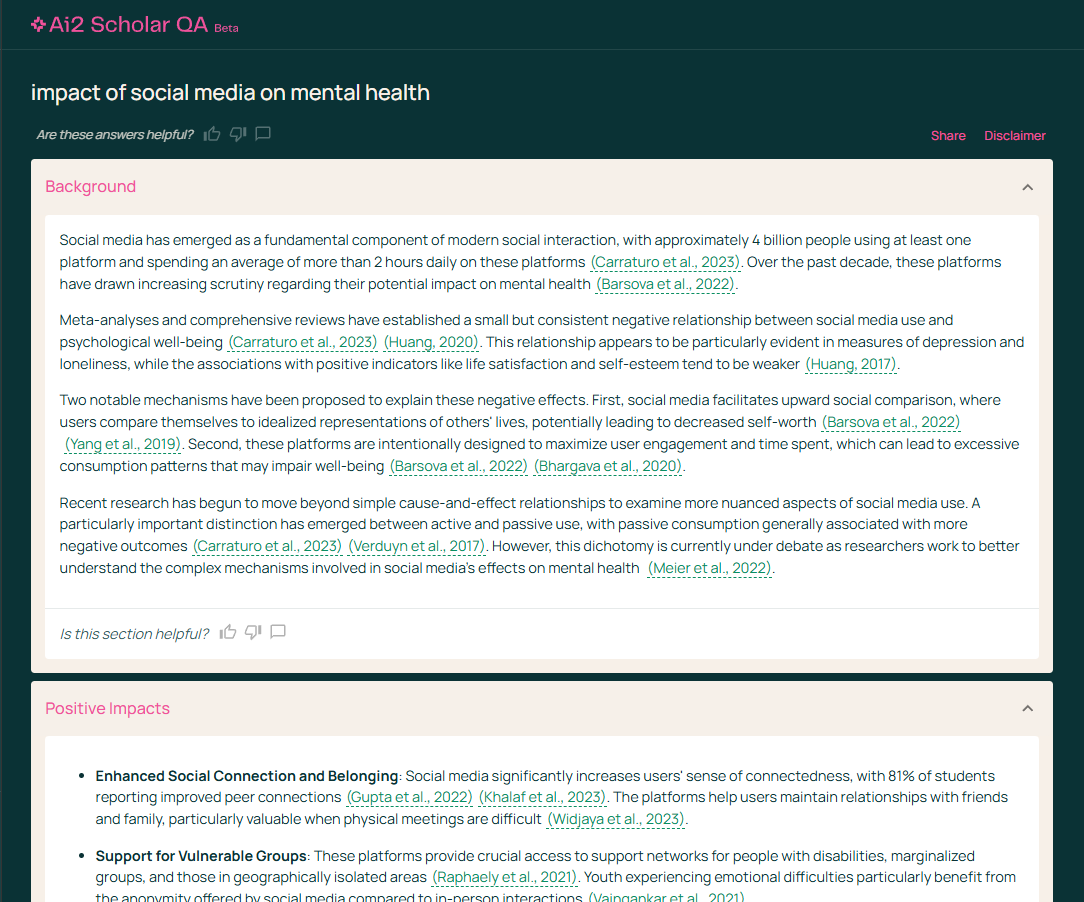

- Ai2 ScholarQA* (Sample Report)

- SciSpace Deep Review (Sample Report)

- Elicit Research Report/Systematic Report (Sample Report)

All three services search academic-only indexes, ensuring that all citations are to something academic (no blogs), and they are often much faster because they search one centralised academic index (e.g. the Semantic Scholar corpus) rather than trying to search multiple web sources. As a bonus, the citation style used is a standard academic style.

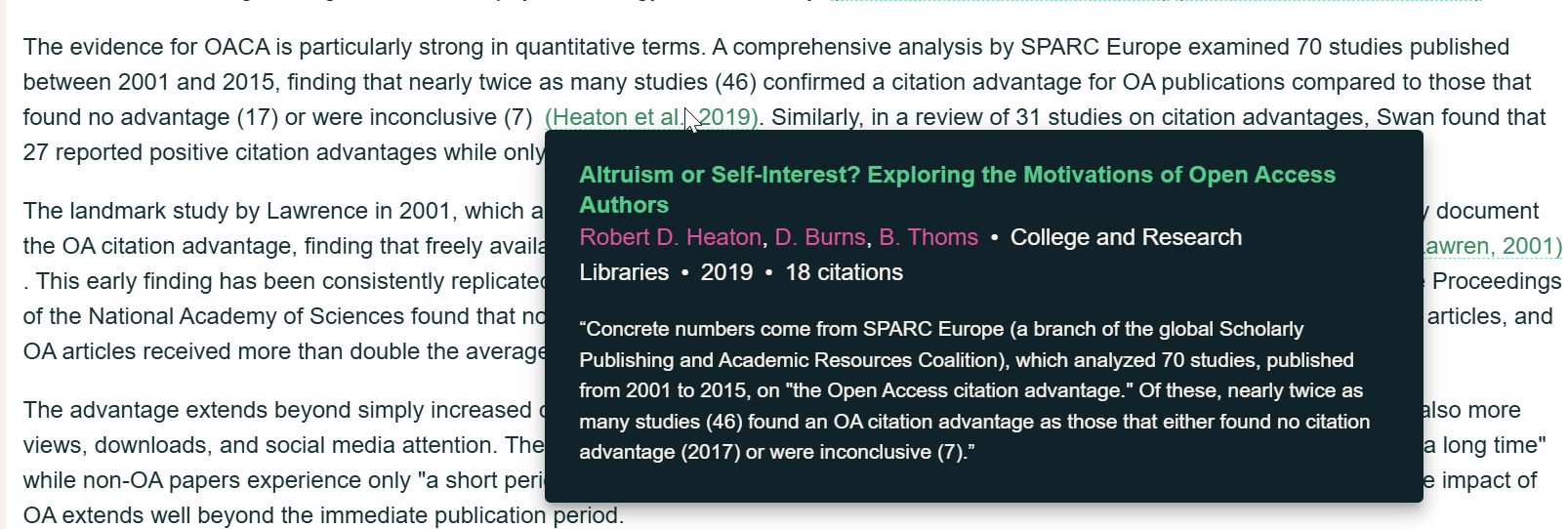

Compared to the general web Deep Research tools, these tools often make it easier to check the validity of citations made.

Take OpenAI's Deep Research: when you click on any citation, you may see a webpage with highlighted text, which allows you to check the portion of the document that was used to create the citation.

However, this feature is not ideal because it only works in certain browsers like Chrome, and it does not work if the citation goes to content that is not a webpage, such as a PDF.

Academic Deep Research tools generally implement a mouse-over feature that is more reliable and makes checking easier. For example, AI2 Scholar QA's mouse-over feature lets you see the chunk of text the LLM used for a citation, allowing easy checking for hallucinations. (Elicit Research Report has a similar feature.)
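The check that such a feature supports can also be approximated by hand when you have the source text. The following is a minimal sketch, assuming you have copied the quoted chunk and the source passage yourself; `normalize`, `chunk_in_source` and `unsupported_citations` are hypothetical helper names, not part of any tool mentioned here. It only confirms that the quoted text really appears in the source, not that the report's claim follows from it.

```python
import re


def normalize(text):
    """Lower-case and collapse whitespace so line breaks do not block a match."""
    return re.sub(r"\s+", " ", text).strip().lower()


def chunk_in_source(chunk, source_text):
    """True if the quoted chunk appears verbatim (after normalizing) in the source."""
    return normalize(chunk) in normalize(source_text)


def unsupported_citations(citations, sources):
    """Return ids of citations whose chunk is missing from the cited source.

    citations: list of (citation_id, source_id, quoted_chunk) tuples
    sources:   dict mapping source_id to the source's full text
    """
    return [
        cid
        for cid, sid, chunk in citations
        if not chunk_in_source(chunk, sources.get(sid, ""))
    ]


if __name__ == "__main__":
    # Made-up example data for illustration only.
    sources = {"paper_a": "Iterative search   improves recall\nfor long reports."}
    citations = [
        (1, "paper_a", "iterative search improves recall"),
        (2, "paper_a", "iterative search halves cost"),
        (3, "paper_b", "anything"),  # source text not available
    ]
    print(unsupported_citations(citations, sources))
```

A citation that passes this check still needs a human read: the chunk may exist in the paper and yet be cited for an unusual or wrong reason.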

Ai2 ScholarQA, like STORM by Stanford University, is a free research prototype launched recently. It searches the Semantic Scholar corpus. The main weakness of this tool is that it is limited to 8M open access full-text papers (less than the total amount of open access content available) and has at best the titles and abstracts of the rest. It probably works better for computer science queries.

SciSpace Deep Review is an even newer feature in SciSpace. It works pretty much the same way as OpenAI's Deep Research except it searches SciSpace's own centralised index of papers.

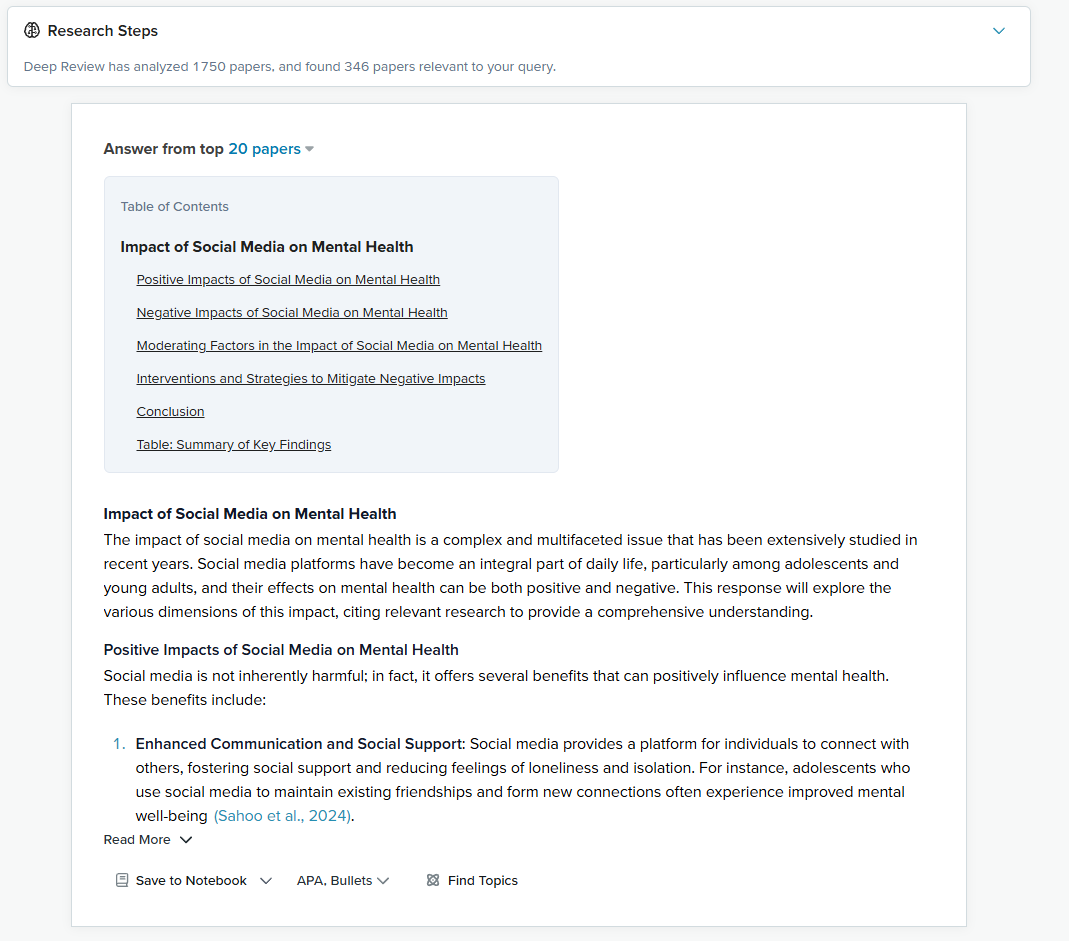

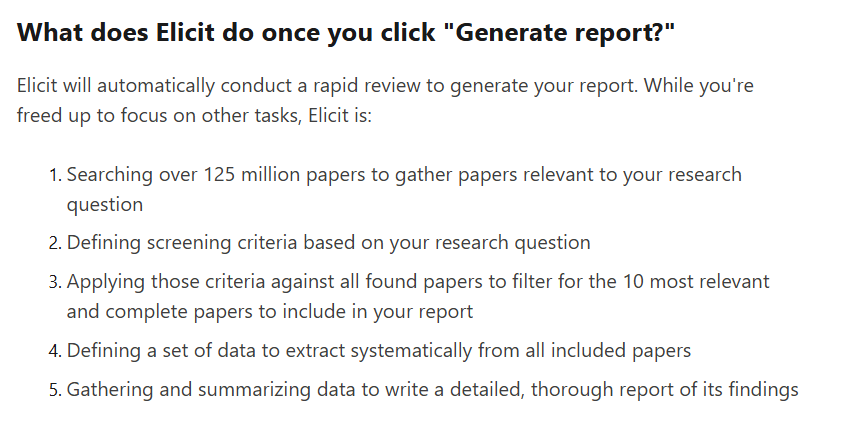

Lastly, we have Elicit.com's research reports.

On the surface, this works very much like all the other deep research services. However, it is based on a screening methodology akin to those used in systematic reviews. In fully automated mode, everything is done for you.

The process that runs when you click on generate report is shown below.

Unlike with other products, if you have an Elicit.com Pro account, you can edit each of the steps, such as changing the screening criteria, screening more papers in or out, adding or removing data extraction columns, or changing column instructions, giving you unprecedented control.

This approach currently looks very promising, though access to it is not cheap, and at the time of writing the free Elicit account only allows you to generate reports with a maximum of 10 references.

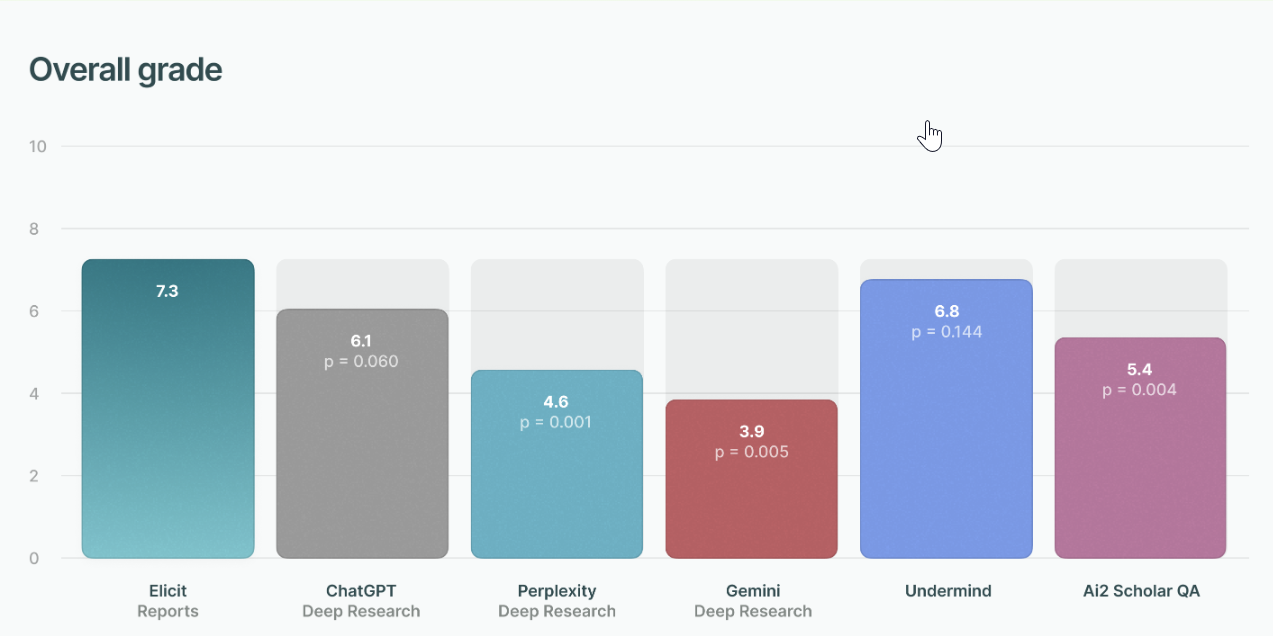

A very recent evaluation report by 17 PhD researchers, sponsored by Elicit.com, unsurprisingly ranks Elicit.com first overall (7.3), with Undermind.ai (subscribed to by SMU) coming second with an overall rating of 6.8. They rate OpenAI's Deep Research third (6.1), with AI2 Scholar QA next at 5.4. Bringing up the rear are Perplexity Deep Research (4.8) and Gemini Deep Research (3.9).

In a sense, these results are not surprising: the academic-specific tools are clearly superior to the general ones, with the exception of OpenAI's Deep Research, which uses a specially fine-tuned frontier model based on the as yet unreleased o3 model.
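To make that comparison concrete, the six overall ratings quoted above can be averaged by group. This is a small sketch, not part of the original evaluation: the split into academic and general tools is an assumption that follows this article's framing, and `RATINGS` and `group_means` are illustrative names.

```python
from statistics import mean

# Overall ratings as quoted above from the Elicit-sponsored evaluation.
# The "academic" / "general" labels are this sketch's assumption.
RATINGS = {
    "Elicit": ("academic", 7.3),
    "Undermind": ("academic", 6.8),
    "OpenAI Deep Research": ("general", 6.1),
    "AI2 Scholar QA": ("academic", 5.4),
    "Perplexity Deep Research": ("general", 4.8),
    "Gemini Deep Research": ("general", 3.9),
}


def group_means(ratings, exclude=()):
    """Mean overall rating per group, rounded to two decimals."""
    groups = {}
    for tool, (group, score) in ratings.items():
        if tool not in exclude:
            groups.setdefault(group, []).append(score)
    return {group: round(mean(scores), 2) for group, scores in groups.items()}


if __name__ == "__main__":
    print(group_means(RATINGS))
    # Same comparison with the one general-web outlier left out.
    print(group_means(RATINGS, exclude=("OpenAI Deep Research",)))
```

With these figures, the academic group averages 6.5 against roughly 4.9 for the general group, and the gap widens once OpenAI's Deep Research is excluded. With only six tools from a single sponsored evaluation, these averages are illustrative rather than conclusive.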

Conclusion

We are currently in the earliest days of "Deep Research" tools, and it is too early to tell how useful and reliable such tools are. We currently advise deep caution when using these tools. As a start, test them out in areas where you have deep domain expertise to get a sense of their weaknesses and strengths.

SMU Libraries, with the help of the SMU community, will continue to monitor and study these tools.

If you have tried any of these tools, do let us know how it worked out for you!