By Aaron Tay, Head, Data Services

With the rise of "AI", and particularly generative AI, more and more researchers are using AI-enhanced tools to help with literature searching, writing and more.

In response, publishers have issued policies and guidelines on AI use for authors submitting manuscripts (typically as standalone statements or within editorial and submission policies). Here are some examples:

- Sage

- Elsevier

- Wiley

- Cambridge University Press

- Taylor & Francis

- IEEE

- Springer-Nature

Some commonalities between statements

You should carefully read the AI policy of the journal you are submitting to, but most policies share the following common features.

1. ChatGPT or AI tools should not be listed as an author or co-author

For example, Elsevier states:

"Authors should not list AI and AI-assisted technologies as an author or co-author, nor cite AI as an author. Authorship implies responsibilities and tasks that can only be attributed to and performed by humans. Each (co-) author is accountable for ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved and authorship requires the ability to approve the final version of the work and agree to its submission."

This should be simple enough.

2. Do not use AI-generated images or videos in manuscripts (with exceptions)

Here is what Nature says:

"The fast-moving area of generative AI image creation has resulted in novel legal copyright and research integrity issues. As publishers, we strictly follow existing copyright law and best practices regarding publication ethics. While legal issues relating to AI-generated images and videos remain broadly unresolved, Springer Nature journals are unable to permit its use for publication."

The statement goes on to list exceptions to this rule, including, but not limited to, cases where the research itself is about the use of AI:

"Images and videos that are directly referenced in a piece that is specifically about AI and such cases will be reviewed on a case-by-case basis."

They also note that not all AI tools are generative, and that the

"use of non-generative machine learning tools to manipulate, combine or enhance existing images or figures"

is allowed but should be disclosed.

As an aside, many publishers also discourage or forbid the use of generative AI for peer review, in particular prohibiting reviewers from uploading manuscripts into ChatGPT, since doing so breaches confidentiality.

For example, Springer Nature says:

"while Springer Nature explores providing our peer reviewers with access to safe AI tools, peer reviewers do not upload manuscripts into generative AI tools."

3. Disclosure of AI use (generative or otherwise) is key

What comes through most clearly to me across the various guidelines is the consensus that you should disclose when you use AI tools (not just generative AI tools).

Many guidelines, such as Elsevier's, make exceptions for spelling and grammar checkers, but it is unclear how much use, and what type of tool ("AI" is a broad term), triggers the need to disclose. Some statements suggest that when in doubt, you should disclose.

Some publishers, like Sage, recommend that you disclose the use of language models in the manuscript in the methods or acknowledgements section, including which model was used and for what purpose.

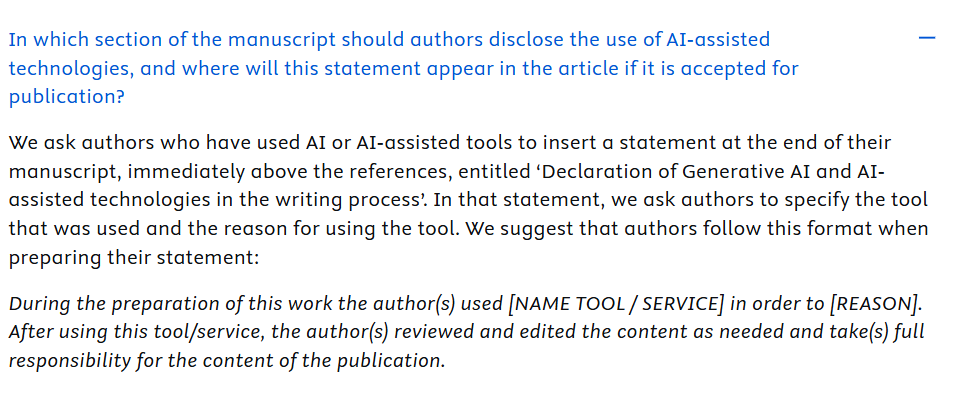

Elsevier perhaps has the clearest instructions on disclosing AI use. Authors are required to disclose their use of AI or AI-assisted tools in a section titled "Declaration of Generative AI and AI-assisted Technologies" at the end of their manuscript, and Elsevier even provides a rough template for the statement!

Concrete examples of disclosure of AI use

Because AI use disclosures in Elsevier journals always appear under the same heading, they are easy to find with a simple search.

For your reference, below are some examples of how authors publishing in Elsevier journals disclose their AI use. I've deliberately chosen papers whose topics are NOT about AI. Interestingly, I have even seen the equivalent of "null returns", where authors state under this section that they have not used any AI or AI-assisted tools.

We start with some simple, common uses of generative AI for rewriting and paraphrasing.

"During the preparation of this work the authors used Copilot (Microsoft) to reword and rephrase text. After using this tool/service, the authors reviewed and edited the content as needed and take full responsibility for the content of the publication" (citation)

"During the preparation of this work, the authors used Chat Generative Pre-Trained Transformer (ChatGPT; OpenAI, San Francisco, CA, USA) to enhance readability and language, aiding in formulating and structuring content. After using this tool, the authors reviewed and edited the content as needed and take full responsibility for the content of the publication." (citation)

Next, we move on to disclosures of "AI search" tools.

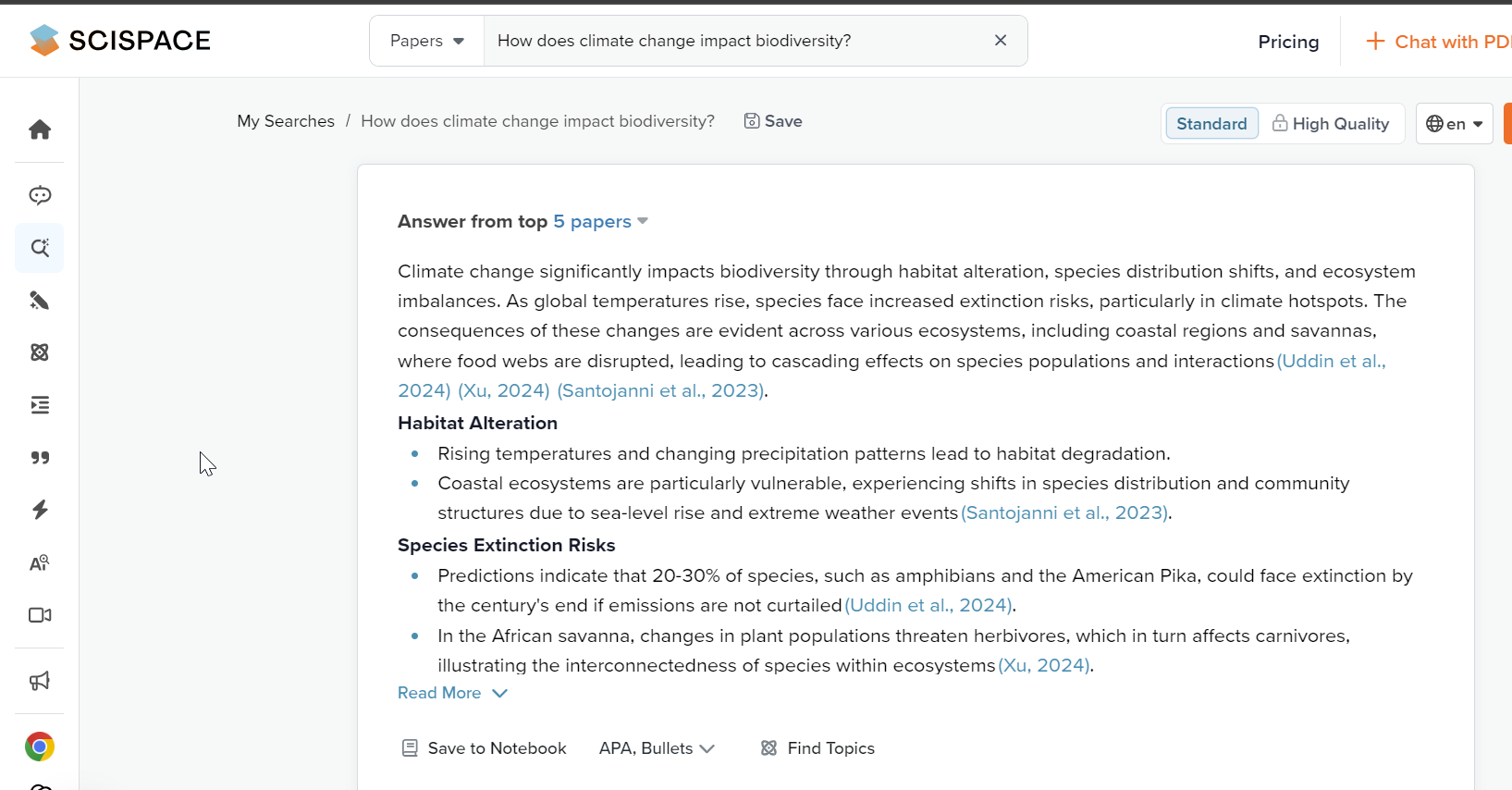

"The author used Grammarly to enhance the writing quality during the revision process. Additionally, the author utilized other AI tools, such as SCISPACE and Elicit, to search for relevant literature while revising the manuscript. After using these tools, the author reviewed, edited, and took full responsibility for publishing the content." (citation)

In my opinion, disclosing the use of search tools is something of a grey area, particularly if all you do with "AI tools" like Elicit, SciSpace or Undermind.ai is look through the results they surface. That is no different from using Google Scholar or traditional databases, whose use is typically not disclosed.

But what if you use the generated summaries or paraphrases of papers in search engines like Undermind or SciSpace to decide what to read further? That is definitely an application of generative AI! The rules are unclear, but again, to play it safe you may want to disclose.

And what if you rely on the answers generated by search engines like Elicit, SciSpace or Undermind? I would recommend against simply copying and pasting generated text or answers from such tools into your manuscript without checking and editing them (see the warning from Elicit.com below). But if you do use generated text, even after editing and checking it, you should definitely disclose!

The exception to all this, of course, is if you are writing a review-type paper. In such cases, particularly systematic reviews, disclosing all the search tools used is standard practice.

We end with an extra-long, elaborate disclosure statement:

"During the preparation of this work the authors used SCISPACE (https://typeset.io/) and ResearchRabbit (https://researchrabbitapp.com/home) to search for relevant literature. For SCISPACE, we used prompts related to "Good Modelling Practices in Ecology", "Good Modelling Practices in Science", "Reproducibility in Science", and "Reusability in Science". The manuscripts to be read were then selected from the suggested ones by reading the title and abstract similar to what is done when searching through Google Scholar. To ensure we found all important literature related to the theme, two fundamental manuscripts (Ihle et al., 2017;Powers and Hampton, 2019) were added to ResearchRabbit. The network of cross-referenced manuscripts created by identifying "similar work" was then used as a starting point for literature review. ChatGPT 4.0, ChatGPT 4.0o, DeepL Translator, and DeepL Write were used for edits and improvements of some sentences. After using these tools, the authors reviewed and edited the content as needed and take full responsibility for the content of the publication" (citation)

The extra focus on search tools here is because, although this paper is not a systematic review, it is largely a narrative literature review.

Conclusion

Most journals only started grappling with the use and disclosure of generative AI (and AI in general) in late 2023 and 2024, so we are still very early in this journey. There is a lot of uncertainty about when and what to disclose, as norms and practices around the use of such tools are still forming.

As always, if you are unsure, check directly with the journal editors.