By Aaron Tay, Head, Data Services

From 3 to 5 June 2026, Singapore Management University (SMU) will host FORCE2026, the annual conference of FORCE11, which will be held in Asia for the first time. The conference is expected to bring around 200 researchers, publishers, librarians, funders, and technologists to campus.

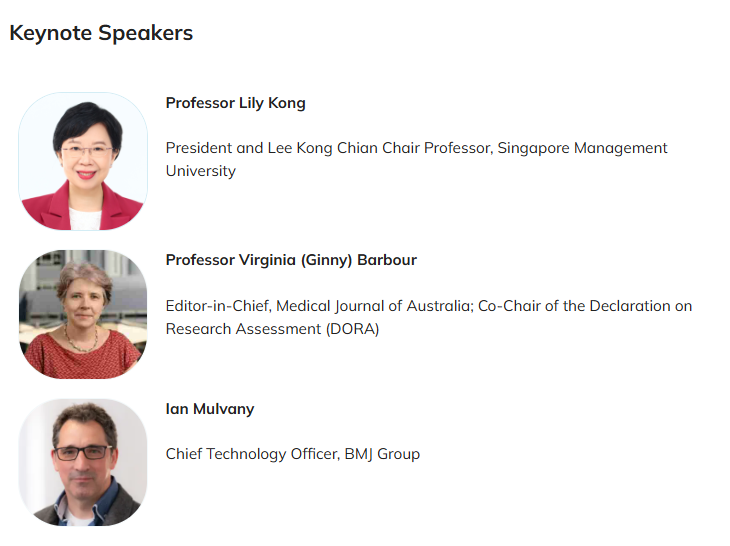

President Lily Kong will deliver the opening keynote, offering a perspective on research assessment and impact from an institutional leadership lens.

She is joined by closing keynote speaker Professor Virginia Barbour, Co-Chair of DORA (Declaration on Research Assessment), who will address how research quality should be measured in the 21st century.

A third keynote comes from Ian Mulvany, Chief Technology Officer of BMJ Group, who brings deep industry experience. A recognized voice on AI and digital transformation in scholarly publishing, he will address how major publishers are navigating technological disruption.

Because it's happening "on our doorstep", this is a rare chance for SMU faculty and researchers to (a) hear what's changing in scholarly publishing and assessment before it reaches policy, (b) meet the people (researchers, funders, journal editors, and vendors) shaping those changes, and (c) bring back practical ideas they can use in grant, promotion, and impact conversations.

A tentative programme is now available on the conference website.

Interested in joining us?

- Dates: 3–5 June 2026 (pre-conference 2 June)

- Venue: SMU campus

- Early bird rate: USD 275 until 28 February

- Registration site: https://event.fourwaves.com/force2026/

Why should SMU researchers care more broadly?

The FORCE11 community, the organization behind this conference, has quietly shaped practices that now affect most researchers. If you've encountered a grant requirement to make your data "FAIR" (findable, accessible, interoperable, reusable), that framework emerged from early discussions within this community. The same is true of the Data Citation Principles and the Software Citation Principles, which guide how research data and software should be cited.

Meet international experts and innovators and hear their thoughts on the evolving landscape of academia and research.

Who should attend?

The 3-day programme is broad, but three groups will find directly relevant content:

- Researchers / PIs / University administrators concerned with how research is evaluated (assessment reform, rankings, and evidence of impact beyond citations)

- Researchers using AI tools across the research cycle (reliability, reproducibility, and critical evaluation of AI-powered tools)

- Faculty who are journal editors (Generative AI policies, integrity infrastructure, and other pressures reshaping publishing)

This ResearchRadar piece focuses on the first audience: those interested in research assessment and impact. The next two instalments will cover researchers using AI in research and faculty with journal editorial responsibilities.

Researchers / PIs / University administrators concerned with how research is evaluated

Globally, the research assessment community has come to recognize that we should gradually move away from "where you published" toward "what you contributed and what changed because of it." But this is easier said than done. FORCE2026 has multiple sessions that grapple with the practical side of that shift: what evidence counts, how to build credible narratives, and how institutions should (and shouldn't) use metrics and rankings.

Here are some selected sessions that might be of interest:

- Pre-Conference Highlight (2 June):

- The discussion begins early with a dedicated joint event by DORA (Declaration on Research Assessment) and CoARA (Coalition for Advancing Research Assessment). CoARA is one of the major international efforts to coordinate assessment reform, and this specialized session will focus entirely on sharing experience with the practical implementation of responsible research assessment.

- Keynotes:

- Professor Lily Kong (Day 1) — perspectives on research assessment from an institutional leadership lens

- Professor Virginia Barbour (Day 3) — the DORA Co-Chair on responsible assessment from her dual perspective as a journal editor and reform advocate

- Selected Sessions on building impact narratives:

- Researcher Impact Framework: Building Audience-Focused Evidence-Based Impact Narratives — DORA's Giovanna Lima on constructing compelling cases for your research contributions that go beyond citation counts

- Recognizing and Rewarding Peer Review: a look at how assessment frameworks (developed across 16 European organizations) can account for the invisible labour of reviewing

- Selected Sessions on data and diverse outputs:

- Recognizing Research Data in Tenure & Promotion: Turning Policy Principles into Practice, a panel from Make Data Count/DataCite addressing the gap between policy statements ("we value data sharing") and actual hiring and promotion decisions

- How can scholarly publishing be part of a solution for assessment reform rather than part of the problem? — perspectives from PLOS on what publishers can do differently

- Bridging Metrics and Meaning: Integrating Traditional and Alternative Indicators for Responsible Research Assessment – Experience from University of Bath in the UK

- Selected Sessions on rankings:

- Beyond League Tables: Open Evidence and Fair Use of Rankings in Universities, a panel bringing together senior university leaders, a citation metrics provider, and a university rankings organization to examine how rankings data should (and shouldn't) inform institutional decisions, including questions of transparency and methodology

Coming up in ResearchRadar

In the next two instalments, we'll highlight sessions relevant to two specific audiences:

- Researchers using AI tools: A substantial track examines the reliability, reproducibility, and critical evaluation of AI-powered tools—essential reading if you're integrating these tools into your workflow.

- Faculty with editorial responsibilities: Sessions address generative AI policies, integrity infrastructure, and the other pressures that are reshaping scholarly publishing.