By Aaron Tay, Head, Data Services

After months—if not years—of wrestling with an interesting, novel research question, wrangling data and analysis, and finally writing up the results, what's next?

For some researchers, the next step is publicising their work on a preprint server such as SSRN or arXiv; others also post on social media like LinkedIn or X.

But a PDF is a static, often uninspiring artefact. Can AI help you convert your working paper into something more engaging or eye-catching?

This preliminary guide explores three ideas:

- Audio & Video Overviews: services such as Google NotebookLM transform a paper into a short podcast-style conversation or a narrated micro-lecture with slides.

- Visual Abstracts & Posters: modern large language models and slide generators can condense core findings into a one-slide HTML panel or share-ready graphic.

- AI-Generated Slide Decks: tools like Z.ai's Magic Slide and Microsoft 365 Copilot draft ten to forty editable slides, complete with figures.

Each method starts with nothing more than your existing PDF and a prompt, yet opens different doors to visibility: headphones on a commute, a poster wall at a conference, or the projector in a seminar room. The pages ahead show how and when to use each option, how to steer the AI for precision, and why you should still fact-check everything as these new tools evolve.

Note: In the examples below I use a working paper I wrote in 2016 that was never published in a journal, so the rights to the content remain with me (the author). If you're using published versions of papers, especially if you transferred rights to publishers, do check their policies on AI use.

1. Create Audio and Video Overviews (Google NotebookLM) from Your Paper

Earlier this year, we wrote about Google's NotebookLM, which had gone viral for its Audio Overview feature.

If you have never tried it, Audio Overview generates an uncannily human conversation between two hosts who discuss the content you've added to NotebookLM.

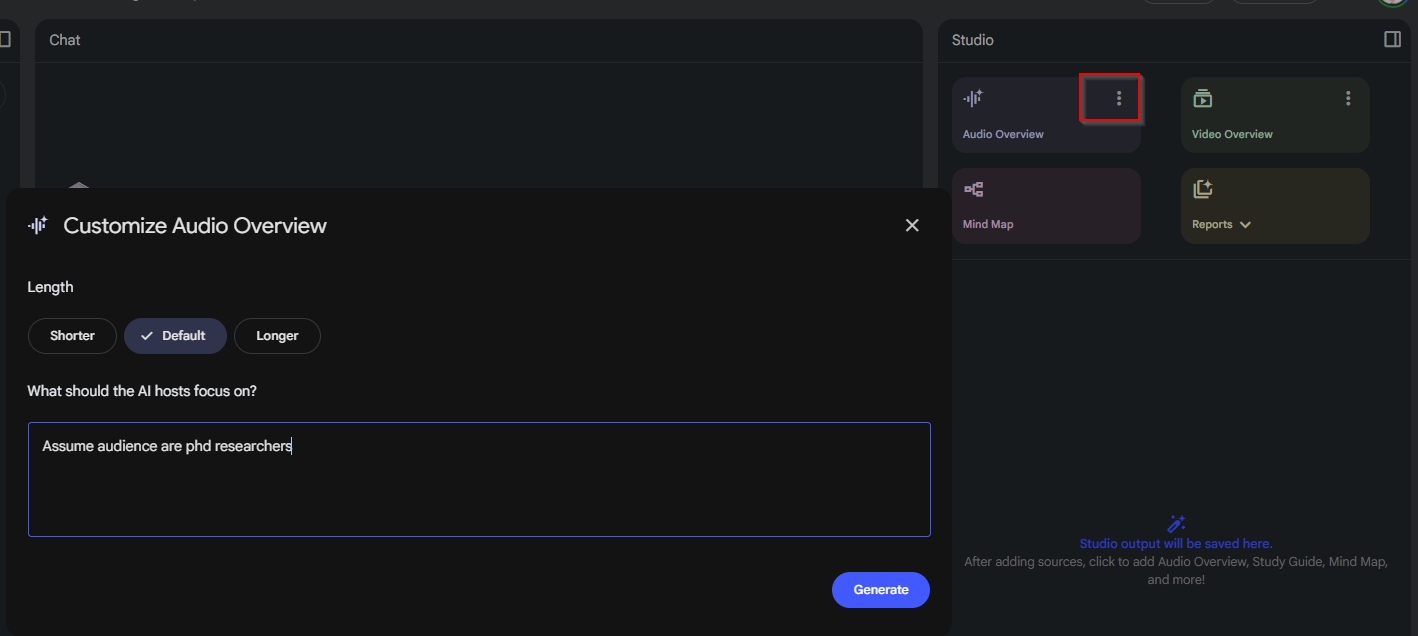

As I noted in my piece at the time, by default Audio Overview presents the content at a very general, lay level. You should consider customising it by specifying the target audience (e.g., PhDs) and by asking it to stick closely to the source; otherwise it may drift into related, but not identical, topics.

Here's an example of an Audio Overview of my 2016 working paper/preprint.

NotebookLM has since added several features, including:

- Interactive mode, which lets you converse with the "hosts" in the middle of the Audio Overview.

- Mind-map and quiz generation.

- An interface overhaul that allows you to generate multiple overviews in parallel.

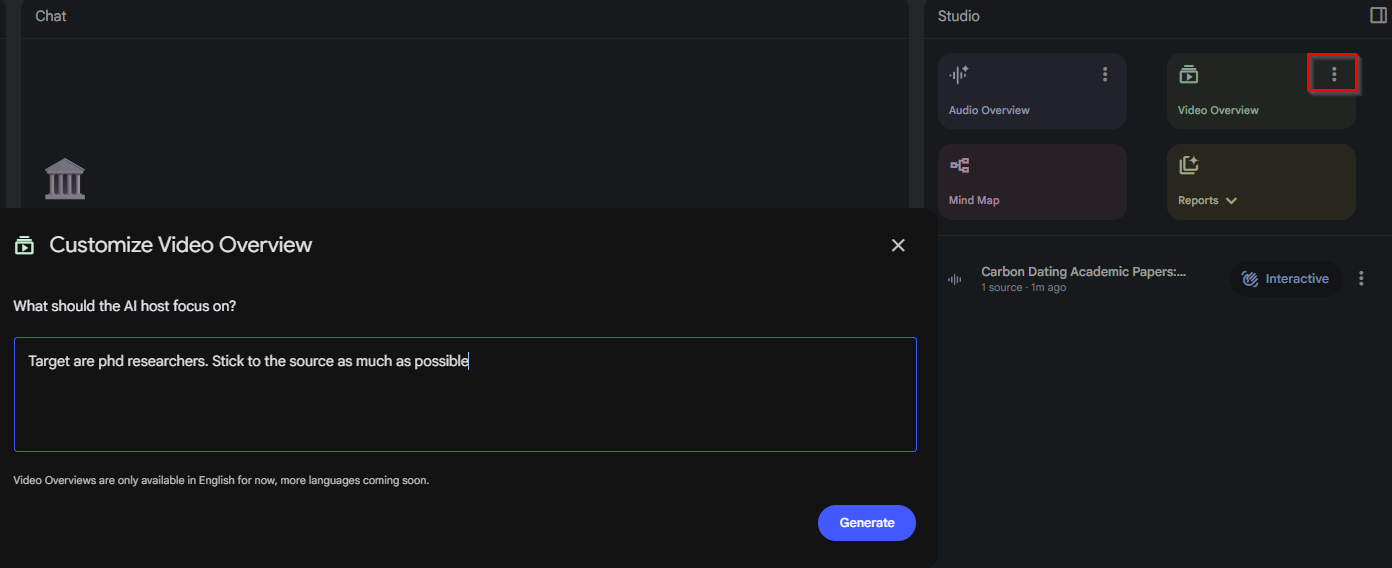

The most notable addition is the Video Overview feature. It works similarly to Audio Overviews with custom instructions, but produces a short "video" instead of just audio.

How well does it work? Here's one example using the same preprint.

Tip: Specify the exact presentation title in your customised prompt. Otherwise NotebookLM may choose its own title. In one run it titled the video "The Library Paradox: When Free Isn't Free," which isn't wrong but doesn't appear anywhere in my preprint, so I regenerated the video with the title I preferred.

Notes on Video Overviews

- Length. Video Overviews appear to be capped at around 7–8 minutes regardless of prompting, so for dense papers, specify which sections to emphasise or they may be glossed over. Audio Overviews are different: you can adjust the length of the discussion, and I have seen some run as long as 45–50 minutes.

- "Video" is generous. It's essentially a templated slide-style video with narration. So far I've not been able to make it include figures, images or tables from the source.

- Limits. On the free tier there are daily caps on Audio and Video Overviews, so think carefully before you generate.

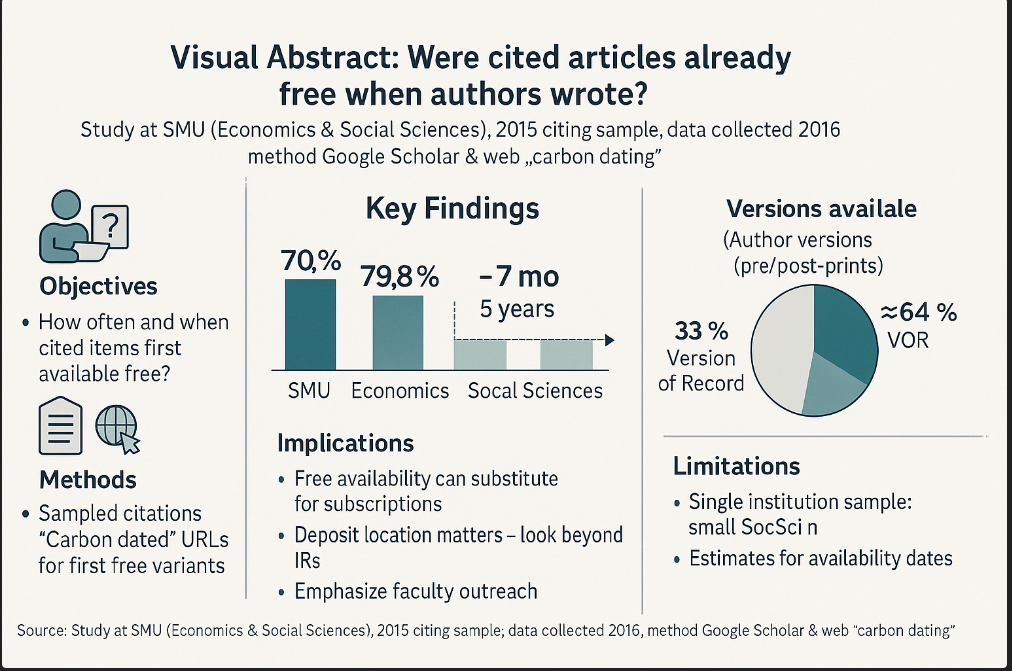

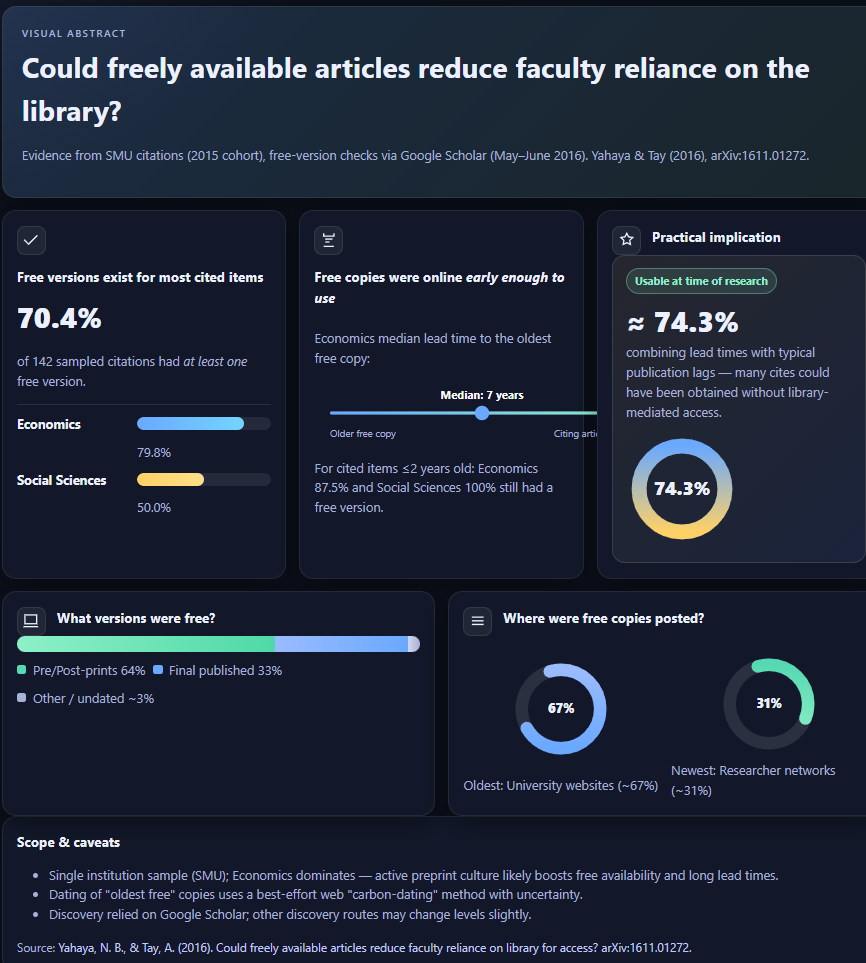

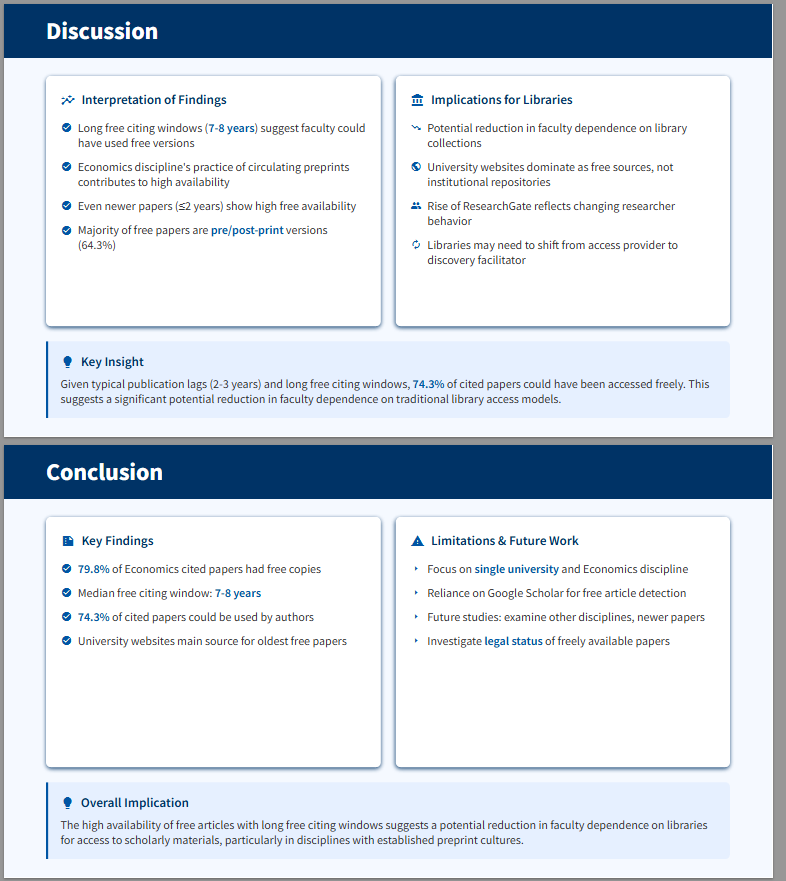

2. Creating Visual Abstracts from Your Paper

Once your paper is written, you'll often need a poster or a graphical/visual abstract to promote the work.

At SMU Libraries, we've long offered (free) support to faculty who want to generate visual abstracts (conditions apply). Can AI help you do this yourself with just a prompt? The answer is: partly.

2.1 Use a state-of-the-art LLM such as ChatGPT to generate a visual abstract as an image or HTML

In my example, I fed my paper to OpenAI's GPT-5 Thinking. I get better results with a two-step prompt: first ask the model to analyse the paper and extract the main concepts; then either:

- Ask ChatGPT to generate the visual abstract from the main findings as an image (turn on image generation and ask ChatGPT to produce a visual abstract)

or less obviously

- Ask ChatGPT to generate a visual abstract in HTML from the main findings.

You can see the results here.
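The two-step approach can be sketched as a pair of prompts. This is a minimal illustration rather than the exact wording used for the examples in this guide: the prompt text and the names `ANALYSIS_PROMPT` and `build_abstract_prompt` are assumptions made for this sketch, and nothing here calls ChatGPT; you would paste the resulting text into the chat yourself.

```python
# Step 1: ask the model to analyse the attached paper.
# The wording is illustrative, not the prompt used in this guide.
ANALYSIS_PROMPT = (
    "Read the attached working paper. List the research question, data, "
    "method, and three to five main findings, quoting figures exactly as "
    "they appear in the paper."
)


def build_abstract_prompt(findings: str, output: str = "html") -> str:
    """Step 2: turn the reviewed findings into a visual-abstract request.

    `findings` is the model's step-1 answer after you have checked it.
    `output` is "html" or "image" (hypothetical switch for this sketch).
    """
    if output not in {"html", "image"}:
        raise ValueError("output must be 'html' or 'image'")
    if output == "html":
        ask = ("Use the main findings to generate a visual abstract as a "
               "single, self-contained HTML page.")
    else:
        ask = "Use the main findings to generate a visual abstract as an image."
    return f"{ask}\n\nMain findings:\n{findings.strip()}"


if __name__ == "__main__":
    print(ANALYSIS_PROMPT)
    print()
    # Placeholder text standing in for the checked step-1 answer.
    print(build_abstract_prompt("1. <finding one>\n2. <finding two>"))
```

Keeping the steps separate gives you a chance to correct the extracted findings before any design work starts, which is usually cheaper than fixing errors in a finished graphic.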

The image-generation route can be hit-or-miss and may require several attempts.

By contrast, asking ChatGPT to "use the main findings to generate a visual abstract in HTML" often produces a surprisingly decent result, noticeably better than the image-generation route. It is also easy to refine, because this approach draws on the coding capabilities of LLMs, which are strong in current models.

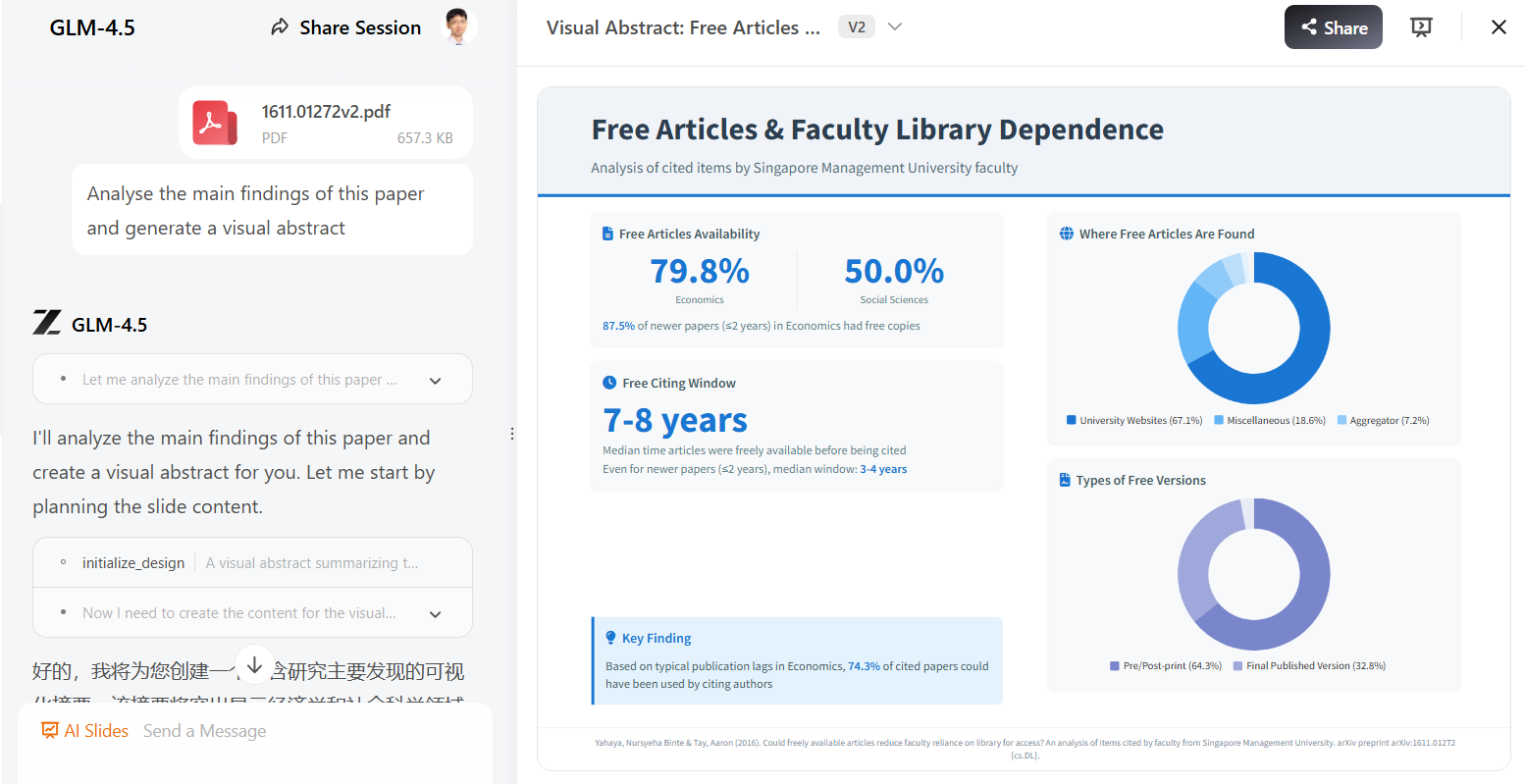
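To give a sense of why the HTML route is easy to refine, here is a minimal sketch of the kind of one-panel page involved, built in Python. It is not the output ChatGPT produced for my preprint: the function name `render_visual_abstract`, the layout, and the placeholder text are all assumptions made for illustration.

```python
from html import escape


def render_visual_abstract(title, findings, citation):
    """Return a self-contained one-panel HTML page.

    `findings` is a list of (heading, text) pairs. All text is escaped,
    so characters such as & and < are safe to include.
    """
    cards = "\n".join(
        f'    <div class="card"><h2>{escape(head)}</h2><p>{escape(body)}</p></div>'
        for head, body in findings
    )
    return f"""<!DOCTYPE html>
<html lang="en">
<head>
<meta charset="utf-8">
<title>{escape(title)}</title>
<style>
  body {{ font-family: sans-serif; margin: 2rem; }}
  .grid {{ display: flex; gap: 1rem; }}
  .card {{ flex: 1; padding: 1rem; border: 1px solid #ccc; border-radius: 8px; }}
  footer {{ margin-top: 1rem; font-size: 0.8rem; color: #555; }}
</style>
</head>
<body>
  <h1>{escape(title)}</h1>
  <div class="grid">
{cards}
  </div>
  <footer>{escape(citation)}</footer>
</body>
</html>
"""


if __name__ == "__main__":
    # Placeholder content only; replace with text checked against your paper.
    page = render_visual_abstract(
        "Paper title (placeholder)",
        [("Question", "<research question>"), ("Finding", "<main finding>")],
        "Author (year). Working paper.",
    )
    print(page)
```

Saving the printed output as an `.html` file and opening it in a browser shows the panel. Because every heading, card, and colour is plain text, a wrong number or an awkward label can be fixed by editing one line rather than regenerating an image.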

2.2 Use Z.ai "AI slide" feature to create a single HTML slide/visual abstract

A second and possibly better option is to use Z.ai. As you will see, compared to using ChatGPT to generate output, this makes it easier to edit the result.

Open-weights large language models from China are currently strong contenders; three of the best at the time of writing are:

All three offer modern chat features (deep-research modes, code writing, etc.), but Z.ai's "AI Slide" mode stood out.

I attached my preprint, turned on Magic Slide, and provided a suitable prompt. It generated a one-slide visual abstract in HTML.

If you're not happy with the first pass, iterate. In my example, I asked it to add a citation at the bottom of the slide.

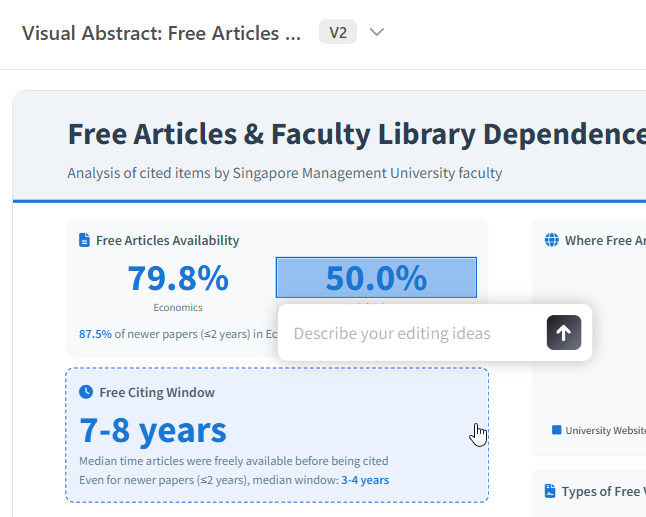

As with any AI-generated content, you need to guard against hallucinations, so please check everything. If you spot an error, you can:

- Click Edit to overwrite text directly, or

- Switch to HTML mode to make more powerful edits to the whole slide/visual abstract.

The ease of post-generation editing is why I favour this method over the other methods already mentioned.

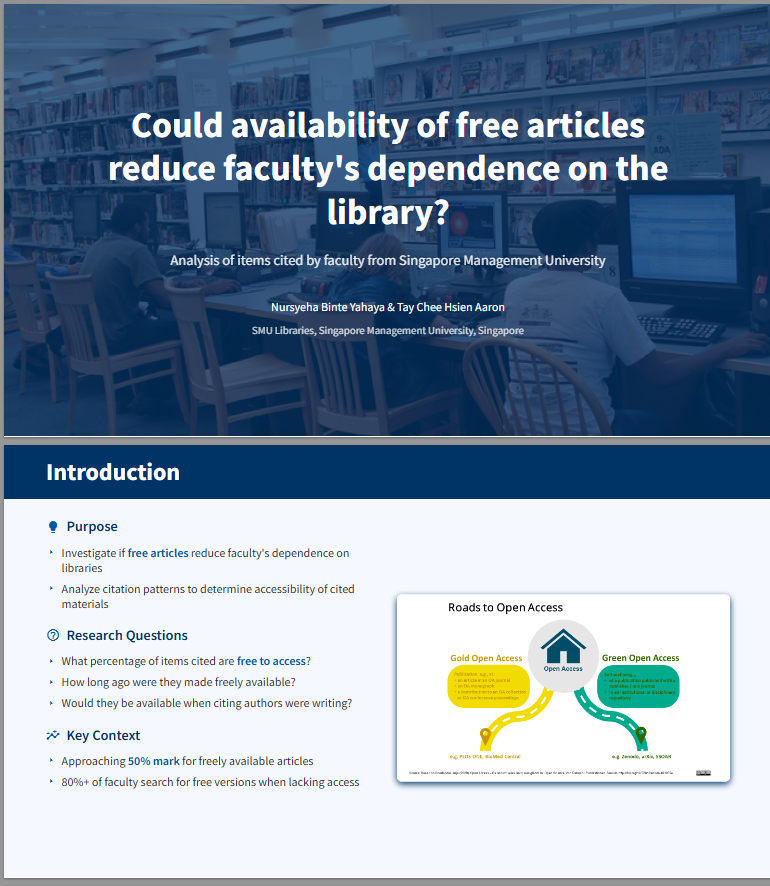
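Checking everything still means reading the slide against the paper, but a cheap first pass can flag the numbers most worth a second look. The sketch below is one possible approach, not a feature of Z.ai: the function name `unsupported_numbers` and the sample sentences are invented for illustration, and the figures in them are not from my preprint.

```python
import re

# Matches integers, decimals, and thousands-separated numbers,
# with an optional trailing percent sign.
NUMBER = re.compile(r"\d+(?:[.,]\d+)*%?")


def unsupported_numbers(generated: str, source: str) -> list[str]:
    """Return numbers found in `generated` that never appear in `source`.

    Both arguments are plain text: paste the visible slide text and the
    text of your paper, not raw HTML or a PDF file.
    """
    source_numbers = set(NUMBER.findall(source))
    seen, missing = set(), []
    for num in NUMBER.findall(generated):
        if num not in source_numbers and num not in seen:
            seen.add(num)
            missing.append(num)
    return missing


if __name__ == "__main__":
    # Invented example text, used only to show the behaviour.
    paper = "We surveyed 120 libraries; 45% reported savings."
    slide = "Survey of 120 libraries: 54% reported savings in 2016."
    print(unsupported_numbers(slide, paper))  # ['54%', '2016']
```

A flagged number is not necessarily wrong (the year above may be perfectly accurate), and a number that passes may still be attached to the wrong claim. Formatting differences such as "45%" versus "45 percent" will also be flagged, so treat the list as a prompt for manual checking rather than a verdict.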

3. Creating Slide Decks from Your Paper

3.1 Z.ai (AI Slide mode)

Since Z.ai can produce a visual abstract as a single HTML slide, it is no surprise that it can also generate an entire deck (up to 10 slides) from a PDF.

Samples: First two slides (PDF) and Last two slides (PDF)

Similarly, you can edit the generated slides by:

- Providing iterative instructions,

- Clicking Edit to overwrite text, or

- Editing the HTML directly.

Once you have finalised the slides, you can present from the website or export to PDF. As of GLM-4.6, it can also export to PPT.

The major drawback of this method is that the 10-slide limit may be restrictive for detailed decks. The next method allows generation of up to 40 slides.

3.2 Microsoft 365 Copilot (PowerPoint) to generate native PPT slides

Z.ai originally exported to PDF only, which made converting to .pptx possible but clunky. Note that as of GLM-4.6, you can export to .pptx.

If you want to generate a native PowerPoint file (.pptx) that you can edit directly, use Microsoft 365 Copilot in PowerPoint (not the free "Microsoft Copilot").

By default, SMU staff (including Admin, Faculty, and Research staff) do not have access to Microsoft 365 Copilot. At the time of writing, you'll need to submit a request to enable it.

Once enabled, in PowerPoint choose Create a new presentation with a file.

You'll then search for and select the file that the presentation will be based on. There's currently no way to upload a local PDF at this step, so make sure your PDF is on SharePoint or OneDrive first.

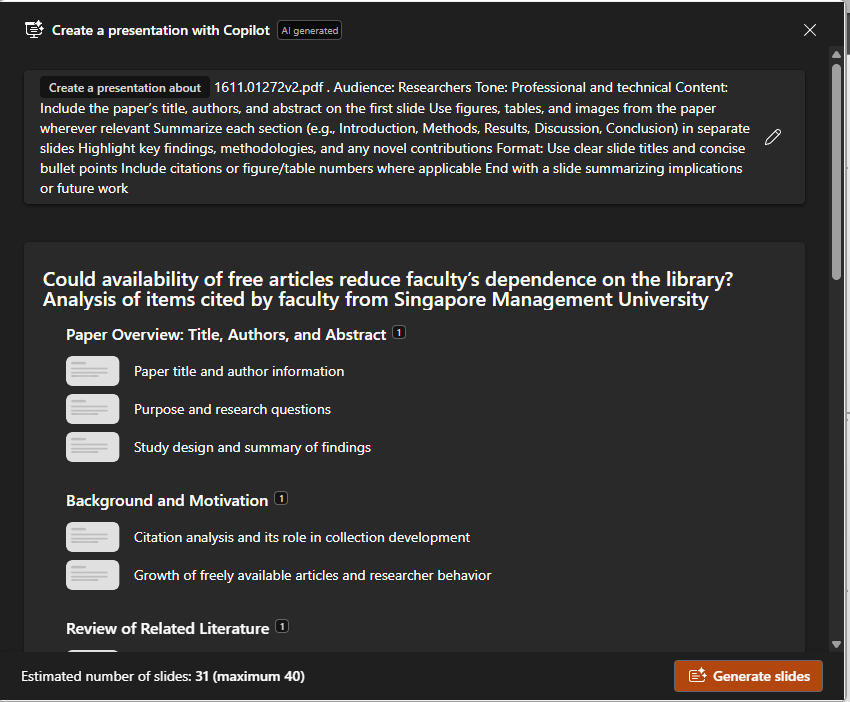

Any reasonable prompt should work; I used Microsoft 365 Copilot's Prompt Buddy to draft one.

Unlike the other tools mentioned, this method can use figures and images from your PDF. It helps to prompt explicitly for figure use where appropriate if this is what you desire.

Copilot generates slides in two steps. First, it produces an outline for your deck, in which you can edit, add, or remove sections. When you are ready, click Generate slides.

Here's a sample of the slides that might be generated. From there, you can keep or tweak the slides. You'll still need to edit text and sometimes swap images, but it's a time-saver with structure and key points already laid out.

An alternative to Microsoft 365 Copilot is Kim.ai's Kim Slide feature, which lets you edit the slides and save them in ppt format. (See sample)

Conclusion

AI won't make your paper speak for itself, but it can dramatically shorten the path from dense PDF to clear, engaging story. The tools and methods above are new, and their features, limits, and output quality are still evolving, so treat results as drafts: check your rights to use the content, check the accuracy of the output, and iterate. Used judiciously, audio/video overviews, HTML visual abstracts, and AI-assisted slide builders turn solid research into formats that travel further without diluting substance.