By Heng Su Li, Co-founder of SMU Researcher Club, Research Associate (Cooling Singapore 2.0, WP-B)

On 2 April 2024, the SMU Researcher Club rolled out the first session of its Publication Series. The series convenes experts and fellow research colleagues to share their tips and insights on topics relating to the process of academic publication.

The first session, titled ‘Evaluating Journals and Conference Proceedings! What makes a good journal and proceeding to reference and/or submit to?’, featured perspectives from both SMU Libraries and SMU researchers. The speakers shared different ways of evaluating the venues (journals, conferences, proceedings, preprints) that they cite and publish in. This in-person session drew 37 people.

This session comprised a sharing on bibliometrics by Yeo Pin Pin (Head, Research Services, SMU Libraries), a panel discussion featuring Dr Jude Kurniawan (Postdoctoral Fellow, Sustainability and Governance, SOSS), Yang Zhou (Research Engineer, SCIS), Pin Pin and Su Li (Moderator), as well as a Q&A segment with the crowd. If you're interested in the session’s takeaways, keep reading!

SMU Libraries’ Perspectives: Bibliometrics for journal evaluation

Bibliometrics refers broadly to the quantitative evaluation of scientific articles and other published works, including their authors, the journals that publish them, and the number of times they are later cited. The word bibliometrics was coined by Pritchard (1969), who described its purpose as follows 1:

To shed light on the process of written communication and of the nature and course of a discipline (in so far as this is displayed through written communication), by means of counting and analyzing the various facets of written communication.

Historically, bibliometric methods have been used to trace relationships among academic journal citations. Indices leveraging bibliometric methods first emerged in 1955, with the establishment of the Science Citation Index by Eugene Garfield. He later introduced the Journal Impact Factor (JIF) and Journal Citation Reports (JCR) in 1975 2.

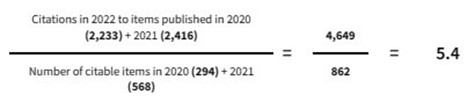

The concept undergirding JIF is straightforward: JIF is the ratio of citations to citable items. For JIF, only articles and reviews count as citable items, while editorials and letters do not. Other indices may define citable items differently. The standard JIF spans three years: it takes citations in the current year to items published in the two preceding years. However, in response to feedback that concepts in disciplines such as the social sciences take longer to gain traction, so that papers are cited much later, a 5-year JIF is also available.
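As a rough illustration of the ratio described above, here is a minimal Python sketch. The function name `impact_factor` and the citation figures are hypothetical, invented purely for illustration; they do not come from JCR.

```python
def impact_factor(citations: int, citable_items: int) -> float:
    """Ratio of citations to citable items.

    The same shape applies to any window; only the years counted change.
    """
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations / citable_items


# Hypothetical journal: these figures are made up for illustration only.
cites_2022_to_2020_2021 = 450  # citations received in 2022 by items from 2020 and 2021
citable_2020_2021 = 150        # articles and reviews published in 2020 and 2021

jif_2022 = impact_factor(cites_2022_to_2020_2021, citable_2020_2021)
print(f"Illustrative 2022 JIF: {jif_2022:.2f}")
```

Editorials and letters would be left out of `citable_2020_2021`, since the denominator covers only articles and reviews.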

JCR has other features such as sorting journals by JIF and dividing the journals in its database into quartiles, thereby allowing researchers to identify and aim for journals that are ‘ranked’ higher.
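To make the quartile idea concrete, the sketch below ranks a handful of journals by JIF and splits the ranking into four equal bands. It is only an approximation under stated assumptions: the journal names and JIF values are hypothetical, and the handling of ties and subject categories in JCR itself is not covered here.

```python
import math


def assign_quartiles(jif_by_journal: dict[str, float]) -> dict[str, str]:
    """Rank journals by JIF (highest first) and label each with Q1 to Q4."""
    ranked = sorted(jif_by_journal, key=jif_by_journal.get, reverse=True)
    total = len(ranked)
    quartiles = {}
    for rank, journal in enumerate(ranked, start=1):
        # The top 25% of ranks fall in Q1, the next 25% in Q2, and so on.
        quartiles[journal] = f"Q{math.ceil(4 * rank / total)}"
    return quartiles


# Hypothetical journals within one subject category.
example = {
    "Journal A": 9.1, "Journal B": 7.4, "Journal C": 5.0, "Journal D": 4.2,
    "Journal E": 3.3, "Journal F": 2.8, "Journal G": 1.5, "Journal H": 0.9,
}
for name, quartile in assign_quartiles(example).items():
    print(name, quartile)
```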

As the oldest index, the JIF is heavily utilised by both journals and researchers when determining the impact/influence of their works. Nonetheless, newer indices such as Scimago Journal Rank (SJR) (2007) and CiteScore (2016; revised methodology in 2020) have emerged and gained significant traction, as they offer advantages that differ slightly from those of JIF. Notably, JIF uses data from Web of Science while SJR and CiteScore use data from Scopus 3.

SJR takes into account the number of citable items and their citations over a three-year calendar period (one year’s worth of data more than JIF) and is calculated through an iterative process that computes the transfer of prestige from one journal to another, similar to the Google PageRank algorithm. In SJR, each citation is weighted by the prestige of the journal it comes from, whereas in JIF every citation counts as one regardless of which journal it comes from. You may read more about the iterative process of calculating SJR here.
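The sketch below illustrates the general idea of prestige transfer with a simplified PageRank-style iteration. It is not the actual SJR formula, which has further terms and normalisations; the function name `prestige_scores`, the damping value of 0.85 (the conventional PageRank choice) and the four journals are all assumptions made for illustration.

```python
def prestige_scores(citations, damping=0.85, iterations=100):
    """Simplified PageRank-style prestige transfer between journals.

    citations[a][b] is the number of citations from journal a to journal b.
    Every cited journal must also appear as a key of `citations`.
    """
    journals = sorted(citations)
    n = len(journals)
    score = {j: 1.0 / n for j in journals}
    for _ in range(iterations):
        # Every journal starts each round with a small baseline share.
        updated = {j: (1 - damping) / n for j in journals}
        for source in journals:
            outgoing = citations[source]
            total = sum(outgoing.values())
            if total == 0:
                # A journal that cites nothing spreads its prestige evenly.
                for j in journals:
                    updated[j] += damping * score[source] / n
            else:
                # Prestige is passed on in proportion to citations given.
                for target, count in outgoing.items():
                    updated[target] += damping * score[source] * count / total
        score = updated
    return score


# Hypothetical network: C and D each receive 10 citations, but C's come
# from the heavily cited journal A while D's come from the uncited journal B.
network = {
    "A": {"C": 10},
    "B": {"D": 10},
    "C": {"A": 20},
    "D": {"A": 10},
}
scores = prestige_scores(network)
for journal in sorted(scores, key=scores.get, reverse=True):
    print(journal, round(scores[journal], 3))
```

Under an unweighted count such as JIF, C and D would look identical on these citations; in the weighted iteration, C ends up ahead because its citations come from a more prestigious source.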

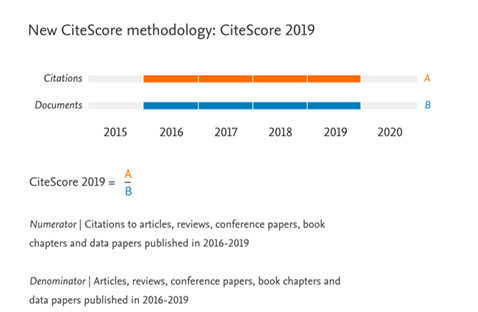

CiteScore counts the citations received over four years (e.g. 2019-2022) by items published in that window and divides this by the number of citable items published in the same window. Citable items include articles, reviews, conference papers, book chapters and data papers, which differs from JIF. Similar to JIF, citations are not weighted.
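Written out for the 2019-2022 example, the calculation described above takes roughly this form (a restatement of the description in this post, not Elsevier's official notation):

$$
\text{CiteScore}_{2022} = \frac{\text{citations received in 2019--2022 by items published in 2019--2022}}{\text{number of citable items published in 2019--2022}}
$$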

Journals have been observed to publicise their JIF, SJR or CiteScore performance on their websites.

Besides using the indices to compare the performance of journals within similar fields, researchers can leverage the indices when exploring new areas. For researchers working on interdisciplinary projects or exploring new methods or perspectives, the usual go-to journals for submission may not be appropriate. In these situations, researchers can use the indices to identify appropriate journals to aim for when publishing.

Considerations when evaluating conferences

Pin Pin also shared various questions a researcher can use as guidance when deciding which conferences to apply for. Briefly, they are:

- Scope of conference: Do the topics in the conference interest you, and are they aligned to your work?

- Organiser: Is the event organiser an accredited entity, for example one affiliated with a larger academic society, an institution of higher learning or a journal?

  - For instance, the International Conference of Biometeorology is organised by the International Society of Biometeorology (an academic society), and in 2023 it was hosted by Arizona State University, US (an institution of higher learning).

- Keynote speakers: Are the keynote speakers familiar names/faces in your field? If you know a keynote speaker but are unfamiliar with the rest, the organiser or the conference (first edition), you may want to investigate further.

- Editorial committee: Are the names on the editorial committee familiar to you and in the discipline?

- Rejection rates: How high are the rejection rates? You may not want to attend a conference where the bar is set too low.

In the field of computer science, several resources rank conferences. They are the CORE Conference Portal, CSRankings and the CCF Catalogue of Conferences.

Panelists’ perspectives: Disciplinary knowledge for journal/conference/proceeding evaluation

Our panelists – Jude, Yang Zhou and Pin Pin – also shared their valuable insights on considerations they account for when deciding which journals to publish in and conferences to attend. The considerations are wide-ranging, and they cover academic rigor and alignment, career prospects, time and cost, as well as fun! Our panelists also shared the red flags that signal dubious journals and conferences.

The choice of journals and conferences largely comes down to the academic rigor of the research work and its alignment with the requirements of prestigious journals and conferences. In Jude’s experience and observations in his field, publishing one article in one of the most prestigious journals, such as Nature, Science and Cell, catapults a researcher ahead in a university research career compared with a counterpart who has several articles in lower-tier but still good journals. This is because prestigious journals tend to have higher rejection rates and more rigorous review processes, which in turn makes their articles more highly cited and counts towards a university’s ranking.

For those who require guidance on which journals to publish in, Jude also recommends using Journal Finder provided by publishers like Elsevier and Wiley. Pin Pin added that Clarivate has a Master Journal List with this functionality.

For Yang Zhou, while top-tier conferences are harder to get into than second or even lower-tier ones, they open doors to better opportunities once your paper is accepted. Some conferences are also designed such that a researcher’s work will be published in the conference proceedings, which counts towards their publication record. In computer science, where ideas and research move very quickly, publishing or presenting at conferences allows researchers to put their names to a novel idea much faster than they could through traditional journal articles. First-mover advantage, in this case who is first recognised for a novel idea, is a tricky issue. The same idea can surface in several places at around the same time, so formalising it through a proceeding or clinching an award for it at a more accessible, timely second-tier conference may be better than launching it at a first-tier conference that takes longer to accept papers.

Works are also increasingly published on pre-print platforms 4 and exchange platforms like GitHub. Pin Pin cautioned that some journals do not accept works that have previously been made accessible as pre-prints, so researchers should be aware of the requirements of the journals they are aiming for. She noted that pre-prints are widely accepted in some disciplines, e.g. arXiv for computer science and SSRN for social sciences. She also reminded authors to retain the author-final and original manuscript versions of their papers, as the copyright for these generally belongs to them, while the published version is generally under the publisher’s copyright. A few publishers will not allow any version of the paper to be made available on any platform except their own.

While proceedings may not be a common publishing venue for certain disciplines, Jude highlighted that researchers should not discount it entirely. For ideas that fall on the intersection of various disciplines, some journals may not see the research value or see a fit to their journal scope. Conference proceedings can be a good venue to get such work published.

The stage of a researcher’s career also has an important influence on the journals they aim for. For younger researchers, such as those doing their PhDs or in their first postdoctoral role, the journals they aim for may be influenced by legacy/culture. In Jude’s case, he set a goal during his PhD to publish an article in Geography Compass, a journal known in his discipline as the ‘rite-of-passage’ and first-timer journal. The journal commands respect within human geography, and articles are required to explain concepts in simple and clear language, targeting a wide audience that includes students and policymakers.

As researchers climb their career ladders in the university, they can aim for mid and higher-tier journals, to build their track record. Pin Pin noted that universities or departments may have identified specific journals their faculty and researchers should aim to publish in. Researchers will have to do their due diligence on understanding their discipline and the university/department’s requirements on where they publish their research.

As Assistant Managing Editor and Editorial member for the International Journal for Smart and Sustainable Cities (JSSC), Jude shared that he invites well-established professors in the field to submit to this fledgling journal, which has yet to receive its impact factor 5. Well-established professors have a sufficient track record to explore newer journals that they deem reliable.

Some universities have boycotted or blacklisted certain journals or publishers. Recently, MDPI has been boycotted amongst researchers based in Malaysia and Canada due to national legislation. We caveat that universities account for many more factors when reviewing a researcher’s performance; which journals you publish in is just one facet.

Cost is a practical consideration when deciding which journals to publish gold open access in, as the charges can range from 2,000 SGD to 14,000 SGD 6. Yang Zhou relied heavily on SMU Libraries’ agreement with ACM, under which he could publish open access without charges. Jude aims only for cost-free journals. Journals that command higher fees may have the resources to incentivise their reviewers and thus expedite the publishing process, but the flip side is that the reviewing process may be perceived as rushed. For interdisciplinary or large-scale collaborative projects, the splitting of costs amongst PIs/authors should also be discussed beforehand during the budgeting process. For selected publishers with which SMU Libraries has an agreement, the Libraries pays the full fee if the corresponding author is based at SMU.

Researchers can also treat conferences as opportunities to explore new places and have fun. As the research project budget is limited, researchers should be cautious about where they want to travel to for conferences. Always check with your supervisors, project managers and department on the rules and regulations when it comes to traveling overseas for conferences and extending your trip.

Red flags

Unfortunately, scams happen in the research world. You may get email invites to publish in certain journals or to participate in conferences. The deals and discounts may be highly attractive, but researchers need to verify the content before paying any registration or publication fees.

According to Yang Zhou, a straightforward way of filtering out dubious invites is to ignore those sent to the spam folder in SMU Outlook. A clear red flag is when the organising/editorial committee and keynote speakers are unfamiliar; new legitimate conferences (e.g., first iterations or one-off events) would still be fronted by relatively established researchers.

Bibliometric tools are great ways to complement your disciplinary knowledge or to give you an indication of a journal’s performance if you are relatively new to a field. It is important to cross-check the results and seek advice from more experienced peers and your advisor/PIs.

Deciding which journal/proceeding to publish in and which conference to attend requires a balance of academic rigor, career strategy, first-mover advantage, time and cost, as well as fun! If you are unsure, seek guidance from peers and PIs.

| | JIF | SJR | CiteScore |
|---|---|---|---|
| Access | Paid, with access via SMU subscription | Free | Free |
| Provider | Clarivate Analytics | Scimago | Elsevier |
| Database used to obtain citations | Web of Science | Scopus | Scopus |
| Method | 2022 Journal Impact Factor = cites in 2022 to items published in 2020 and 2021 / number of citable items published in 2020 and 2021. 3-year period, 2 years’ worth of data. | Iterative process. 3 years’ worth of data. | 2022 CiteScore. 4-year period. |
| Citable items | Articles and reviews. Non-citable items: other document types, including editorial material, letters and meeting abstracts. | Articles, reviews, short surveys and conference papers | Articles, reviews, conference papers, book chapters and data papers |

We’d like to thank our speakers for their time and insights!

1 Pritchard, A. (1969). Statistical bibliography or bibliometrics. Journal of Documentation, 24, 348-349.

2 Garfield, E. (1975). Journal Citation Reports: Introduction. Philadelphia: Institute for Scientific Information. http://garfield.library.upenn.edu/papers/jcr1975introduction.pdf

3 Started in 2024.

4 Preprints are early-stage research papers that have not been peer-reviewed. Source: https://www.ssrn.com/index.cfm/en/the-lancet/#:~:text=These%20preprints%20are%20early%20stage,have%20not%20been%20peer%2Dreviewed.

5 Less than three years after its launch, the JSSC has yet to receive its impact factor.