By Bella Ratmelia, Senior Librarian, Research & Data Services

SMU Libraries are excited to announce that SMU now offers premium access to Undermind.ai, an AI-powered research assistant that scans through hundreds of academic papers to help you find exactly what you need, regardless of complexity.

Unlike conventional search tools, or even other "AI search" tools, Undermind.ai adopts an agent-like approach: it searches iteratively (including citation searching), much like a real researcher would. It also uses GPT-4 directly to evaluate the relevancy of candidate papers. This is computationally very costly, so each search takes about 3-5 minutes to complete.

In return, you get higher-quality results. Depending on your topic, it might find 30-80% of all relevant papers (benchmarked against a gold-standard set of papers identified by a systematic review or survey paper).
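A figure like this is commonly expressed as recall: the share of gold-standard papers that also appear in the search results. The sketch below is only an illustration of that arithmetic. It is not a description of how Undermind.ai computes its benchmark, and the paper identifiers are made up.

```python
def recall(found_ids, gold_ids):
    """Return the fraction of gold-standard papers present in the results."""
    gold = set(gold_ids)
    if not gold:
        raise ValueError("gold standard set must not be empty")
    return len(gold & set(found_ids)) / len(gold)


# Hypothetical identifiers, for illustration only.
gold_standard = {"paper-a", "paper-b", "paper-c", "paper-d", "paper-e"}
search_results = {"paper-a", "paper-c", "paper-d", "paper-x"}

score = recall(search_results, gold_standard)
print(f"Recall: {score:.0%}")  # prints "Recall: 60%"
```

Here three of the five gold-standard papers were found, so recall is 60%. Papers outside the gold standard, such as "paper-x", do not affect the figure.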

What are the key features?

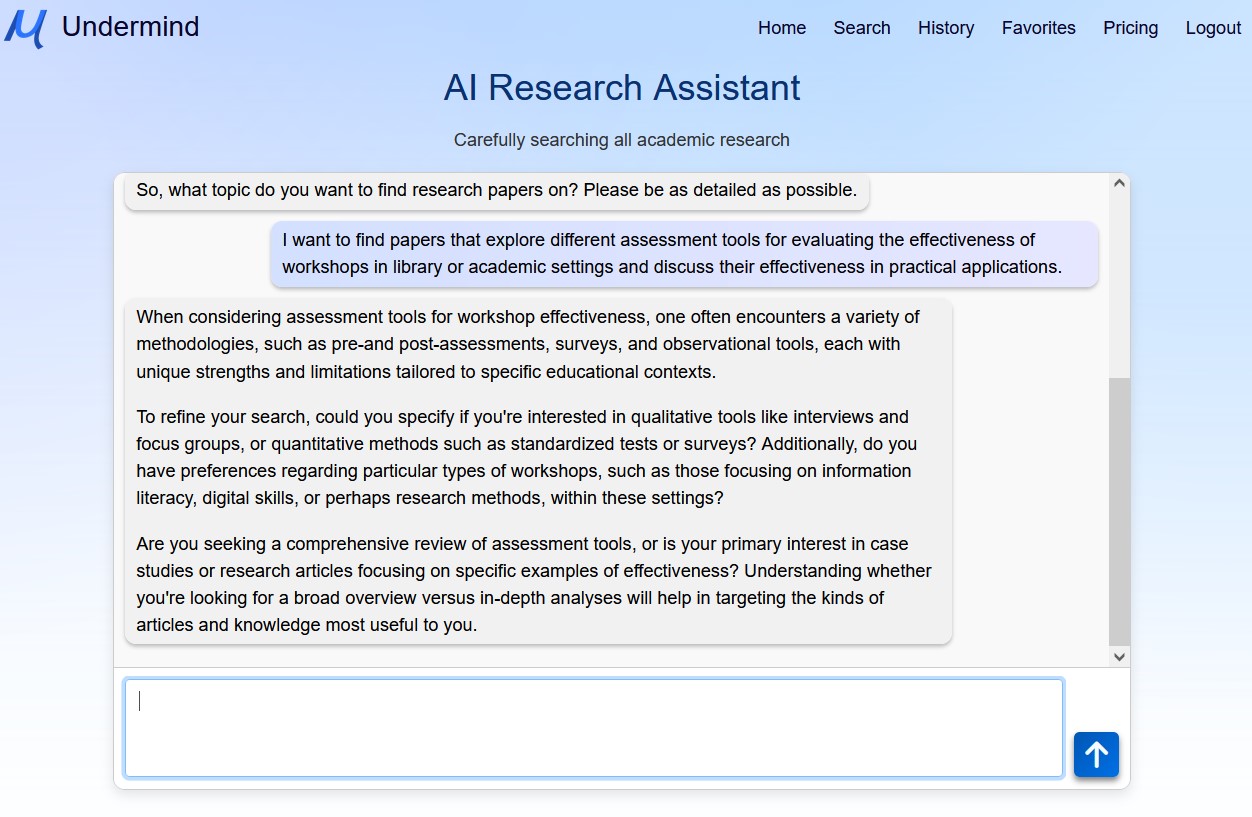

Undermind.ai offers a range of powerful features to streamline your research process. First, the intuitive chat-based search allows you to specify your queries in natural language. The chat will prompt you on the specifics of your search, giving you opportunities to define your scope more clearly. Once you've defined your query, Undermind.ai conducts a comprehensive "deep search" across titles, abstracts, and, when available, full texts within the vast scholarly database of Semantic Scholar.

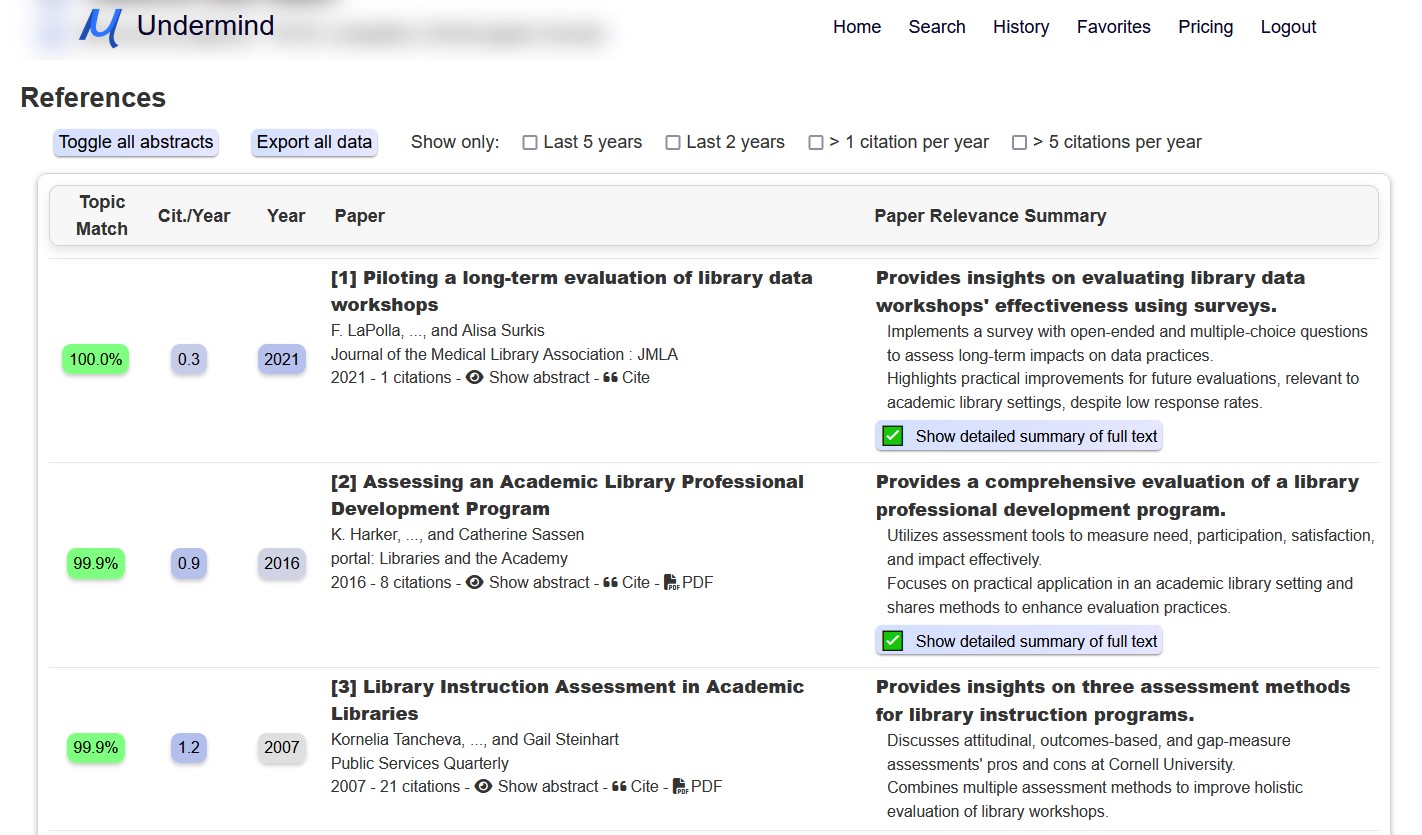

On the results page, under the "References" section, each paper includes an LLM-generated summary, along with useful details such as the citation count, publication year, and a percentage indicating topic match, allowing you to quickly assess the relevance of each paper.

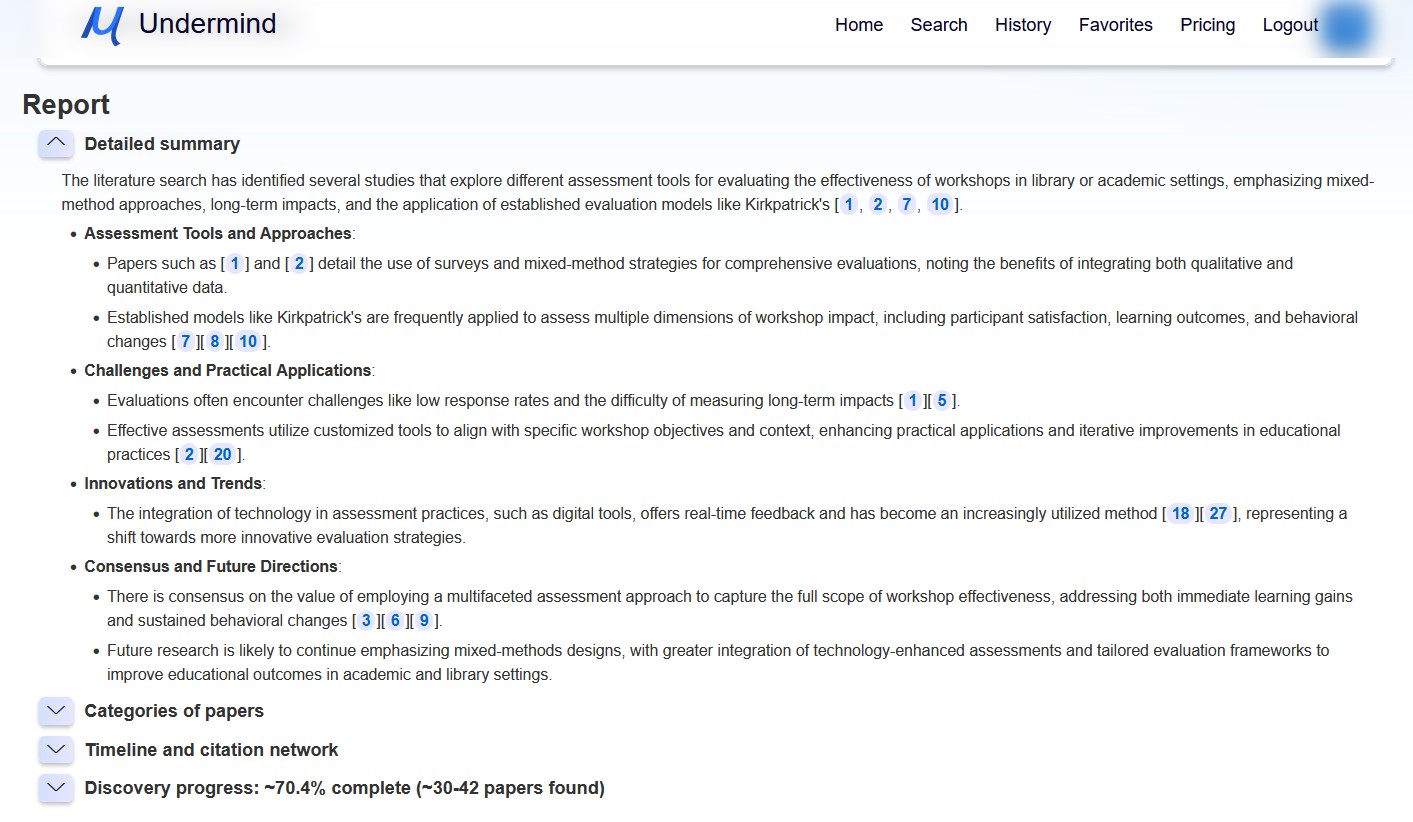

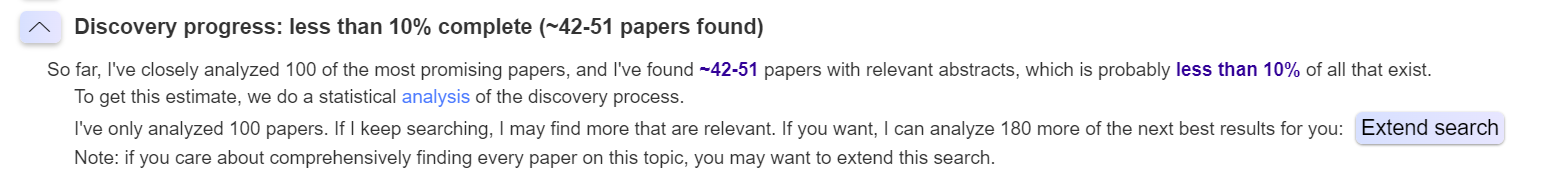

The "Report" section offers organized categories, a timeline, and a citation network to help you see connections across the literature. It also includes an estimated "discovery progress" score, indicating what proportion of the relevant papers in the corpus have likely been identified.

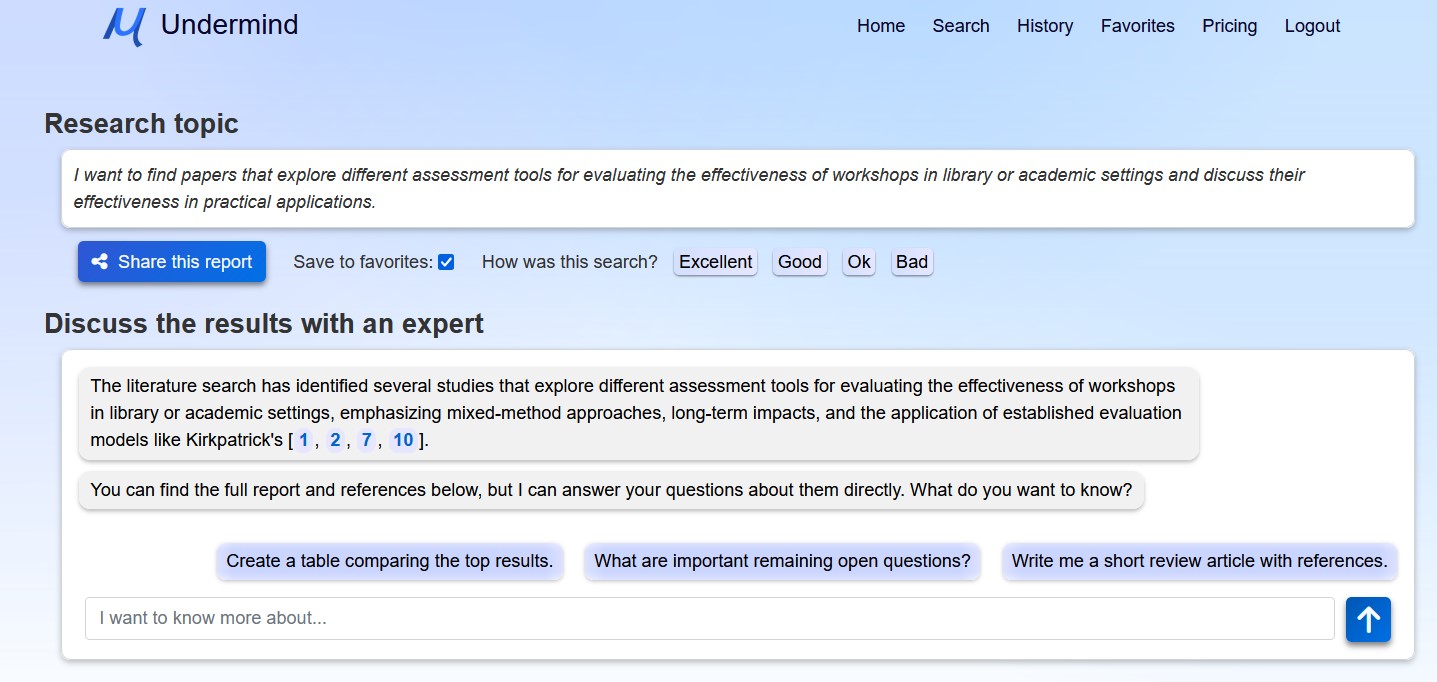

The "Discuss Results with an Expert" feature allows you to interact with the AI in a chat-like interface for enhanced insights. This feature can even help you generate a brief review article based on your search results.

While Undermind.ai can save time by acting as an initial screening tool, as with other AI-powered tools out there, it is essential to review the papers directly to confirm their relevance.

How to get access?

To get access, simply follow these steps:

- Visit https://www.undermind.ai/home/

- Log in or create an account with your SMU email address to start using Undermind.ai. If you use any other email, you will not get premium access.

- Please note that Undermind.ai access is available only to postgraduates, faculty, and staff.

If you'd like to find out more about the inner workings of Undermind.ai before trying it out, check out these resources:

- Watch this webinar by Undermind.ai co-founders Joshua Ramette and Thomas Hartke for the SMU community, in which they present tips and best practices for using Undermind.ai effectively.

- Read the review of Undermind in Katina Magazine, 'New AI Tool Shows the Power of Successive Search', by Aaron Tay, Head, Data Services, SMU Libraries.

Tips on using Undermind.ai

Undermind.ai is simple yet powerful, and it helpfully guides you on how to get the best out of it. Here are some additional tips and tricks.

1. Try not to ask for papers by author, journal title, publication type (e.g. review papers), or citation count, as the results may not be fully reliable

For example, queries like these do not work reliably:

"Find me papers from Journal Y on topic ..."

"Find me papers that are cited more than Z times on topic ..."

Undermind.ai does not yet apply hard filters on metadata such as publication year, author, journal, or citation count.

That said, when evaluating relevancy, the LLM (such as GPT-4) is given more than the title and abstract (and now the full text of open access papers). Metadata such as author, journal, and citation count (even which articles cite which) is also fed into its context to help it make its decision. Publication type (e.g. reviews) is not explicitly included.

As such, it may pick out and highly rank papers that match your criteria (e.g. if you ask for papers published after 2020, most of the top-ranked papers will meet that criterion). However, this is not guaranteed, as it was not designed explicitly to do such searches. In some cases it might work reasonably well, e.g. this search by author, or asking by publication year, but in other cases it might fail.
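Because these criteria are not enforced as hard filters, one option is to apply them yourself once you have the results. The sketch below assumes you have copied the results into simple records by hand. The `Paper` fields and the `hard_filter` helper are hypothetical names for illustration, not part of Undermind.ai or any export format it provides.

```python
from dataclasses import dataclass


@dataclass
class Paper:
    # Hypothetical record; fill these in from the results page yourself.
    title: str
    year: int
    citations: int


def hard_filter(papers, min_year=None, min_citations=None):
    """Keep only papers that satisfy every criterion that was supplied."""
    kept = []
    for paper in papers:
        if min_year is not None and paper.year < min_year:
            continue
        if min_citations is not None and paper.citations < min_citations:
            continue
        kept.append(paper)
    return kept


# Made-up example data.
papers = [
    Paper("Example paper A", 2019, 120),
    Paper("Example paper B", 2021, 15),
    Paper("Example paper C", 2023, 60),
]

# "Published after 2020" means a publication year of 2021 or later.
recent = hard_filter(papers, min_year=2021)
print([p.title for p in recent])
```

Filtering this way keeps the original ranking order, so the most relevant papers that meet your criteria stay at the top.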

2. Check the discovery progress section

Always check the discovery progress section, which shows Undermind's estimate of the percentage of relevant articles found so far. If this figure is low, say 10%, you may want to extend the search.

3. Break down your query into more specific queries

Undermind tells you to "tell me exactly what you want, like a colleague," and says it is good at finding very specific and complex ideas.

This is good advice. To supplement this, I would say you will often get much better results if you split your broad queries into smaller specific queries to run individually.

For example, I am currently looking for empirical papers that compare metadata completeness in OpenAlex vs other citation index sources like Scopus or Web of Science.

I made it even more specific by stating exactly which metadata fields I wanted to compare, and came up with this:

I want to find empirical papers comparing the completeness or accuracy of metadata in OpenAlex with other citation indexes or scholarly data sources, focusing on at least one aspect: availability or correctness of references, citations and their impact on citation searching, affiliations, or abstracts.

While such a search gives reasonable results, you can often get even better results by splitting the query to focus on a single aspect. For example, this query focuses only on the completeness of references/citations in OpenAlex:

I want to find empirical research papers comparing the metadata completeness and accuracy of OpenAlex with Scopus or Web of Science, specifically focusing on their effectiveness for citation searching.

For any questions or assistance, please reach out to us at library@smu.edu.sg

Happy researching!