By Yeo Pin Pin, Head, Research Services

One way to measure the research impact of your work is to look up your paper’s total citations in citation indexes like Google Scholar, Scopus or Web of Science. You can also use metrics like FWCI (Field-Weighted Citation Impact) to normalise by subject, year of publication and type of publication.
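As a rough illustration of what such normalisation does, FWCI is at heart a ratio: the citations a paper received divided by the average citations of comparable publications (same subject, year and type). The Python sketch below uses made-up numbers and a hypothetical `fwci` helper. It is not how any citation index computes the figure internally, and it leaves out details such as citation windows.

```python
def fwci(citations: int, expected_citations: float) -> float:
    """Ratio of citations received to the average for comparable publications.

    `expected_citations` is assumed to be the average citation count of
    publications with the same subject, year and type (hypothetical input).
    """
    if expected_citations <= 0:
        raise ValueError("expected_citations must be positive")
    return citations / expected_citations


# Hypothetical numbers, for illustration only.
paper_citations = 30
comparable_average = 12.0

score = fwci(paper_citations, comparable_average)
print(f"FWCI = {score:.2f}")
```

A value above 1 suggests the paper is cited more than comparable publications on average; a value below 1 suggests it is cited less.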

At the end of the day, though, these indicators probably measure just one aspect of impact: academic impact.

In “Disambiguating Impact”, Professor Anne-Wil Harzing classifies impact into different types by role.

These include:

- Academic impact

- Societal and industry impact

- Educational impact

Which tools and measures let you gauge each of these? The table below shows some examples.

| Target audience | Measure | Tool |
|---|---|---|
| Academic researchers | Total citations in traditional citation indexes | Google Scholar, Scopus, Web of Science |
| | Citation sentiment/context (e.g., “supporting cite”, “influential cite”, “cite for method”) | scite*, Semantic Scholar |
| Industry & practitioners in the field | Cites by patents | Lens.org |
| | Downloads | PlumX |
| Education | Cites from reading list/syllabus | Open Syllabus |
| Policy makers | Cites from policy papers | Overton* |
| General audience | Other altmetrics indicators like mentions in tweets, blogs, news | Altmetric.com, PlumX |

*Available only via SMU subscription

More nuanced academic impact

Total citations are nice, but citation volume does not tell you why a paper is cited. A cite could be merely perfunctory or, worse, one that critiques or falsifies the cited paper!

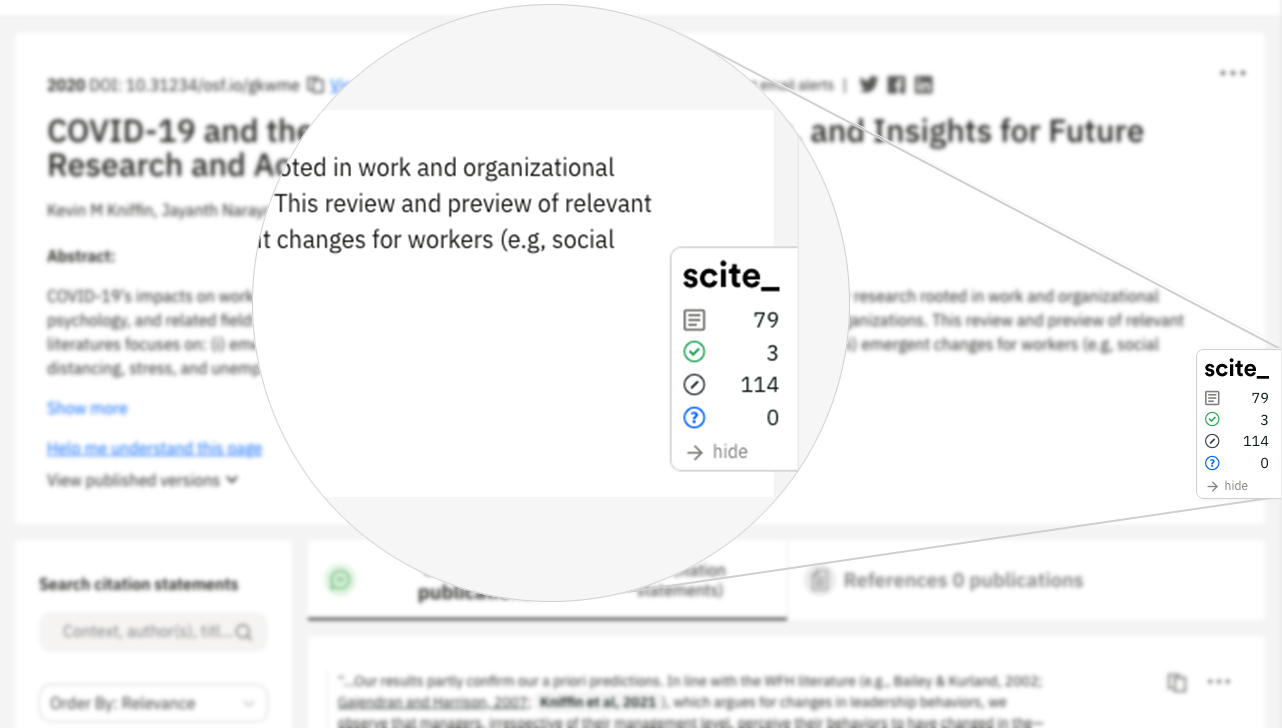

With tools like scite (subscribed by SMU), you can check the context of each citation and tell whether a cite is “supporting” (findings of the citing paper agree with the cited paper) or “contrasting” (findings of the citing paper disagree).

For example, “COVID-19 and the Workplace: Implications, Issues, and Insights for Future Research and Action”, a paper that includes Devasheesh Bhave and Nina Sirola as co-authors, had three supporting cites and 114 mentioning cites.

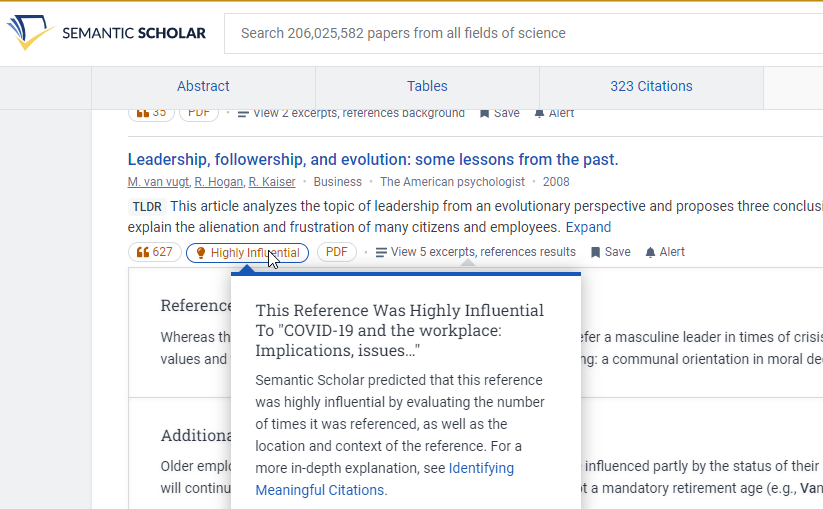

Similarly, with Semantic Scholar, you can identify which cites to your paper are “cited for methods”, showing how the method used in your paper might have influenced the methodology of other papers. Semantic Scholar can even identify influential cites.

In the example below (using the same cited paper), Semantic Scholar identifies a citing paper for which the cited paper was highly influential, because the citing paper cites it five times for a variety of reasons.

Societal and industry impact – policy cites, downloads and other altmetrics

Counting only academic citations from traditional citation indexes like Scopus or Web of Science, even with context, means you typically count citations only from peer-reviewed papers.

However, cites from other peer-reviewed papers are not the be-all and end-all, and likely measure academic impact only. What about other forms of impact, including impact on society and industry? This is where the idea of altmetrics comes into play.

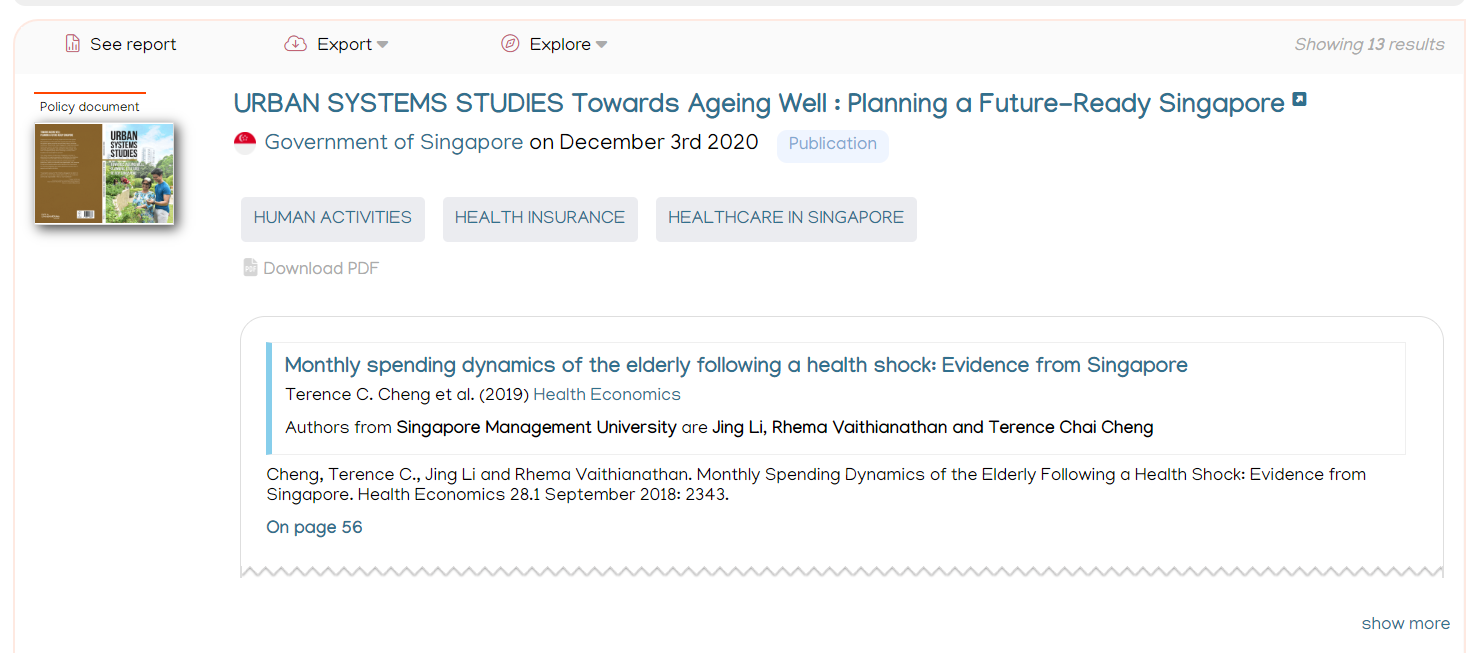

Imagine your work being mentioned or cited in a Singapore Parliament debate or in a WHO report on COVID-19. While such cites from policy papers and reports are usually not captured in traditional citation indexes (often not even Google Scholar), you can use Overton (subscribed by SMU) to find them and get a sense of the possible societal impact your research might be having.

For example, in Overton* we found that the paper “Monthly spending dynamics of the elderly following a health shock: Evidence from Singapore”, authored by T. Cheng, Jing Li and Rhema Vaithianathan, was cited in the policy document “Towards ageing well” by the Centre for Liveable Cities. A cite like this typically won’t be found in a traditional citation index.

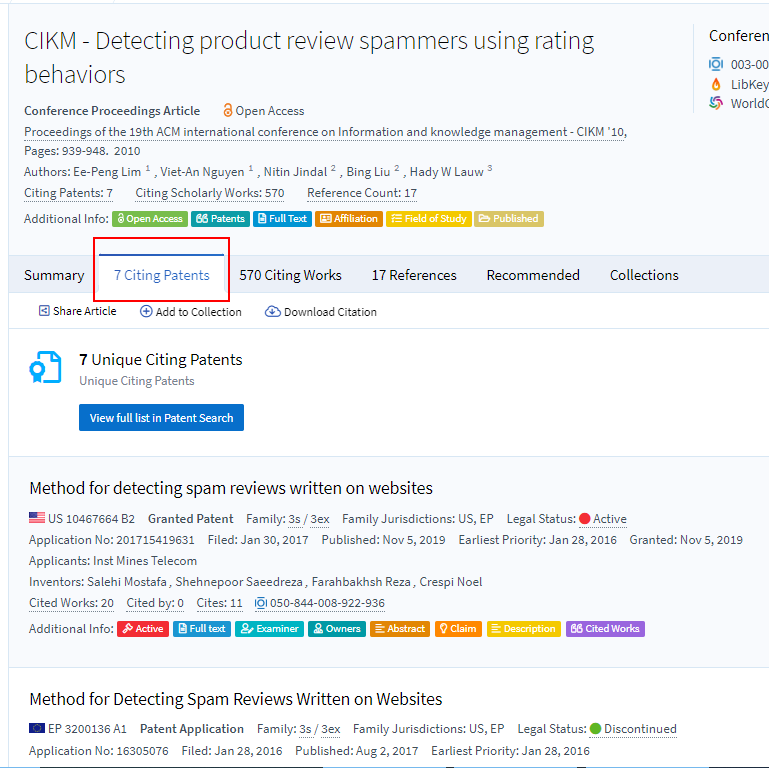

Another possible measure of impact on industry is cites from patents, which are obtainable from Lens.org.

For example, in Lens.org, we found that the CIKM conference paper “Detecting product review spammers using rating behaviors”, authored by Ee-Peng Lim, Viet-An Nguyen, Nitin Jindal, Bing Liu and Hady W Lauw, was cited by seven patents, including patents filed by organisations like Microsoft, IBM and Google, showing some impact on industry.

Other possible measures of impact that fall under the umbrella of altmetrics include:

- Mentions in social media such as Twitter

- Mentions in blogs

- Citations in Wikipedia

- Readership in Mendeley

There are a few ways to get altmetrics: via providers like Altmetric.com (a company, not to be confused with the concept of altmetrics), the free Altmetric bookmarklet, or the PlumX badges available on our institutional repository, INK.

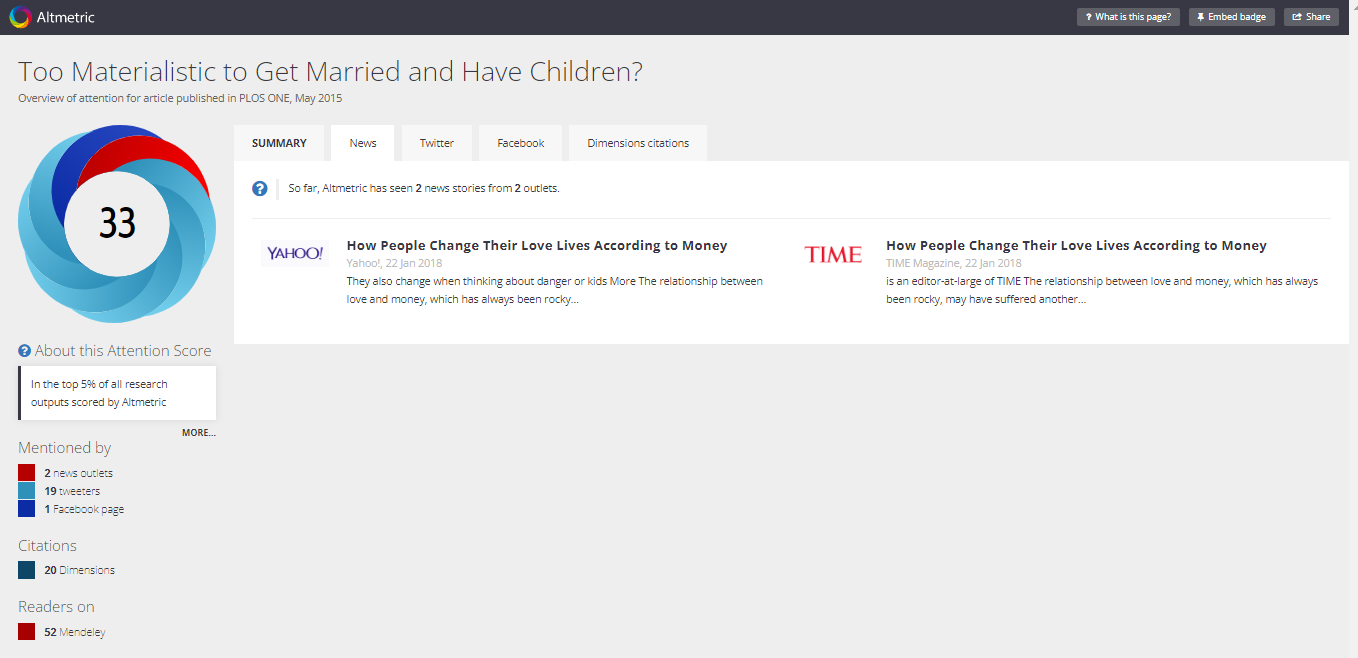

Using the free Altmetric bookmarklet, we can see that Norman Li’s paper “Too Materialistic to Get Married and Have Children?” is mentioned by 19 tweeters and one Facebook page, and has 52 Mendeley readers (that is, it appears in 52 Mendeley reference libraries). It is even covered by two news outlets, Yahoo and Time Magazine, showing that the findings were interesting enough to be covered or briefly mentioned in mainstream media.

That's quite a lot of attention, which is why this paper has an Altmetric score of 33 and is in the top 5% of all research outputs scored by Altmetric.com.

In fact, your paper does not need to be cited to have an impact. An insightful paper on education pedagogy that is downloaded heavily and read by practitioners in the field might be a sign of impact even though it may not be cited much.

For the same paper, PlumX, an alternative altmetrics provider, shows even more data, including download data from popular platforms like EBSCO, PubMed Central and institutional repositories. Overall, studies on these altmetrics have shown that all of the above except readership in Mendeley are generally uncorrelated with traditional academic citations, and hence likely measure different dimensions of impact from traditional citations.

Educational impact

As a scholar and researcher, you may also wonder if your work has an impact in the classroom. Are your works being assigned as course reading, whether they be textbooks, books, book chapters or even the articles you have written?

This is somewhat more difficult to measure as covering all reading lists is hard to achieve. An abnormally high download rate could be an indicator.

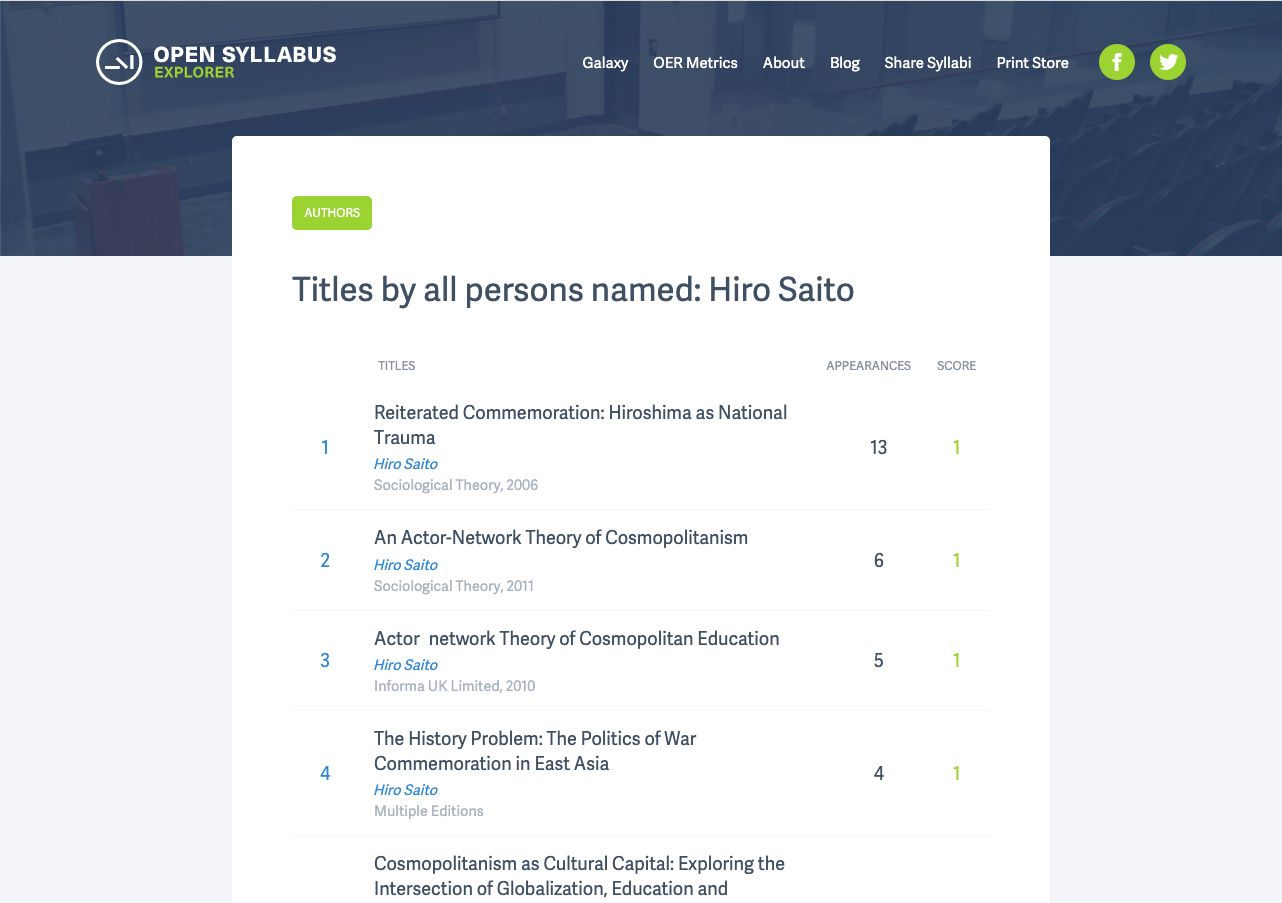

The Open Syllabus Project has mined over 9 million syllabi from over 140 countries and tracked over 4.8 million unique items, allowing you to get a sense of how popular your works are in classrooms. For example, in Open Syllabus we found that papers by Hiro Saito have been assigned as reading material in the syllabi and reading lists of other universities.

Conclusion

Showing the impact of your work is no longer as straightforward as it used to be, given the various dimensions of “impact”. If you need help with any of these tools, or just want a consultation on showing research impact, we are here to help.

Related articles

- Find out more about Overton from a ResearchRadar article “Measuring societal impact: Who is citing SMU in policy documents?”

- Find out more about scite from a ResearchRadar article “scite- a new generation discovery citation index- an interview with scite co-founder and CEO Josh Nicholson”