By Aaron Tay, Lead, Data Services

What is the CWTS Leiden Ranking?

Many people are aware of the “big three” university rankings: the THE (Times Higher Education) rankings, the QS rankings and the Shanghai Ranking, also known as the Academic Ranking of World Universities (ARWU).

But are you aware of the Leiden Ranking, which has been released annually by the Centre for Science and Technology Studies (CWTS) since 2003 using Web of Science data?

CWTS describes itself as “an interdisciplinary research institute at Leiden University that studies scientific research and its connections to technology, innovation, and society”, with “a team of over 40 researchers and other professionals with an internationally recognised expertise in research assessment, science policy, scientometrics, and other related areas”.

For example, the team includes internationally renowned bibliometrics experts such as Professor Ludo Waltman, who, among other things, is the founding Editor-in-Chief of the journal Quantitative Science Studies, winner of the 2021 Derek John de Solla Price Medal and, together with Nees Jan van Eck, co-developer of the popular bibliometric science mapping tool VOSviewer (see our writeup on the tool).

Leiden Ranking compared

The CWTS Leiden Ranking was first established in 2003 as a response to ARWU, aiming to demonstrate more appropriate ways to use bibliometric data for comparing universities.

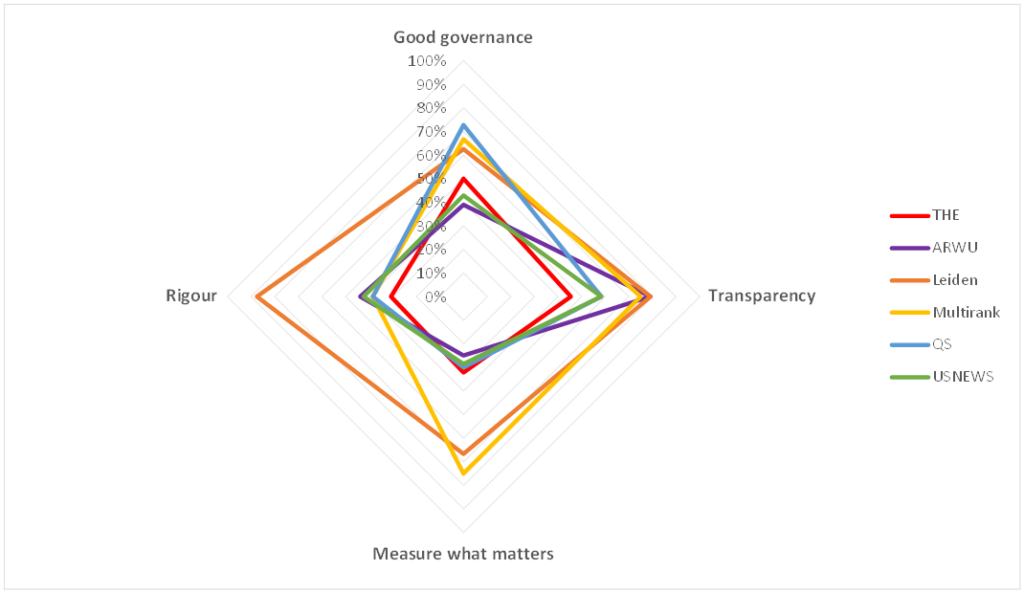

While the CWTS Leiden Ranking is less well known, some have rated it highly for its rigor and robustness compared with other university rankings.

For example, the INORMS Research Evaluation Working Group (REWG) evaluated and compared six university rankings:

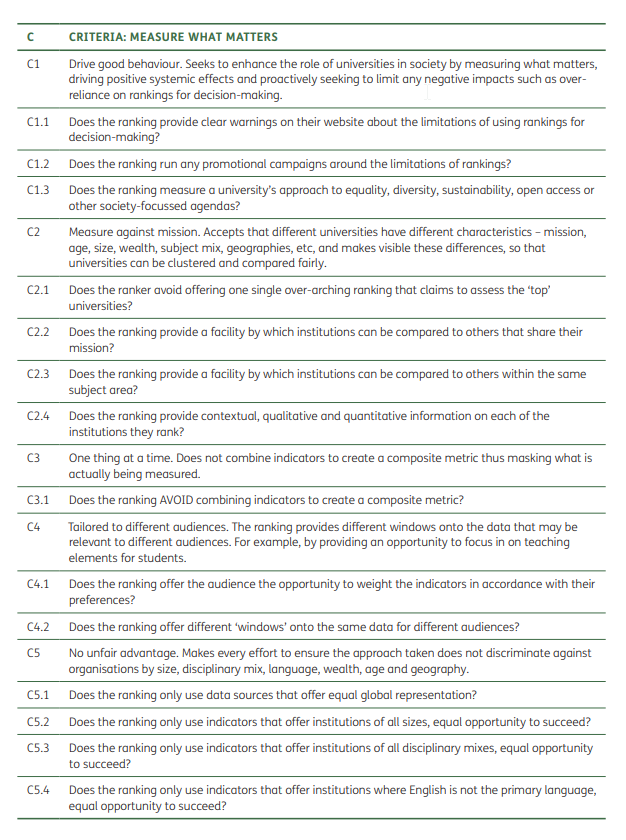

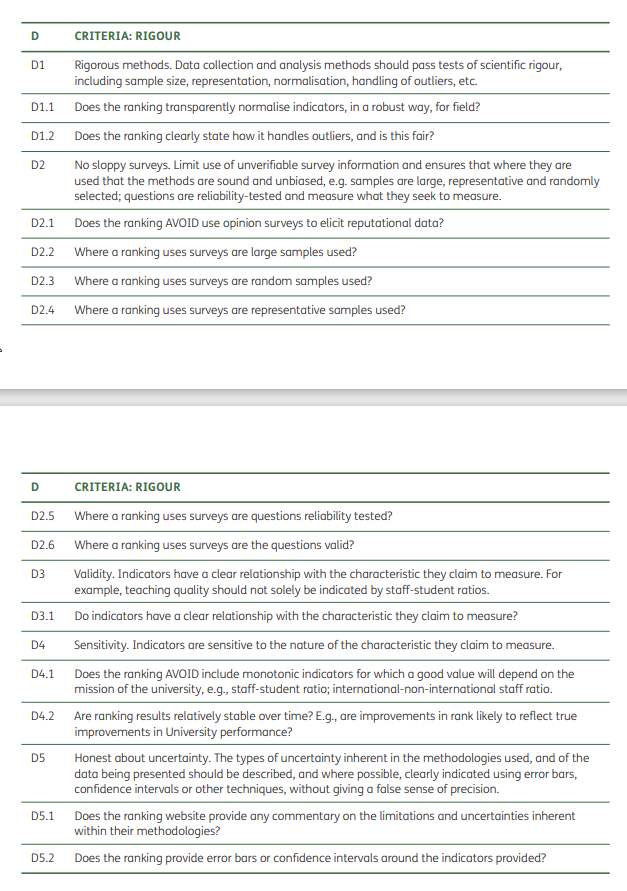

Across the four dimensions evaluated (“governance”, “rigor”, “measure what matters” and “transparency”), the Leiden Ranking came first by “a mile” in rigor, scoring 88%, and tied with U-Multirank for the highest score in “measure what matters”. It also came second, behind ARWU, in “transparency”.

See the full report for the criteria used for the four dimensions, as well as the individual evaluators' scores and comments.

What the Leiden Ranking measures

The Leiden Ranking ranks over a thousand universities based on their publications over a four-year period (for example, the Leiden Ranking 2023 covers publications from 2018–2021), using data drawn from the Science Citation Index Expanded, the Social Sciences Citation Index and the Arts & Humanities Citation Index. It offers four main types of indicators:

- Scientific impact indicators

- Collaboration indicators

- Open access indicators

- Gender indicators

As mentioned earlier, the Leiden Ranking scores particularly highly in “rigor” and “measure what matters”. For example, although it has a default sort order, the ranking can be sorted in multiple ways using indicators from each of the four types above.

It avoids subjective reputational surveys, normalises indicators where appropriate and represents uncertainty using stability intervals.
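A stability interval indicates how much an indicator might change if the underlying set of publications were slightly different. The general idea can be illustrated with a bootstrap: resample a university's publications with replacement many times, recompute PP(top 10%) each time and keep the middle 95% of the results. This is a minimal sketch; the function names, the 1,000 resamples and the simple percentile cut are our own assumptions, not CWTS's published procedure.

```python
import random


def share_flagged(flags: list[bool]) -> float:
    """Share of publications flagged as top-cited, e.g. PP(top 10%)."""
    return sum(flags) / len(flags)


def stability_interval(flags: list[bool], n_samples: int = 1000,
                       level: float = 0.95, seed: int = 0) -> tuple[float, float]:
    """Bootstrap interval for share_flagged (illustrative only: the resample
    count and percentile method are assumptions, not CWTS's exact method)."""
    rng = random.Random(seed)  # fixed seed so results are reproducible
    n = len(flags)
    # Resample the publication set with replacement and recompute the indicator
    stats = sorted(share_flagged(rng.choices(flags, k=n)) for _ in range(n_samples))
    # Drop the lowest and highest (1 - level) / 2 of the bootstrap values
    cut = round((1 - level) / 2 * n_samples)
    return stats[cut], stats[n_samples - 1 - cut]


# Hypothetical universities: both have 20% of their papers in the top 10%
small = [True] * 20 + [False] * 80
large = [True] * 200 + [False] * 800
print(stability_interval(small))
print(stability_interval(large))
```

With the same 20% share, the hypothetical 1,000-paper university gets a noticeably narrower interval than the 100-paper one, which is why stability intervals are most informative when comparing smaller universities.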

The indicators can also be broken down by field. An algorithm assigns individual publications to over 4,000 micro-level fields of science (as of 2023) based on the citation relationships between millions of papers, and these micro-level fields are then mapped to five main fields:

- Biomedical and health sciences

- Life and earth sciences

- Mathematics and computer science

- Physical sciences and engineering

- Social sciences and humanities
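The second step, mapping micro-level fields to main fields and then counting a university's output per main field, can be sketched as follows. We assume, purely for illustration, that each micro-level field is assigned to whichever main field is most common among its publications' journal-based labels; the input dictionaries and that majority-vote rule are hypothetical, and the citation-based clustering step itself is not shown.

```python
from collections import Counter, defaultdict


def map_micro_to_main(pub_micro: dict[str, int],
                      pub_main_hint: dict[str, str]) -> dict[int, str]:
    """Assign each micro-level field to the main field most common among its
    publications' (hypothetical) journal-based labels: an assumed rule."""
    votes: dict[int, Counter] = defaultdict(Counter)
    for pub, micro in pub_micro.items():
        votes[micro][pub_main_hint[pub]] += 1
    return {micro: counts.most_common(1)[0][0] for micro, counts in votes.items()}


def counts_by_main_field(uni_pubs: list[str], pub_micro: dict[str, int],
                         micro_to_main: dict[int, str]) -> Counter:
    """Count a university's publications per main field via their micro field."""
    return Counter(micro_to_main[pub_micro[p]] for p in uni_pubs)


MATHS = "Mathematics and computer science"
PHYS = "Physical sciences and engineering"
SSH = "Social sciences and humanities"

# Hypothetical output of a citation-based clustering step: publication -> micro field
pub_micro = {"p1": 1, "p2": 1, "p3": 1, "p4": 2, "p5": 2}
# Hypothetical journal-based main-field label for each publication
pub_main_hint = {"p1": MATHS, "p2": MATHS, "p3": PHYS, "p4": SSH, "p5": SSH}

micro_to_main = map_micro_to_main(pub_micro, pub_main_hint)
print(counts_by_main_field(["p1", "p3", "p4"], pub_micro, micro_to_main))
```

Note that publication `p3` is counted under mathematics and computer science even though its journal label says physical sciences, because field membership follows the citation-based micro-level field rather than the journal.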

The scientific impact indicators are mostly percentile-based rather than mean-based, which limits the influence of outliers: a single extremely highly cited paper counts no more than any other top-cited paper.

By contrast, the MNCS (mean normalised citation score), the mean number of citations of a university's publications normalised for field and publication year, is sensitive to outliers. It is not shown on the main ranking page, but each university's MNCS and other indicators can be viewed by clicking on that university.
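A small worked example shows why percentiles are more robust. Below, a hypothetical university's citation counts are compared with a fixed, hypothetical reference set for a single field and year. Replacing one paper with an extreme outlier multiplies the MNCS-style score several times over but leaves PP(top 10%) unchanged. This is a simplified sketch: ties at the threshold are ignored, and real reference sets span many fields and years.

```python
from statistics import mean


def top_threshold(reference: list[int], top_percent: int) -> int:
    """Citation count needed to be in the top `top_percent`% of the
    reference set (ties at the threshold are ignored for simplicity)."""
    k = max(1, len(reference) * top_percent // 100)
    return sorted(reference, reverse=True)[k - 1]


def mncs(papers: list[int], reference: list[int]) -> float:
    """Mean of each paper's citations divided by the reference (field-year) mean."""
    ref_mean = mean(reference)
    return mean(c / ref_mean for c in papers)


def pp_top(papers: list[int], reference: list[int], top_percent: int = 10) -> float:
    """Proportion of papers at or above the top-X% threshold."""
    threshold = top_threshold(reference, top_percent)
    return sum(c >= threshold for c in papers) / len(papers)


reference = list(range(100))  # hypothetical field-year set: 0..99 citations
base = [10, 20, 30, 40, 50, 60, 70, 80, 90, 95]
outlier = base[:-1] + [2000]  # one paper becomes extremely highly cited

print(round(mncs(base, reference), 2), pp_top(base, reference))        # 1.1 0.2
print(round(mncs(outlier, reference), 2), pp_top(outlier, reference))  # 4.95 0.2
```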

On its indicators page, CWTS stresses the importance of distinguishing between size-dependent and size-independent indicators, and of providing both.

For example, the ranking can be sorted by both the size-dependent P(top 1%) indicator and the size-independent PP(top 1%) indicator, where:

- P(top 1%) - The number of a university’s publications that, compared with other publications in the same field and in the same year, belong to the top 1% most frequently cited

- PP(top 1%) - The proportion of a university’s publications that, compared with other publications in the same field and in the same year, belong to the top 1% most frequently cited
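The difference between the two indicators can be sketched as follows. Each publication is compared only with publications from the same field and year (a hypothetical reference set here), the top 1% threshold is computed per field-year, and P(top 1%) counts the qualifying publications while PP(top 1%) divides that count by the university's total. The field labels, citation counts and simple whole-paper counting are illustrative assumptions, and ties at the threshold are ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Paper:
    field: str
    year: int
    citations: int


def top_threshold(citations: list[int], top_percent: int) -> int:
    """Citations needed to be in the top `top_percent`% (ties ignored)."""
    k = max(1, len(citations) * top_percent // 100)
    return sorted(citations, reverse=True)[k - 1]


def thresholds(reference: dict[tuple[str, int], list[int]],
               top_percent: int) -> dict[tuple[str, int], int]:
    """One threshold per (field, year), so papers are only compared like with like."""
    return {key: top_threshold(cits, top_percent) for key, cits in reference.items()}


def p_top(papers: list[Paper], cutoffs: dict[tuple[str, int], int]) -> int:
    """Size-dependent: number of papers at or above their field-year threshold."""
    return sum(p.citations >= cutoffs[(p.field, p.year)] for p in papers)


def pp_top(papers: list[Paper], cutoffs: dict[tuple[str, int], int]) -> float:
    """Size-independent: the same count divided by the university's output."""
    return p_top(papers, cutoffs) / len(papers)


# Hypothetical field-year reference sets
reference = {("Physics", 2020): list(range(200)), ("Economics", 2020): list(range(100))}
cutoffs = thresholds(reference, 1)

big_u = ([Paper("Physics", 2020, 199), Paper("Physics", 2020, 198),
          Paper("Physics", 2020, 150)] + [Paper("Physics", 2020, 10)] * 97)
small_u = [Paper("Economics", 2020, c) for c in (99, 20, 5, 1)]

print(p_top(big_u, cutoffs), pp_top(big_u, cutoffs))      # 2 0.02
print(p_top(small_u, cutoffs), pp_top(small_u, cutoffs))  # 1 0.25
```

The larger hypothetical university comes out ahead on P(top 1%), while the smaller one comes out ahead on PP(top 1%). The economics paper with 99 citations also qualifies while the physics paper with 150 does not, because each is judged against its own field and year.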

Similar size-dependent and size-independent variants exist for the collaboration, open access and gender indicators.

Why SMU is not represented in the Leiden ranking

Unlike some other university rankings, the Leiden Ranking does not require universities to submit data in order to be included. However, for manpower reasons, it understandably does not include every institution.

What about SMU? SMU has never been listed in the Leiden Ranking, because our output does not meet the minimum publication threshold for inclusion.

For the 2023 edition, a university needed at least 800 Web of Science indexed publications (counting only publications classified as “research articles” and “review articles”) in the period 2018–2021.

Also, not every article indexed in the Web of Science Core Collection is included in the Leiden Ranking. The subset of articles used in the ranking is referred to as “core publications”. Core publications are publications in international scientific journals that meet certain criteria, such as being written in English, not being retracted and appearing in a “core journal” (see the list of 2023 core journals here). A core journal is in turn defined as one with an “international scope” and “a sufficiently large number of references to other core journals”.
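Taken together, these inclusion rules amount to a filter followed by a threshold check. The sketch below is a simplified, hypothetical version: it assumes the 800 threshold is applied to core publications, the record fields, document-type labels and core journal set are placeholders, each publication is counted once (full counting), and the real CWTS criteria include details not modelled here.

```python
from dataclasses import dataclass

MIN_CORE_PUBLICATIONS = 800  # 2023 edition threshold, as stated above

CORE_TYPES = {"research article", "review article"}  # placeholder labels


@dataclass(frozen=True)
class Record:
    doc_type: str
    language: str
    retracted: bool
    journal: str


def is_core(rec: Record, core_journals: set[str]) -> bool:
    """Simplified core-publication test (hypothetical subset of the criteria)."""
    return (rec.doc_type in CORE_TYPES
            and rec.language == "English"
            and not rec.retracted
            and rec.journal in core_journals)


def check_inclusion(records: list[Record], core_journals: set[str],
                    minimum: int = MIN_CORE_PUBLICATIONS) -> tuple[int, bool]:
    """Return the number of core publications and whether it meets the minimum."""
    n_core = sum(is_core(r, core_journals) for r in records)
    return n_core, n_core >= minimum


core_journals = {"Journal A"}  # hypothetical core journal list
ok = Record("research article", "English", False, "Journal A")
excluded = [
    Record("editorial", "English", False, "Journal A"),         # wrong document type
    Record("review article", "English", True, "Journal A"),     # retracted
    Record("research article", "French", False, "Journal A"),   # not in English
    Record("research article", "English", False, "Journal B"),  # not a core journal
]

print(check_inclusion([ok] * 799 + excluded, core_journals))  # (799, False)
print(check_inclusion([ok] * 800 + excluded, core_journals))  # (800, True)
```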

CWTS Leiden Ranking 2023 – two, not one ranking!

The traditional CWTS Leiden Ranking has many strengths in terms of rigor and transparency, including publishing full details of its ranking method, clearly defining its indicators and documenting the data sources used.

However, because many of its indicators are based on proprietary Web of Science data, it still does not achieve the highest transparency scores: CWTS cannot share the underlying data, so third parties cannot replicate the results.

But in 2024, alongside the traditional Leiden Ranking based on Web of Science data, CWTS also launched a parallel “open edition” of the ranking based on fully open OpenAlex data, released under a CC0 licence. (See our coverage of the OpenAlex launch in January 2022.)
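Because OpenAlex data is open, anyone can query it directly. As a minimal sketch, the code below builds a request to the OpenAlex works endpoint for one institution's articles and reviews in 2018–2021 and reads the total from the response. The filter names reflect our understanding of the public OpenAlex API and should be checked against its documentation, the ROR ID is a placeholder, and the raw count will not match the open edition's figures, which apply their own core-publication rules.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

OPENALEX_WORKS = "https://api.openalex.org/works"


def build_works_url(ror_id: str, start_year: int, end_year: int) -> str:
    """Build an OpenAlex works query for one institution (filter names are
    assumed from the public API docs; verify before relying on them)."""
    filters = ",".join([
        f"authorships.institutions.ror:{ror_id}",
        f"publication_year:{start_year}-{end_year}",
        "type:article|review",
    ])
    # per-page=1: we only need the total in meta.count, not the records themselves
    return OPENALEX_WORKS + "?" + urlencode({"filter": filters, "per-page": 1})


def parse_count(payload) -> int:
    """Extract the total number of matching works from an OpenAlex JSON response."""
    return json.loads(payload)["meta"]["count"]


def fetch_count(ror_id: str, start_year: int = 2018, end_year: int = 2021) -> int:
    """Network call; returns a raw OpenAlex count, not a Leiden Ranking figure."""
    with urlopen(build_works_url(ror_id, start_year, end_year)) as resp:
        return parse_count(resp.read())


# Example (requires network access; "0xxxxxx00" is a placeholder, not a real ROR ID):
# print(fetch_count("0xxxxxx00"))
```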

How does the “open edition” of the Leiden Ranking compare with the traditional ranking based on Web of Science? Find out in the next issue!