By Aaron Tay, Lead, Data Services

One of the challenges of doing a literature review is that you are constantly switching between different modes of searching and browsing. You may find some relevant papers via keyword searching, or stumble upon some via Wikipedia or at a conference. This leads you to look at the references or citations of those papers, where you may spot interesting authors whose publications you decide to check out, which leads to more citation mining... and before you know it, you might be lost.

It often feels like you are tumbling down the rabbit hole, as each relevant paper you find opens up yet more avenues to explore and the possibilities seem endless.

What if a tool existed that helped you with doing all this in a seamless way and as a bonus provided quick visualisations to help you decide which direction you might want to go when exploring the literature “forest”?

ResearchRabbit, a new literature mapping tool, aims to do this.

Adding relevant papers to start off in ResearchRabbit

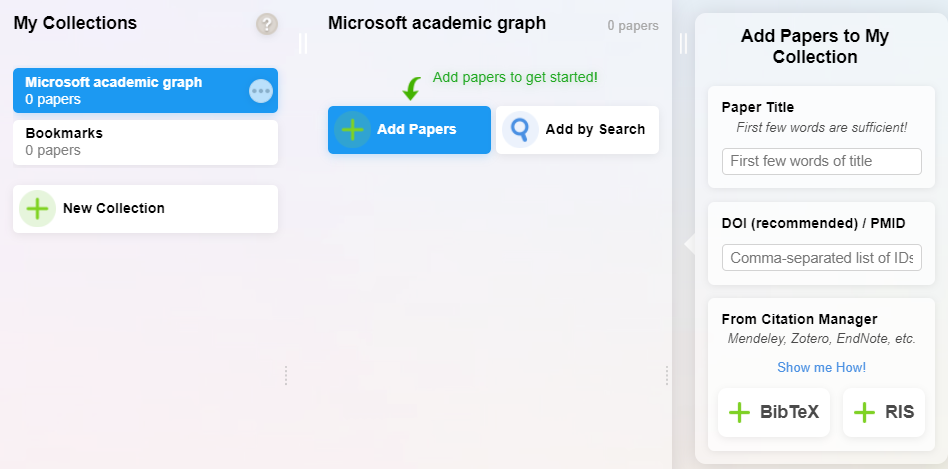

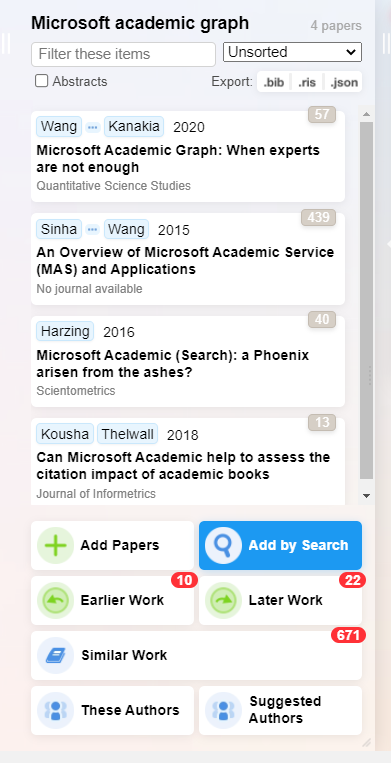

Like other similar tools (see the earlier Research Radar piece), you start off by adding one or several relevant papers you already know into a “collection”. You can add specific papers by title or unique IDs (DOIs/PMIDs), or even import references from most reference managers using BibTeX or RIS format.

Don’t have any papers to start off with? You can also click “Add by Search” to search by keyword using one of several search engines.

Once you have added one or more papers into the collection, this is where the fun starts.

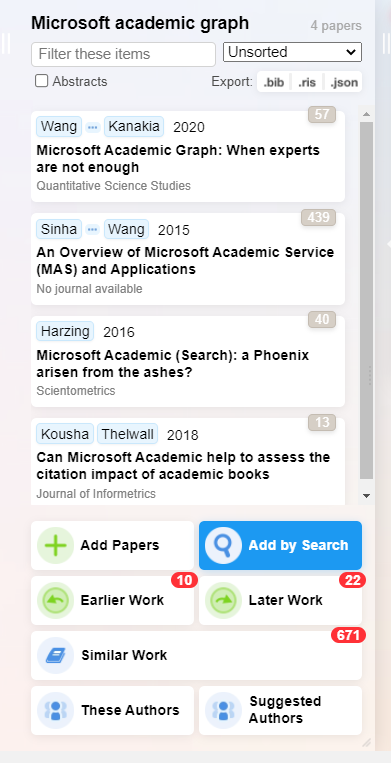

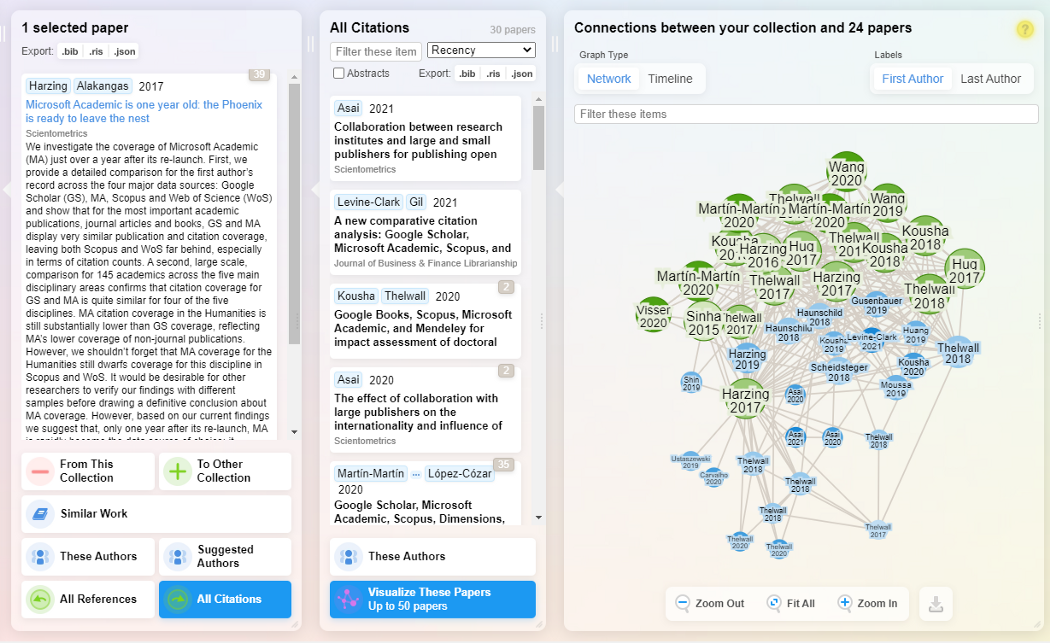

Say we pick one paper, Harzing & Alakangas (2017), to focus on. Clicking on it gives us options including:

- “All References” (show all papers that the selected paper references)

- “All citations” (show all papers that cite selected paper)

- “Similar Work” (show “similar papers” to selected paper that you might be interested in)

- “These Authors” (show all authors of selected paper)

- “Suggested Authors” (show suggested authors that you might be interested in)

Let’s say we want to look for potential papers to add to our collection by looking at the references of Harzing & Alakangas (2017). Clicking on “All References” will generate a new column titled “All References”, listing the 30 papers referenced by that paper that are known to ResearchRabbit.

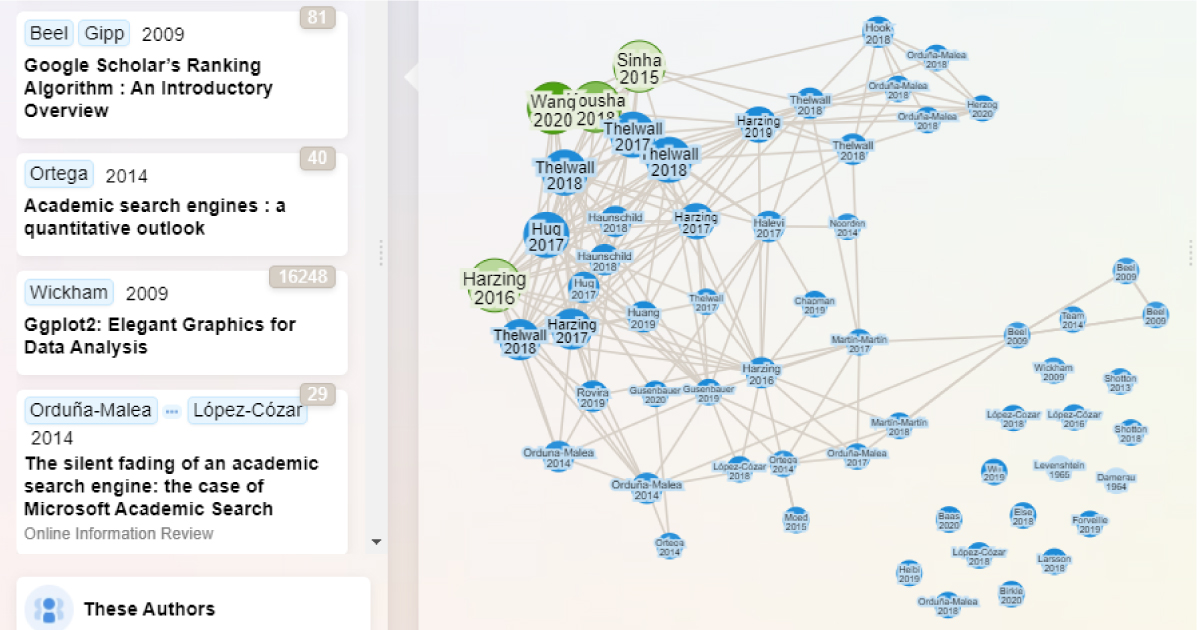

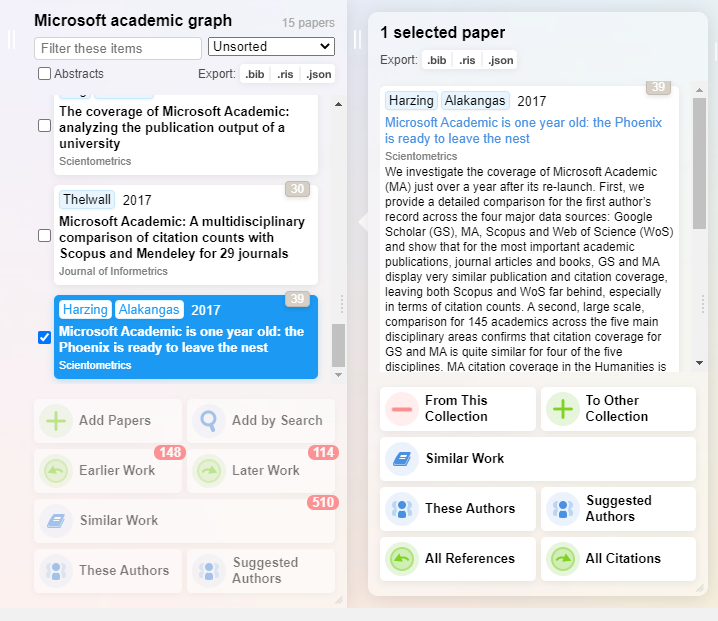

You also get a visualisation graph, with each paper represented by a node and the links between nodes representing citations. More importantly, papers that are already in your collection are coloured green and those that are not are coloured blue.

This helps you see how connected the papers you are currently considering are to those already in your collection.
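To make the difference between references and citations concrete, here is a minimal Python sketch of a toy citation graph. It is an illustration only, not how ResearchRabbit is implemented: the paper IDs, the `cites` mapping and the helper function names are all hypothetical.

```python
# Toy citation graph: each key cites the papers in its list.
# All paper IDs are made-up placeholders, not real ResearchRabbit data.
cites = {
    "seed": ["ref_a", "ref_b"],
    "ref_a": ["ref_b"],
    "ref_b": [],
    "later_x": ["seed", "ref_a"],
    "later_y": ["seed"],
}


def all_references(paper):
    """Papers that `paper` cites (its reference list)."""
    return sorted(cites.get(paper, []))


def all_citations(paper):
    """Papers that cite `paper`."""
    return sorted(p for p, refs in cites.items() if paper in refs)


def node_colours(papers, collection):
    """Green for papers already in the collection, blue otherwise."""
    return {p: ("green" if p in collection else "blue") for p in papers}


collection = {"seed", "ref_b"}
references = all_references("seed")
citations = all_citations("seed")
colours = node_colours(references + citations, collection)

print("References:", references)
print("Citations:", citations)
print("Colours:", colours)
```

In this toy data, `ref_b` is the only candidate already in the collection, so it is the only green node. The other candidates come out blue.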

If any of the papers listed in this new column are of interest, you can drag them to your collection or click on them and select “To this collection”.

From here, you can repeat this process: select one of these papers and click on, say, “All References” to generate yet another column of papers that are references of that paper, and continue drilling down further.

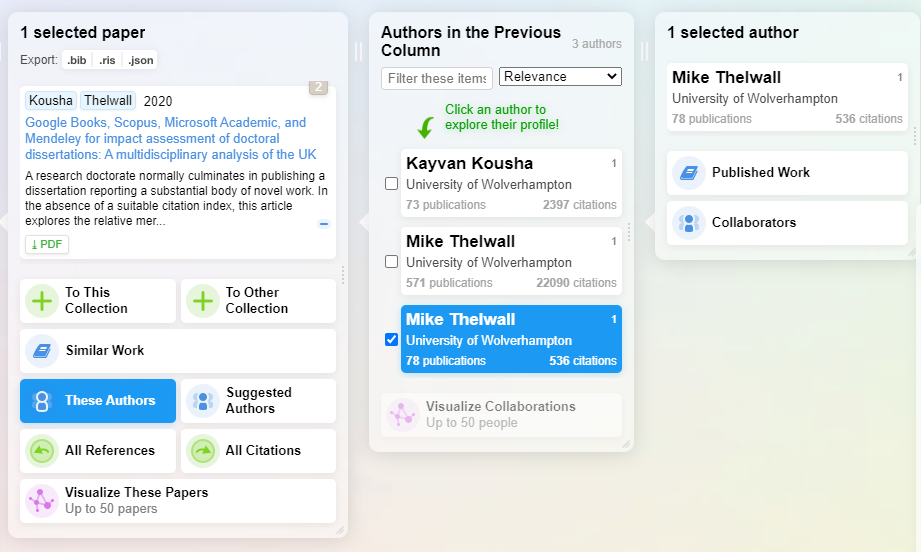

But instead, let’s try clicking on one of the papers, Kousha & Thelwall (2020). Let’s say we are interested in the authors of this paper and want to see what else they have written.

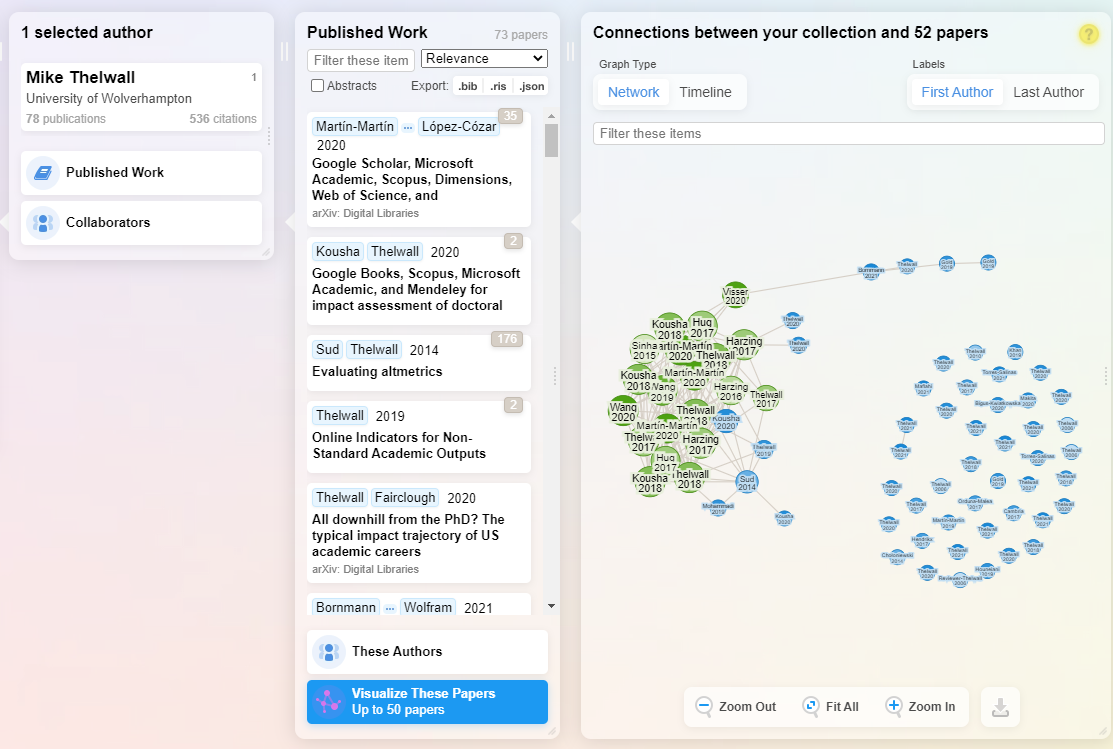

We can select the paper, select “These Authors” and, for the selected author, click on “Published work”.

This will generate, as you probably expected, yet another new column of papers and the same kind of visualisation you have seen earlier.

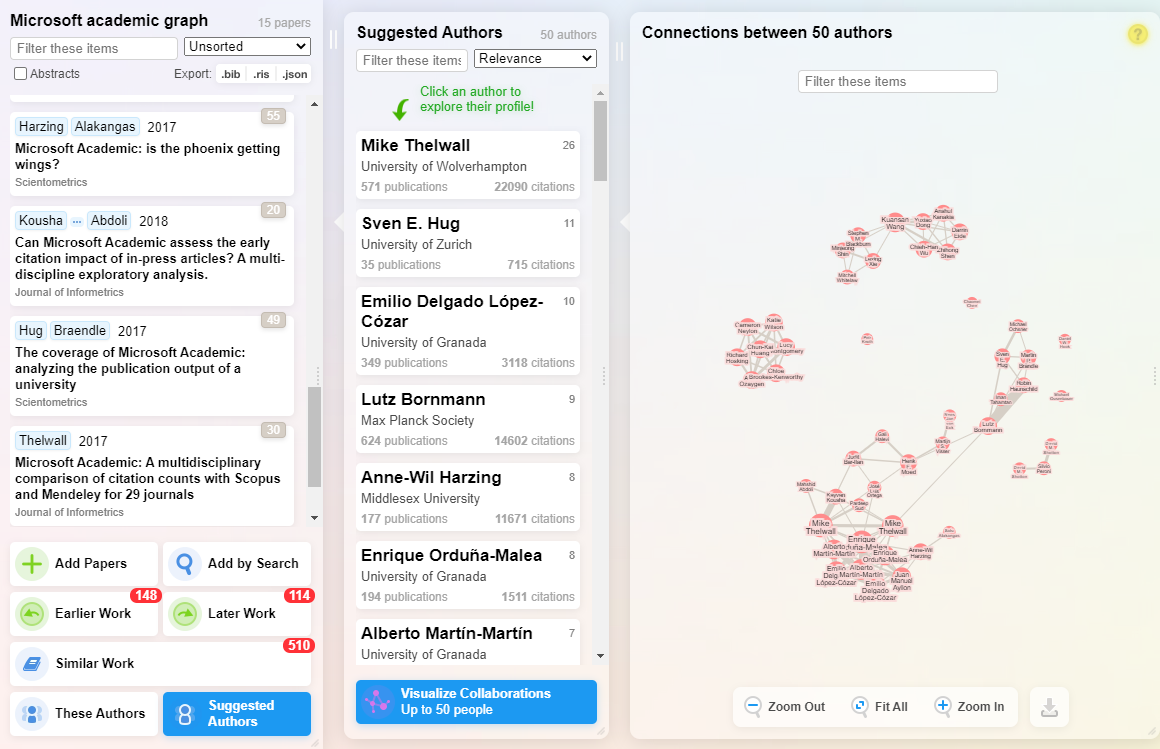

Finally, clicking on “Suggested Authors” will prompt ResearchRabbit to produce a new column of suggested authors as well as a visualisation. This time, the visualisation will be a co-authorship graph showing which authors have written papers together.

This allows you to tell which suggested authors have worked together before, helping you detect possible clusters of research.
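The idea of spotting clusters in a co-authorship graph can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not ResearchRabbit’s actual method: the author names are placeholders, and a “cluster” here simply means a group of authors linked directly or indirectly by co-authorship.

```python
from itertools import combinations

# Hypothetical author lists, one per paper; the names are placeholders.
papers = [
    ["Author A", "Author B"],
    ["Author B", "Author C"],
    ["Author D", "Author E"],
    ["Author F"],
]


def coauthor_graph(papers):
    """Map each author to the set of people they have co-authored with."""
    graph = {}
    for authors in papers:
        for author in authors:
            graph.setdefault(author, set())
        for a, b in combinations(authors, 2):
            graph[a].add(b)
            graph[b].add(a)
    return graph


def clusters(graph):
    """Group authors into connected components of the co-authorship graph."""
    seen, groups = set(), []
    for start in sorted(graph):
        if start in seen:
            continue
        stack, group = [start], set()
        while stack:
            author = stack.pop()
            if author in group:
                continue
            group.add(author)
            stack.extend(graph[author] - group)
        seen |= group
        groups.append(sorted(group))
    return groups


graph = coauthor_graph(papers)
groups = clusters(graph)
for group in groups:
    print(group)
```

Authors A and C never wrote a paper together in this toy data, but both wrote with Author B, so all three land in the same cluster.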

As you add more papers to the collection, you will be given options like “Similar paper”, “Later work”, “Earlier work” that are recommendations based on multiple papers in the collection. Clicking on them will give you the same panels as seen earlier.

Like most similar tools, ResearchRabbit allows you to export what you have found in your collection to reference managers via RIS or BibTeX files. You will also get weekly updates as the algorithm finds new papers that might be of interest.

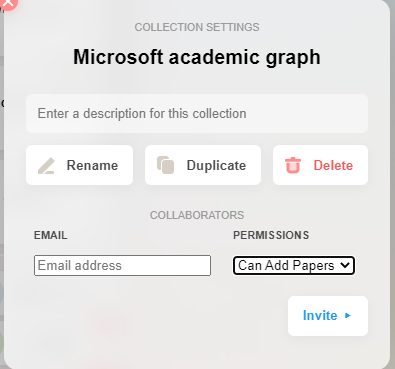

ResearchRabbit also promotes collaboration. You can share a collection with other users either in read-only mode or with permission to add papers.

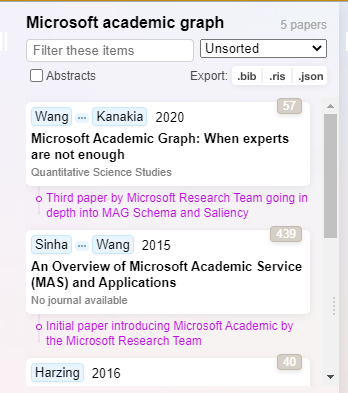

ResearchRabbit allows you to add notes, which can be helpful if you have collaborators adding to the collection.

Summary

A good literature review combines several techniques: keyword searching, using relevant papers as seeds to find related papers through their citations, references or other works by their authors, and repeating these steps until you have exhausted the possibilities. ResearchRabbit reduces the friction of these steps, and its visualisation graphs help you navigate the landscape and quickly populate your collection with relevant papers.
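The iterative “seed and expand” process can be pictured as a breadth-first walk over citation links. The Python sketch below is a rough illustration under assumptions of my own: the `references` data, the paper IDs and the `max_hops` cut-off are all hypothetical, and in practice you would judge the relevance of each paper before expanding it rather than following every link.

```python
from collections import deque

# Toy citation data; IDs are placeholders. references[p] lists what p cites.
references = {
    "seed": ["a", "b"],
    "a": ["c"],
    "b": [],
    "c": [],
    "d": ["seed"],
    "e": ["d"],
}


def neighbours(paper):
    """References of `paper` plus the papers that cite it."""
    cited_by = [p for p, refs in references.items() if paper in refs]
    return references.get(paper, []) + cited_by


def snowball(seeds, max_hops):
    """Breadth-first expansion from the seeds, up to `max_hops` links away."""
    hops = {s: 0 for s in seeds}
    queue = deque(seeds)
    while queue:
        paper = queue.popleft()
        if hops[paper] == max_hops:
            continue  # do not expand papers at the hop limit
        for nxt in neighbours(paper):
            if nxt not in hops:
                hops[nxt] = hops[paper] + 1
                queue.append(nxt)
    return hops


found = snowball(["seed"], max_hops=2)
print(found)
```

Each paper is recorded with the number of citation links separating it from the nearest seed, which gives a simple way to limit how far down the rabbit hole the expansion goes.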

How does ResearchRabbit compare to the other three tools mentioned in the earlier Research Radar piece on tools to help with literature mapping?

It’s still early days, but in terms of simplicity and low effort as a one-shot tool, this librarian feels Connected Papers is still the one to beat, as in my experience it works very well with just one paper. In comparison, while ResearchRabbit can work with one paper, it really starts to shine when you have more papers in your collection.

ResearchRabbit has a steeper learning curve due to the variety of options available. However, if you are in the market for a tool that supports intensive, customised literature review and are willing to spend the time, ResearchRabbit is very promising once you get the hang of it.

Do note that, like all the other literature mapping tools reviewed, the quality of ResearchRabbit’s recommendations depends heavily on the metadata available (title, abstract, citations) from the index used. For example, the tool may have no information, or incomplete information due to extraction errors, on the references of a paper, so relying on it to drill down to references could mean missing relevant references that you would have discovered had you checked by hand.