By Aaron Tay, Lead, Data Services

In past Research Radar articles, we discussed the drawbacks of using ChatGPT and other large language models for information retrieval: the high risk of hallucination with no way to verify the answer, and the fact that these models often rely on data that is months old because they are costly to train.

The proposed solution was to pair the large language model with a search engine: the search engine finds relevant content, which is then fed to the large language model to extract or summarise the answer. In the first article, we discussed two general web search engines enhanced in this way, Bing Chat and Perplexity.
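To make the "search first, then generate" idea concrete, here is a minimal sketch. It is not how Bing Chat, Perplexity or Scite assistant are actually built: the three documents, the word-overlap ranking and the function names (`search`, `build_prompt`) are all assumptions made for illustration, and the final call to a language model is left out.

```python
import re
from collections import Counter

# Toy corpus standing in for a search index (hypothetical documents).
DOCUMENTS = {
    "doc1": "Google Scholar can find much grey literature and specific, known studies.",
    "doc2": "Large language models are costly to train, so their data is often months old.",
    "doc3": "Citation indexes count how often a paper is cited by other papers.",
}

def tokenize(text):
    """Lowercase a string and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def search(query, documents, top_k=2):
    """Rank documents by how many query words they share (a toy relevance score)."""
    query_terms = set(tokenize(query))
    scores = Counter()
    for doc_id, text in documents.items():
        overlap = query_terms & set(tokenize(text))
        if overlap:
            scores[doc_id] = len(overlap)
    return [doc_id for doc_id, _ in scores.most_common(top_k)]

def build_prompt(query, documents, hits):
    """Assemble the text a language model would receive: numbered sources, then the question."""
    sources = "\n".join(
        f"[{i}] {documents[doc_id]}" for i, doc_id in enumerate(hits, start=1)
    )
    return (
        "Answer the question using only the sources below and cite them by number.\n"
        f"{sources}\n"
        f"Question: {query}"
    )

question = "Can Google Scholar find grey literature?"
hits = search(question, DOCUMENTS)
prompt = build_prompt(question, DOCUMENTS, hits)
print(prompt)
```

Because the model is asked to answer only from the numbered sources, each generated sentence can in principle be traced back to a retrieved document, which is what makes the answers checkable.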

In the next article, we discussed Elicit.org, the academic-only cousin of Bing Chat and Perplexity, which searches only over Semantic Scholar data. However, as large as the Semantic Scholar open data is, it is limited to open access full text. Can we do better with a similar tool that searches over more than open access full text?

The answer is the newly launched Scite assistant by Scite.

SMU first subscribed to Scite.ai in 2019, and we discussed the tool in a Research Radar report at the time. Since then, it has gathered a small, passionate group of users.

What is Scite.ai - a refresher

To refresh your memory, Scite.ai is a new-generation citation index. At first glance, it looks similar to any other citation index, such as Web of Science, Scopus or Google Scholar, and you can get citation counts from Scite.ai.

However, Scite.ai goes further in that it also classifies citations into three citation types:

- Supporting cite

- Contrasting cite

- Mentioning cite

This is done by Scite collecting citation statements or “citances”. What is a citation statement? It is the sentence extracted from the full-text article that includes the in-text citation. The citation statement shows how a citation was used by allowing users to easily read it. Scite also includes the sentence before and after the citation statement, which they collectively call the citation context. Seeing the citation context can help users easily see how an article or topic has been cited without having to open the full text of every article that cites it, saving users a lot of time while providing them with extra information and nuance.
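As a rough illustration of what a citation statement and its citation context look like, the sketch below pulls them out of a short passage. It is an assumption-laden toy rather than Scite's actual pipeline: the sentence splitter is naive, the function names are made up, and the first and last sentences of the sample passage are invented around a real citation statement.

```python
import re

def split_sentences(text):
    """Naive sentence splitter: break after '.', '?' or '!' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.?!])\s+", text) if s.strip()]

def citation_contexts(text, marker):
    """Return each sentence containing `marker`, plus the sentence before and after it."""
    sentences = split_sentences(text)
    contexts = []
    for i, sentence in enumerate(sentences):
        if marker in sentence:
            contexts.append({
                "before": sentences[i - 1] if i > 0 else None,
                "citation_statement": sentence,
                "after": sentences[i + 1] if i + 1 < len(sentences) else None,
            })
    return contexts

# Sample passage: only the middle sentence is a real citation statement.
passage = (
    "Grey literature is hard to locate. "
    "Previous research has shown that articles identified within systematic reviews "
    "are identifiable using Google Scholar [13]. "
    "We therefore tested its coverage."
)
for context in citation_contexts(passage, "[13]"):
    print(context)
```

A real system would also need to handle author-year citations, abbreviations that contain full stops, and citations that span several sentences.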

While the rise of open scholarly metadata means Scite has pretty complete metadata (e.g., title, author, reference list) on over 179 million articles, book chapters and preprints, access to citation statements/context is more difficult. Scite has managed to sign indexing partnerships with various publishers like Wiley, Sage and a few others to increase its collection of citation statements beyond open access papers. Currently, it covers over 1.2 billion citation statements, probably the largest collection of citation statements available so far.

Adding ChatGPT to Scite

Given that Scite has additional scholarly data that goes beyond open access, it is a natural idea to build a search engine over Scite and pair it with ChatGPT, allowing ChatGPT to generate answers from the content found in Scite.ai. This is indeed what Scite assistant is.

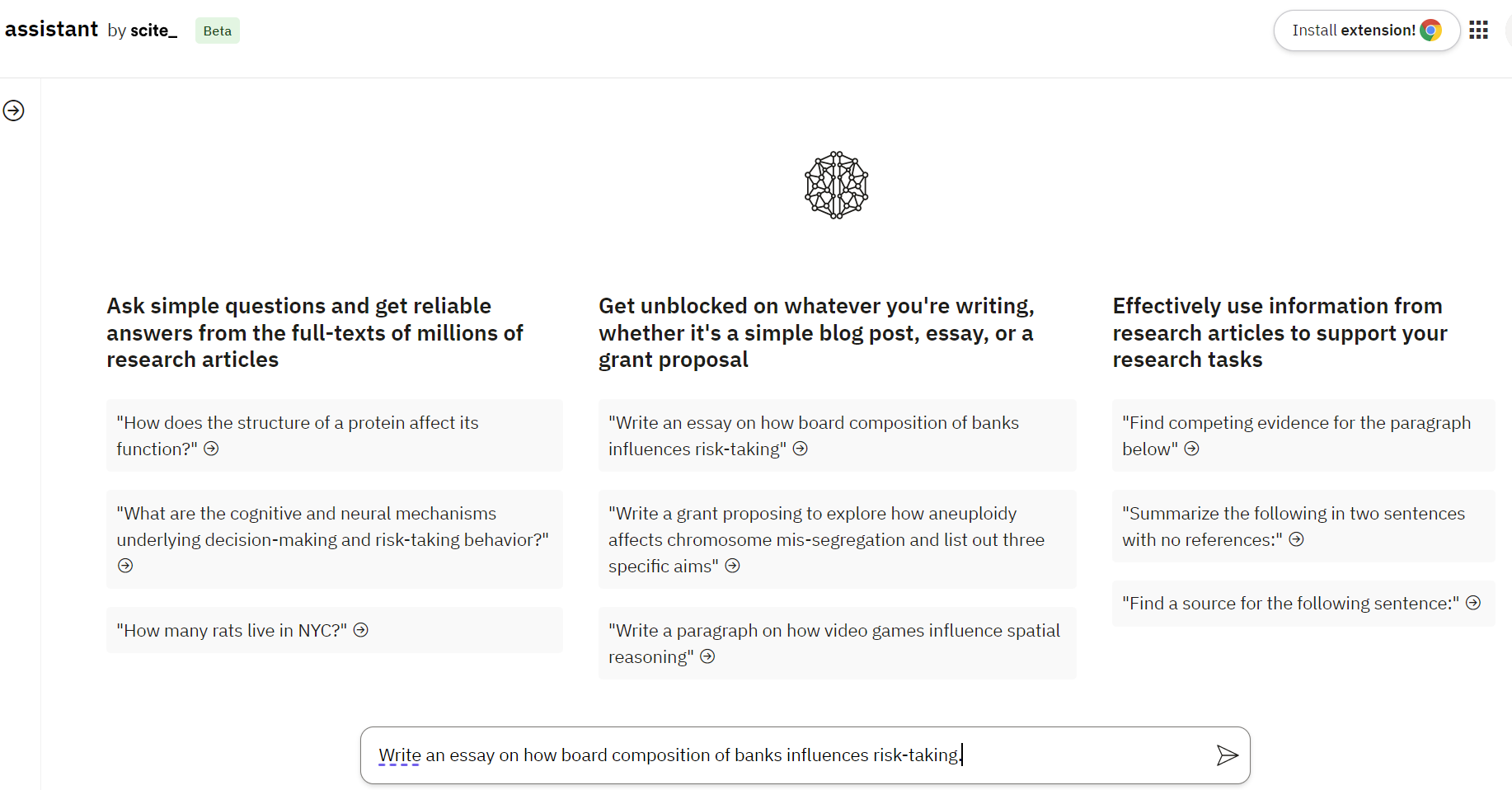

The Scite assistant starting page provides some example prompts to try, but you are not limited to those.

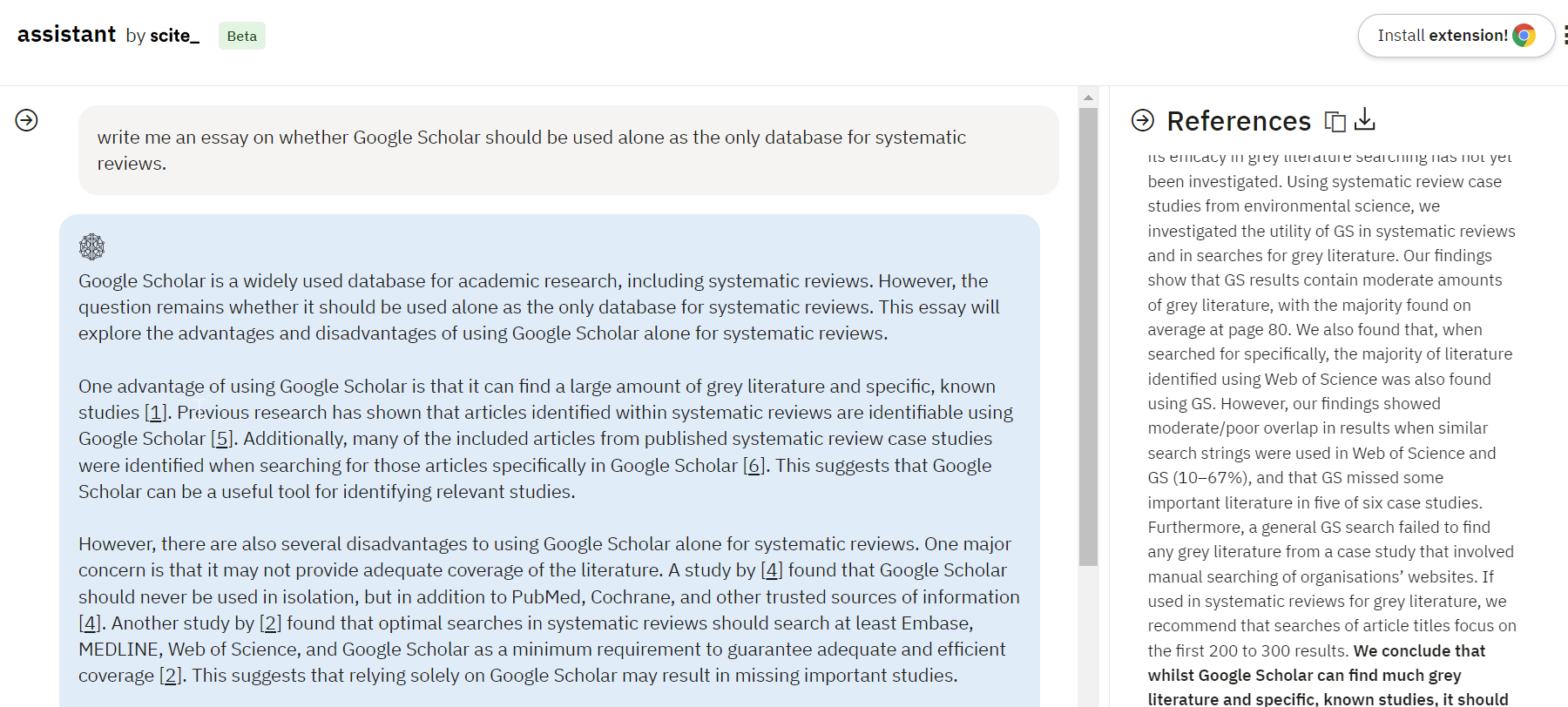

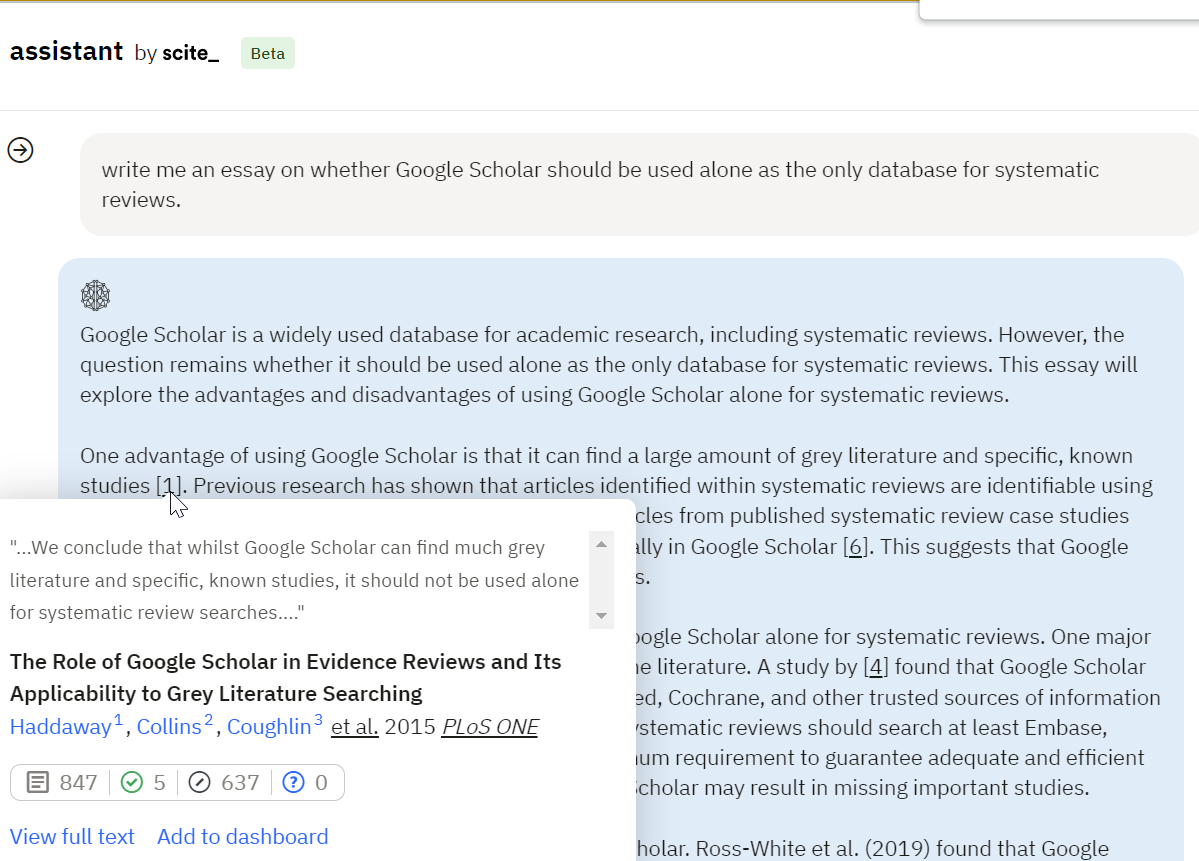

In this example, I prompted it with “write me an essay on whether Google Scholar should be used alone as the only database for systematic reviews” and it produced an essay in response.

Similar to Bing Chat, Perplexity and Elicit.org, it searches through Scite.ai for evidence/text that is used to support the generated sentences.

There is, however, some nuance to how these citations should be interpreted. When you mouse over a citation, you can see the text used to generate the sentence and the paper that text comes from.

For example, in the image above the sentence

One advantage of using Google Scholar is that it can find a large amount of grey literature and specific, known studies [1].

is supported by the sentence (as seen in the mouseover)

"...We conclude that whilst Google Scholar can find much grey literature and specific, known studies, it should not be used alone for systematic review searches...."

which is from the paper The Role of Google Scholar in Evidence Reviews and Its Applicability to Grey Literature Searching (Haddaway et al., 2015).

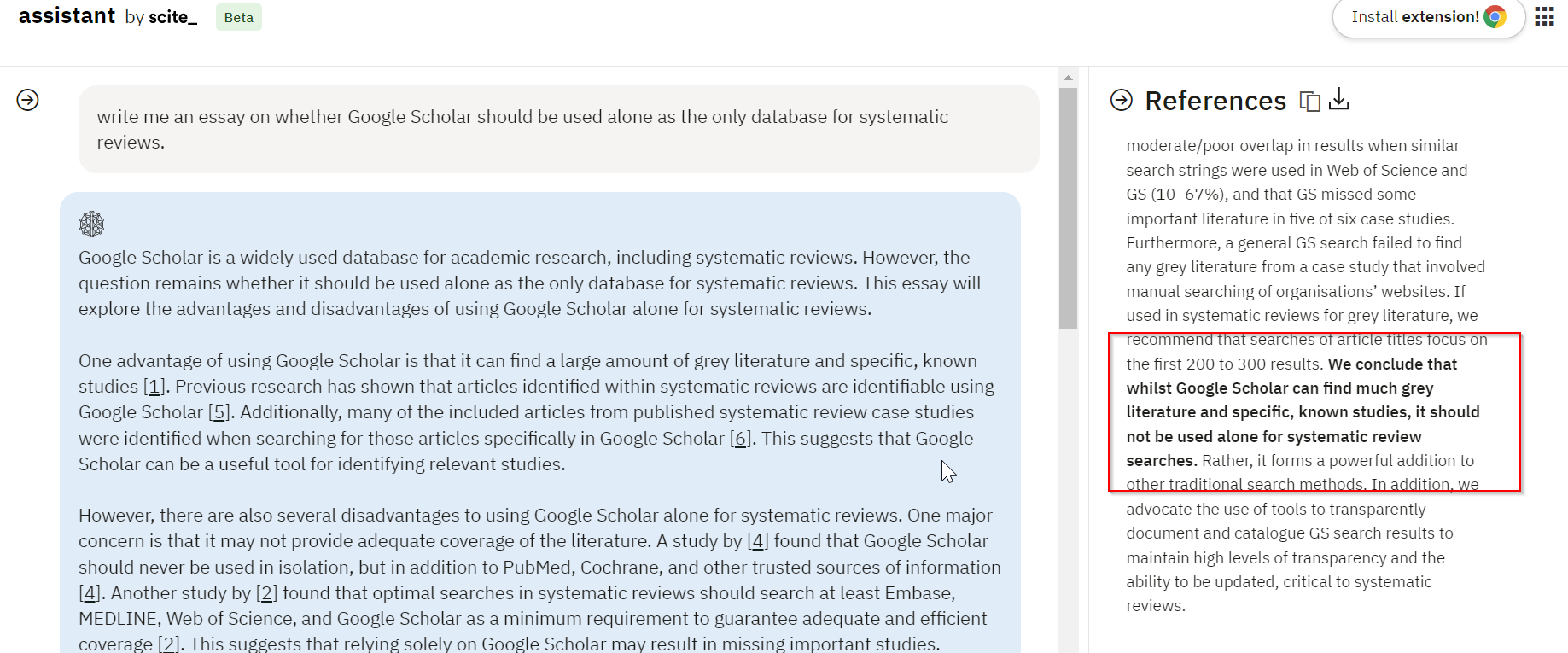

In fact, if you click on the link, the sidebar shows the citation comes from text in the abstract of the paper.

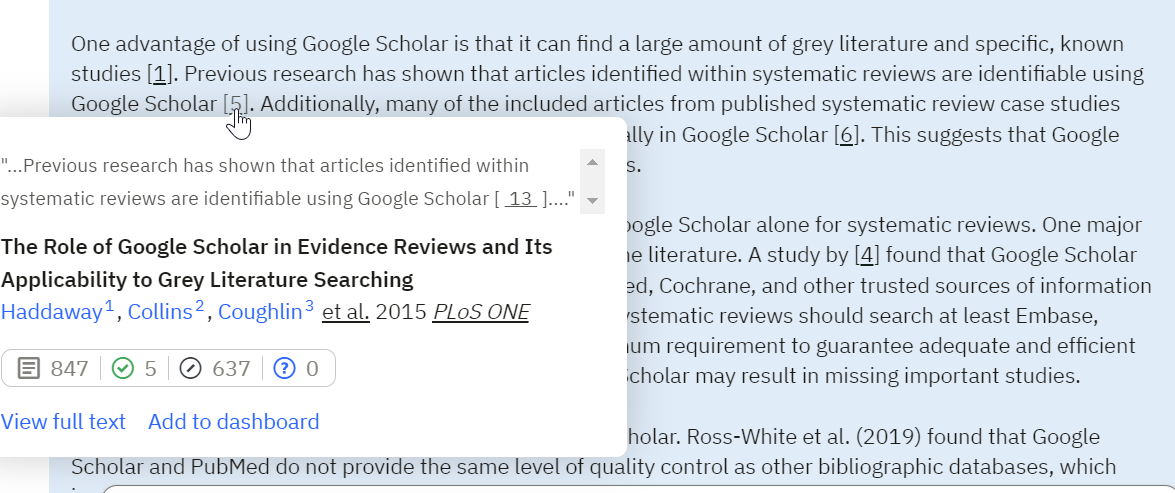

Somewhat trickier is when the evidence is actually a citation statement. For example, in the image below you can see that [5] appears to cite the same paper as before, The Role of Google Scholar in Evidence Reviews and Its Applicability to Grey Literature Searching (Haddaway et al., 2015).

However, when you mouse over it, you see the evidence is actually coming from a sentence in the cited paper that says

"...Previous research has shown that articles identified within systematic reviews are identifiable using Google Scholar [ 13 ]...."

In other words, Haddaway et al. (2015) does not itself support the statement; it refers to another paper, the “previous research”. It seems to me that citing Haddaway et al. (2015) in this example amounts to an indirect citation and should be avoided. Instead, the correct citation should be whatever is referenced as [13] in that paper.

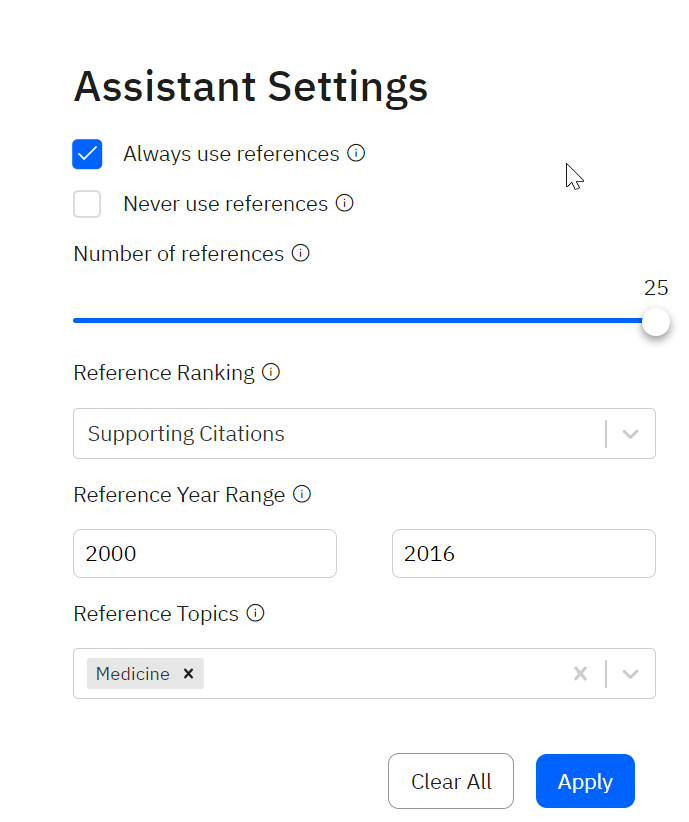
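One way to spot this kind of indirect citation when checking an answer is to look for an in-text citation marker inside the evidence snippet itself. The sketch below is my own rough heuristic, not a Scite feature: the function name `looks_indirect` is hypothetical, and the pattern only catches bracketed numeric markers such as [13], so author-year citations would slip through.

```python
import re

# Matches bracketed numeric markers such as [13], [ 13 ] or [2, 5].
CITATION_MARKER = re.compile(r"\[\s*\d+(?:\s*[,-]\s*\d+)*\s*\]")

def looks_indirect(evidence):
    """Return True if the evidence snippet itself cites another work."""
    return bool(CITATION_MARKER.search(evidence))

# Evidence taken from the abstract: the paper is making its own claim.
abstract_snippet = (
    "We conclude that whilst Google Scholar can find much grey literature and "
    "specific, known studies, it should not be used alone for systematic review searches."
)
# Evidence taken from a citation statement: the paper is reporting someone else's finding.
citing_snippet = (
    "Previous research has shown that articles identified within systematic reviews "
    "are identifiable using Google Scholar [ 13 ]."
)
print(looks_indirect(abstract_snippet))  # False
print(looks_indirect(citing_snippet))    # True
```

A flagged snippet is only a prompt to follow the reference back to its original source before citing it.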

Not happy with the citations chosen? Scite assistant now provides some settings to control this a little better. You can set Scite assistant to always reference papers, never reference them (this mimics ChatGPT) or something in between. You can also control the number of references made, the year of publication of the papers cited, the topic of the referenced papers and the relevance ranking used to rank the references.

Reasons to be cautious

As mentioned many times, tools like Bing Chat, Elicit.org, and Scite assistant will never completely make up a reference. However, it is not uncommon for them to cite a real reference in a way that does not reflect what the reference says, which is just as bad.

What does the evidence say so far?

Scite assistant is a very new tool, even newer than Bing Chat, Perplexity.ai or Elicit.org, and I am not aware of any formal tests of how accurate its citations are.

However, formal tests of Bing Chat and Perplexity have started to appear in the literature and the preliminary results suggest caution.

For example, in Evaluating Verifiability in Generative Search Engines, the authors conducted a study across 150 randomly selected queries of varying formats and difficulties. They found that for Bing Chat, on average, 89.5% of the citations supported their associated sentences (citation precision).

On the other hand, only 58.7% of the generated sentences were fully supported by their citations (citation recall). The same figures were 72.7% and 68.7% respectively for Perplexity.ai.
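To see how the two measures can diverge, here is a small worked sketch. The definitions are simplified from the description above, the function names are my own, and the judgments for the four-sentence answer are invented for illustration; they are not data from the study.

```python
def citation_precision(sentences):
    """Share of all citations that support the sentence they are attached to."""
    judgments = [supports for s in sentences for supports in s["citation_supports"]]
    return sum(judgments) / len(judgments) if judgments else 0.0

def citation_recall(sentences):
    """Share of sentences that are fully supported by their citations."""
    if not sentences:
        return 0.0
    return sum(s["fully_supported"] for s in sentences) / len(sentences)

# Invented human judgments for a four-sentence answer (not real study data).
answer = [
    {"citation_supports": [True, True], "fully_supported": True},
    {"citation_supports": [True], "fully_supported": False},   # source covers only part of the claim
    {"citation_supports": [False], "fully_supported": False},  # citation does not back the claim
    {"citation_supports": [], "fully_supported": False},       # no citation at all
]
print(f"precision = {citation_precision(answer):.2f}")  # 3 of 4 citations support -> 0.75
print(f"recall    = {citation_recall(answer):.2f}")     # 1 of 4 sentences fully supported -> 0.25
```

In this toy answer most citations are fine when checked one by one, yet only a quarter of the sentences are fully backed, which is the same pattern as the Bing Chat figures: high precision alongside much lower recall.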

While these results are not horrendously bad, particularly given that the test suite included a lot of extremely difficult questions where the search struggled to find a suitable page to cite (which explains the low citation recall), they should give one pause when using tools like Scite assistant, even if Bing Chat and Perplexity are general web search tools rather than academic-specific ones.

Conclusion

As always, these tools are developing fast. Scite has already announced they are adding features to give some control over the types of papers used to generate the answer. Do give it a try.