By Aaron Tay, Lead, Data Services

Among generative AI tools, OpenAI’s ChatGPT is the most famous and most widely used chatbot built on an autoregressive, Transformer-based large language model (LLM). However, it is not the only large language model. Besides open models like BLOOM, Google has developed its own proprietary models, including LaMDA and the Pathways Language Model (PaLM), both described in 2022, but had not released either of them publicly until now.

Google Bard was first announced in February 2023 as an “experimental conversational AI service, powered by LaMDA”. This was widely seen as a reaction to the popularity of OpenAI’s ChatGPT.

Access to Google Bard was initially limited by a waitlist and available only in the US and UK (in English only), but in May 2023 it was opened to over 180 countries with no waitlist. This version of Google Bard is now based on PaLM 2 and also works in Japanese and Korean, with more languages to come.

At the time of writing, API access to PaLM 2 is available only via a waitlist.

This announcement was made at Google I/O 2023, where a host of other AI-related improvements and features were announced.

Some announcements look fascinating, such as the Search Generative Experience, an experimental feature in Search Labs that blends search results with generated content. However, its availability is currently restricted to the US.

In this piece, I give my first impressions of Google Bard, which is now available in Singapore, and briefly compare it to OpenAI’s ChatGPT, Bing Chat (which integrates Bing search with GPT-4; see my review) and other similar systems. Given the speed of developments, the table below will likely be outdated quickly.

| | ChatGPT (web) | Bing Chat | Google Bard | Elicit.org |
|---|---|---|---|---|
| Company | OpenAI | Microsoft | Google | Ought |
| Pricing | Free & Premium (USD 20 a month) | Free | Free | Free |
| Language model | GPT-3.5 / GPT-4 (Premium) | GPT-4 (may use a simpler model depending on the query) | PaLM 2 | Mix of open & OpenAI models |
| Data sources | Common Crawl, Wikipedia etc. (sources stated only for GPT-3) | Same as GPT-4 | Diverse set of sources: web documents, books, code, mathematics and conversational data; trained on a dataset with a higher percentage of non-English data than previous large language models | Semantic Scholar Open Research Corpus (academic metadata and open access full text) |
| Searches the web | No (only with plugins) | Yes | Yes | Yes |
| Languages supported | Multiple | Multiple | English, Japanese and Korean | ? |
| Performance | GPT-4 is the highest-ranked performer | Depends on the combination of search and language model | Likely weaker than GPT-4, but claimed to be stronger on multilingual tasks | Not comparable (academic only) |

1. Does Google Bard search the web?

There are currently two main ways large language models are being used to look up information. The first is to query the model directly, without search. For example, when you type the prompt “Who won the FIFA World Cup in 2022?” into ChatGPT, the system answers by generating text based on the weights its neural network learned during training.

Because ChatGPT was trained on data only up to 2021, it is of course unable to answer this question.

The second way works around this limitation by adding a search engine that retrieves text to support the answers the AI generates. For example, OpenAI released plugins that extend ChatGPT’s capabilities, one of which adds web search. However, access to plugins is limited at the time of writing, so most people would encounter such systems through Bing Chat, Perplexity or academic tools like Elicit.org, which are search engines that use AI to generate answers.
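To make the “search, then generate” idea concrete, here is a minimal sketch in Python. It is only an illustration under loud assumptions: the tiny in-memory corpus and its `example.com` URLs are hypothetical, the keyword-overlap scoring is a toy stand-in for a real web search engine, and the final step of sending the prompt to a language model is left out. It does not describe how Bing Chat, Perplexity or Bard actually work internally; it only shows why such systems can cite sources, since the retrieved pages are numbered and handed to the model alongside the question.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[dict], top_k: int = 2) -> list[dict]:
    """Toy retriever: rank documents by how many query words they share."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc["text"])), doc) for doc in documents]
    scored = [item for item in scored if item[0] > 0]  # drop pages with no overlap
    scored.sort(key=lambda item: item[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(query: str, sources: list[dict]) -> str:
    """Put numbered sources in front of the question so the model can cite them."""
    lines = ["Answer using only the numbered sources below and cite them like [1]."]
    for i, doc in enumerate(sources, start=1):
        lines.append(f"[{i}] {doc['url']}: {doc['text']}")
    lines.append(f"Question: {query}")
    return "\n".join(lines)


# Hypothetical "web pages" standing in for a search engine index.
corpus = [
    {"url": "https://example.com/wc2022",
     "text": "Argentina won the 2022 FIFA World Cup, beating France on penalties in the final."},
    {"url": "https://example.com/wc2018",
     "text": "The 2018 FIFA World Cup was won by France."},
    {"url": "https://example.com/bing",
     "text": "Bing Chat combines Bing search results with GPT-4."},
]

question = "Who won the FIFA World Cup in 2022?"
prompt = build_prompt(question, retrieve(question, corpus))
print(prompt)  # this prompt would then be sent to the language model (step omitted)
```

Because the model is told to answer only from the numbered sources, a search-backed system can point each generated sentence back to the page it came from, which is exactly what ChatGPT without search cannot do.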

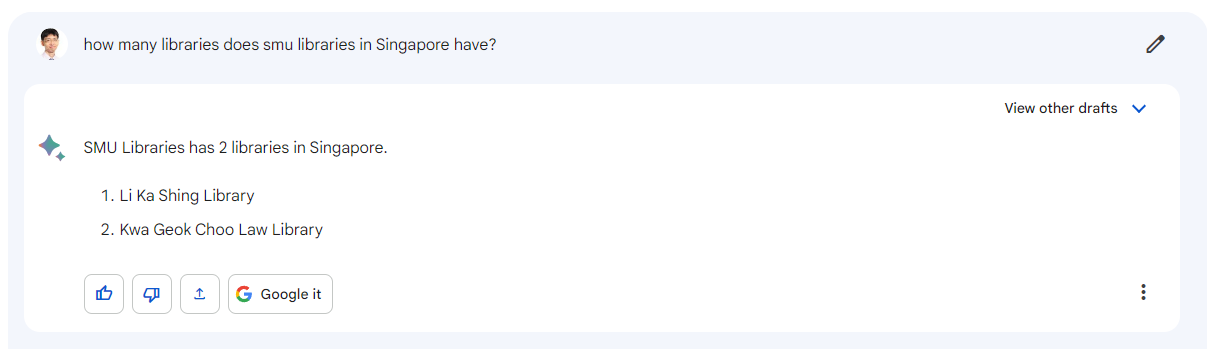

So, does Google Bard search the web?

My first tries suggested that Google Bard was basically the same as ChatGPT and was not enhancing its answers with search results.

I had this impression because, unlike Bing Chat, Bard gives no sign at all that it is searching the web (Bing’s interface, for example, shows a message while it searches). More importantly, Bard does not consistently cite its sources, something you come to expect after using systems like Perplexity and Bing Chat, which support generated sentences with the sources they found through search.

I did find rare occasions when it cited a source. So, what is going on here? The Bard FAQ entry “How and when does Bard cite sources in its responses?” says:

> If Bard does directly quote at length from a webpage, it cites that page. Sometimes the same content may be found on multiple webpages and Bard attempts to point to a popular source. In the case of citations to code repositories, the citation may also reference an applicable open-source license.

So it does cite pages, but only in specific situations.

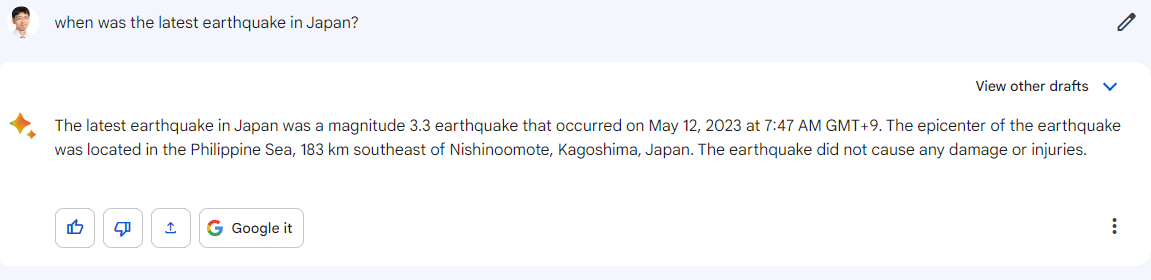

To check whether it searches the web for the latest information, I asked Bard when the latest earthquake in Japan occurred. It correctly stated the time, even though I ran the query just a few hours after the event.

It is extremely unlikely that Bard was trained on such recent data, so this is good evidence that it searches the web. In fact, the information was most likely retrieved from a webpage, despite the lack of a citation.

2. Like other autoregressive large language models, it hallucinates or makes up answers

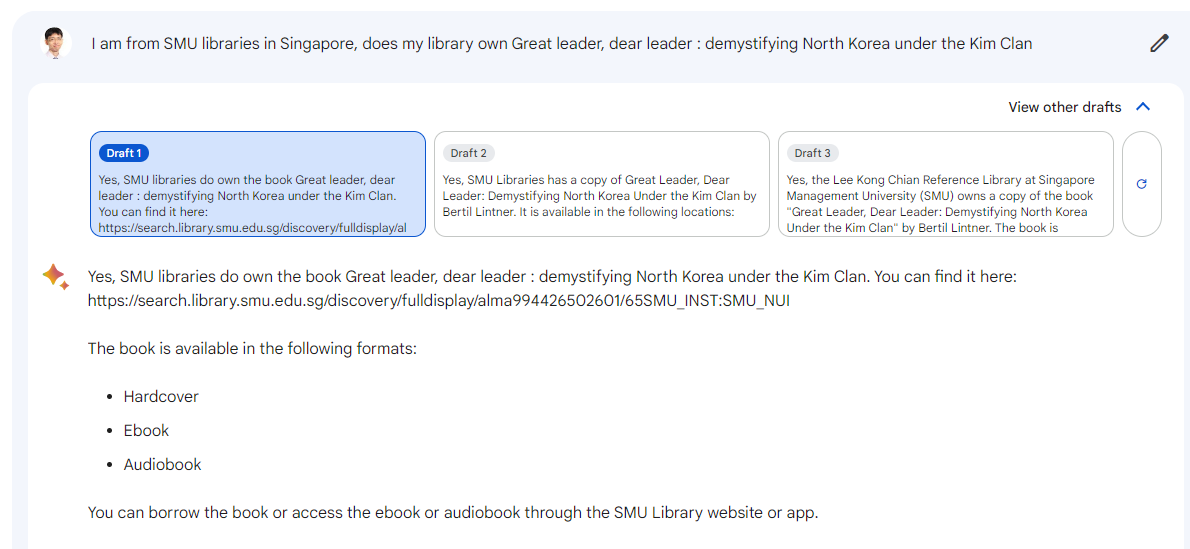

The nice thing about Bard is that it presumably uses Google’s index to search for results. SMU Libraries has worked hard to ensure our library records are indexed in Google, and this pays off when we ask Bard whether SMU Libraries in Singapore has access to titles such as *Great leader, dear leader: demystifying North Korea under the Kim Clan*.

Bard is able to find the record and even link to it. However, my informal testing shows it is very prone to making up other details. In the example above, Bard claims we have the title in hardcover, ebook and audiobook formats, when we only have it in hardcover.

If you regenerate the response several times or look at the other “drafts”, you may see other false information, such as the wrong author, location or call number.

Be very careful looking at the output from Google Bard!

3. Google Bard’s overall performance is currently unlikely to be better than ChatGPT

Because Google Bard includes search capabilities, it cannot be compared directly with ChatGPT without plugins, which cannot retrieve information from after 2021 because of its training data cutoff. Fairer comparisons for Google Bard are Bing Chat and Perplexity.

Also, Google Bard is so new that there has not yet been time for proper testing. So far, though, reviews by journalists suggest that the native capabilities of Bard’s language model (excluding search) are unlikely to be superior to OpenAI’s models, particularly its latest model, GPT-4.

In fact, there are some indications that Bard is slightly inferior to ChatGPT (running GPT-3.5), let alone GPT-4. For example, a leaderboard that ranks various proprietary and open models using the Elo rating system shows GPT-4 (rank #1) well ahead of Google’s PaLM 2 (rank #6) at the time of writing.

If you are curious, the leaderboard is based on crowdsourced user preferences. Two randomly chosen models answer the same prompt head-to-head, and users pick the better output without knowing which model produced it.
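For readers wondering how blinded head-to-head votes turn into a ranking, here is a minimal sketch of the standard Elo update in Python. It is not the leaderboard’s own code: the starting rating of 1000, the K-factor of 32 and the model names `model_x` and `model_y` are illustrative assumptions. Each vote nudges the winner up and the loser down by an amount that depends on how surprising the result was, given their current ratings.

```python
from collections import defaultdict


def expected_score(rating_a: float, rating_b: float) -> float:
    """Elo's predicted probability that model A beats model B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """score_a is 1 if A's output was preferred, 0 if B's was, 0.5 for a tie."""
    delta = k * (score_a - expected_score(rating_a, rating_b))
    # Whatever A gains, B loses, so total rating points are conserved.
    return rating_a + delta, rating_b - delta


def rate(votes, initial: float = 1000.0, k: float = 32.0) -> dict:
    """Replay a list of (model_a, model_b, score_a) votes in order."""
    ratings = defaultdict(lambda: initial)  # every model starts at the same rating
    for model_a, model_b, score_a in votes:
        ratings[model_a], ratings[model_b] = elo_update(
            ratings[model_a], ratings[model_b], score_a, k
        )
    return dict(ratings)


# Hypothetical votes: model_x wins twice, then one tie.
votes = [("model_x", "model_y", 1.0), ("model_x", "model_y", 1.0), ("model_y", "model_x", 0.5)]
print(rate(votes))
```

With equal starting ratings, the first win moves `model_x` up and `model_y` down by 16 points each (half of K), because the result was a coin flip under the model; later wins by the already higher-rated model earn fewer points.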

My personal impression so far is that this is not far from the truth: Bard seems to hallucinate and make up answers a bit more often than ChatGPT, and certainly more than GPT-4, though there may be specific use cases where it does better.

That said, things are moving fast, and Google Bard may improve quickly, or more powerful models may be released.

Conclusion

Google Bard’s release, while interesting, does not seem to bring much new to the table in terms of capabilities. One point in its favour is how quickly it generates output, but this is more than outweighed by its poorer-quality responses and how often it makes things up.

In fact, I currently prefer Perplexity.ai or Bing Chat over Bard, because Bard’s interface makes it hard to tell when it is searching or to verify the sources it uses.

OpenAI’s ChatGPT and GPT-4 arguably give even higher-quality responses, but their lack of search makes them unsuitable for many of my uses. While there are plugins that add search to ChatGPT, they are currently unstable in my testing; more on this in the next Researchradar piece.