By Aaron Tay, Lead, Data Services

In evidence synthesis and systematic reviews, the search needs to be as thorough, unbiased and complete as possible so that relevant papers are not missed. This is usually done via some of the following methods:

- Careful search and screening of results from appropriate bibliometric databases using a well-tuned Boolean search strategy that maximises recall as far as possible (taking into account time available)

- A search of selected organisation websites for grey literature

- Soliciting suggestions from experts

- Citation Chasing

Citation chasing (also known under a host of names such as pearl growing, citation or reference searching, citation mining and more) is a supplementary search technique which involves screening for relevant papers among either:

- the references of identified relevant articles/target papers (backward citation chasing), or

- the citations of identified relevant articles/target papers (forward citation chasing)

The Collaboration for Environmental Evidence (CEE) mentions the use of citation chasing but provides no official guidance on it. The Cochrane Handbook for Systematic Reviews of Interventions, by contrast, requires backward citation chasing of included studies and suggests forward citation chasing as an option for reviews of complex and public health interventions.

So, the question is: what tools exist to do citation chasing efficiently in bulk? If you have around 30-50 included studies/relevant papers in your review, how do you efficiently extract all the references of these 30-50 target papers, deduplicate them and produce a list of unique references in one go? Similarly, how do you do the same for the citations of these 30-50 target papers?

Some bibliometric databases and search engines have interfaces that let you do this for selected papers in their index. This librarian is aware of the following possibilities:

- Clarivate’s Web of Science – via marked list feature (forward citation chasing only)

- Elsevier’s Scopus – via lists (forward and backward citation chasing)

But what if you want to go beyond the index available from these databases?

In a previous ResearchRadar article, we introduced and explored innovative tools like ResearchRabbit, Connected Papers, Litmaps and Inciteful that help you with literature mapping. All these tools rely on citation relationships to find and recommend papers. However, they tend to be less transparent about how papers are found and recommended, and so may not be appropriate for evidence synthesis processes that focus on the reproducibility of the search.

This is where the open-source CitationChaser by Neal Haddaway comes in. CitationChaser is available as an open-source R package and works primarily with the Lens.org index via an API.

Most users will probably use the free and easy-to-use web-based version of CitationChaser, which runs as a Shiny app. While you can also run CitationChaser via the R package, you will need to negotiate with Lens.org for access to their API.

For more on the inner workings of CitationChaser and its future plans, refer to the paper, Citationchaser: A tool for transparent and efficient forward and backward citation chasing in systematic searching.

Why use CitationChaser?

One of the major reasons to use CitationChaser is that it uses Lens.org as its underlying index. Lens.org is one of the largest sources of academic content, drawing from:

- Microsoft Academic Graph (now defunct; read the ResearchRadar article on the implications of the closure of Microsoft Academic)

- Crossref

- PubMed

- PubMed Central

- CORE

While it would be nice to use Google Scholar as the underlying index, Google Scholar deliberately does not support bulk exports, as an anti-scraping measure. The best you can do is to use Harzing's Publish or Perish tool on a limited scale to extract citations for a set of papers (maximum of 1,000 results).

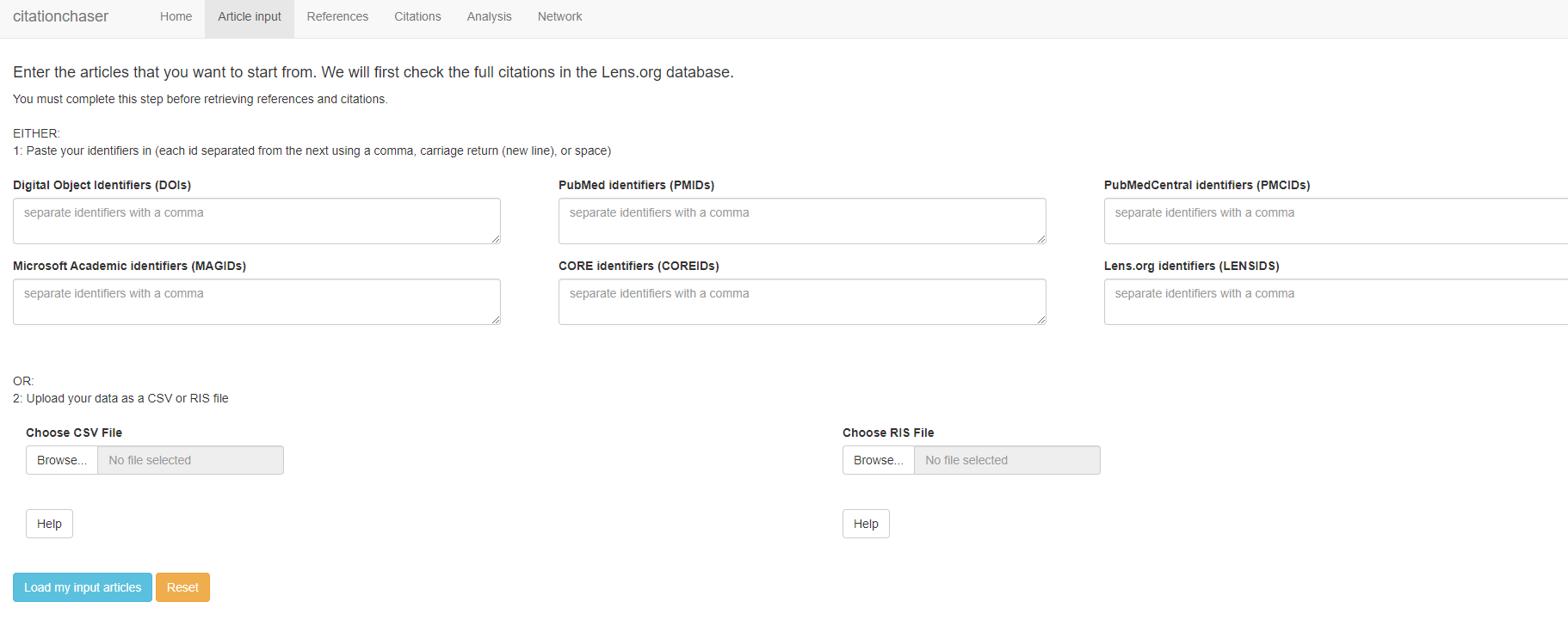

CitationChaser is simple to use. First, start on the “Article input” tab to load the papers of interest into the system.

You can specify the articles you want by entering DOIs (separated by commas) into the form. CitationChaser is flexible enough that you can also specify articles using other identifiers, such as PMIDs, PubMed Central IDs or even Lens.org IDs, in combination with DOIs.
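If your identifiers arrive as one mixed list, a small helper can sort them by type before you enter them. The sketch below is only an illustration: the function name `classify_identifiers` and the regular expressions are my own assumptions about what each identifier typically looks like, not CitationChaser's own validation rules.

```python
import re

# Illustrative patterns only (assumptions, not CitationChaser's own rules).
PATTERNS = [
    ("doi", re.compile(r"^10\.\d{4,9}/\S+$")),
    ("pmcid", re.compile(r"^PMC\d+$", re.IGNORECASE)),
    ("pmid", re.compile(r"^\d{1,8}$")),
    # Assumed Lens ID shape: five hyphen-separated groups, last character a digit or X.
    ("lens_id", re.compile(r"^\d{3}-\d{3}-\d{3}-\d{3}-\d{2}[\dXx]$")),
]


def classify_identifiers(raw: str) -> dict:
    """Split a comma-separated string and group the entries by identifier type."""
    groups = {name: [] for name, _ in PATTERNS}
    groups["unknown"] = []
    for token in raw.split(","):
        token = token.strip()
        if not token:
            continue  # ignore empty entries such as a trailing comma
        for name, pattern in PATTERNS:
            if pattern.match(token):
                groups[name].append(token)
                break
        else:
            # No pattern matched: keep the entry so it can be checked by hand.
            groups["unknown"].append(token)
    return groups


groups = classify_identifiers("10.1000/xyz123, PMC1234567, 12345678, not-an-id")
print(groups["doi"], groups["unknown"])
```

Anything that lands in the `unknown` group is worth checking by hand, since a mistyped identifier is one of the reasons a paper may fail to match.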

If you are doing a large-scale analysis, you will probably want to specify the articles you are interested in via a CSV or RIS file.

The fact that you can import articles into CitationChaser via RIS opens many possibilities. For example, you can export articles from your reference manager (Zotero, EndNote, Mendeley, etc.), assuming they have article identifiers, or from popular literature mapping tools like ResearchRabbit that allow exports in RIS format.
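Before uploading, it can be worth checking which records in an RIS export actually carry an identifier. The sketch below is a minimal illustration, assuming the DOI sits in the standard `DO` tag and that each record runs from a `TY` line to an `ER` line. The function name `dois_from_ris` is hypothetical and is not part of CitationChaser.

```python
import re

# An RIS line looks like "DO  - 10.1234/abcd":
# a two-character tag, two spaces, a hyphen, then the value.
RIS_LINE = re.compile(r"^([A-Z][A-Z0-9])  - ?(.*)$")


def dois_from_ris(ris_text: str) -> list:
    """Return one entry per RIS record: its DOI, or None if it has no DO field."""
    results = []
    current_doi = None
    in_record = False
    for line in ris_text.splitlines():
        match = RIS_LINE.match(line.strip("\ufeff\r"))
        if not match:
            continue  # blank lines and continuation lines are ignored
        tag, value = match.group(1), match.group(2).strip()
        if tag == "TY":  # start of a new record
            in_record, current_doi = True, None
        elif tag == "DO" and in_record:
            current_doi = value or None
        elif tag == "ER" and in_record:  # end of the record
            results.append(current_doi)
            in_record = False
    return results


sample = (
    "TY  - JOUR\nTI  - First paper\nDO  - 10.1000/a\nER  - \n"
    "TY  - JOUR\nTI  - Second paper\nER  - \n"
)
print(dois_from_ris(sample))
```

Records that come back as `None` have no DOI in the export and would need another identifier before they can be matched.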

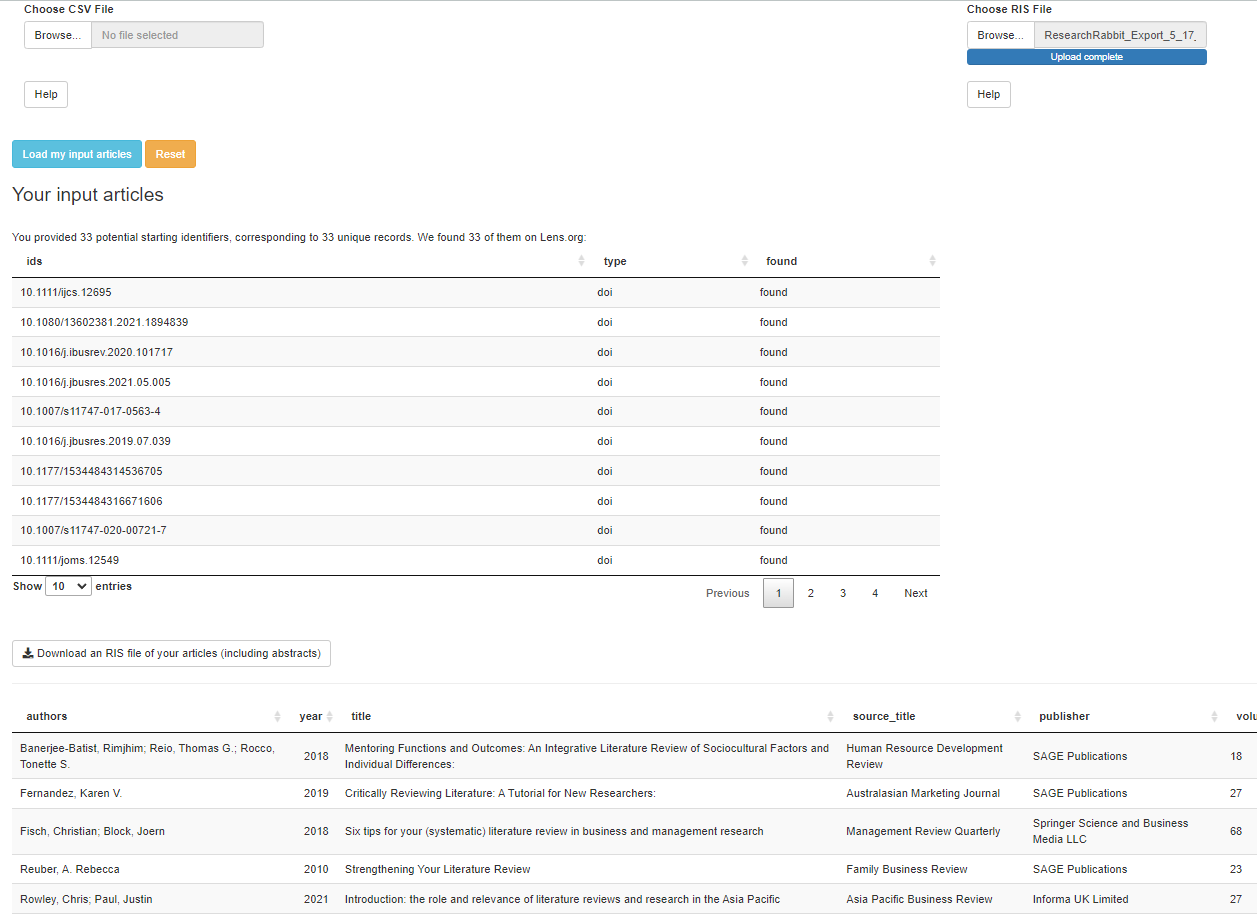

Whichever method you choose, once you click on “Load my input articles”, CitationChaser will start looking up the articles and finding matches in Lens.org. In the example below, I have chosen to upload an RIS file generated by ResearchRabbit that includes 33 papers.

Do note that while Lens.org is very comprehensive, you may occasionally find that it is unable to find an article you have specified for various reasons (e.g., error in the identifier, the article is not indexed in Lens.org because it’s too new etc.).

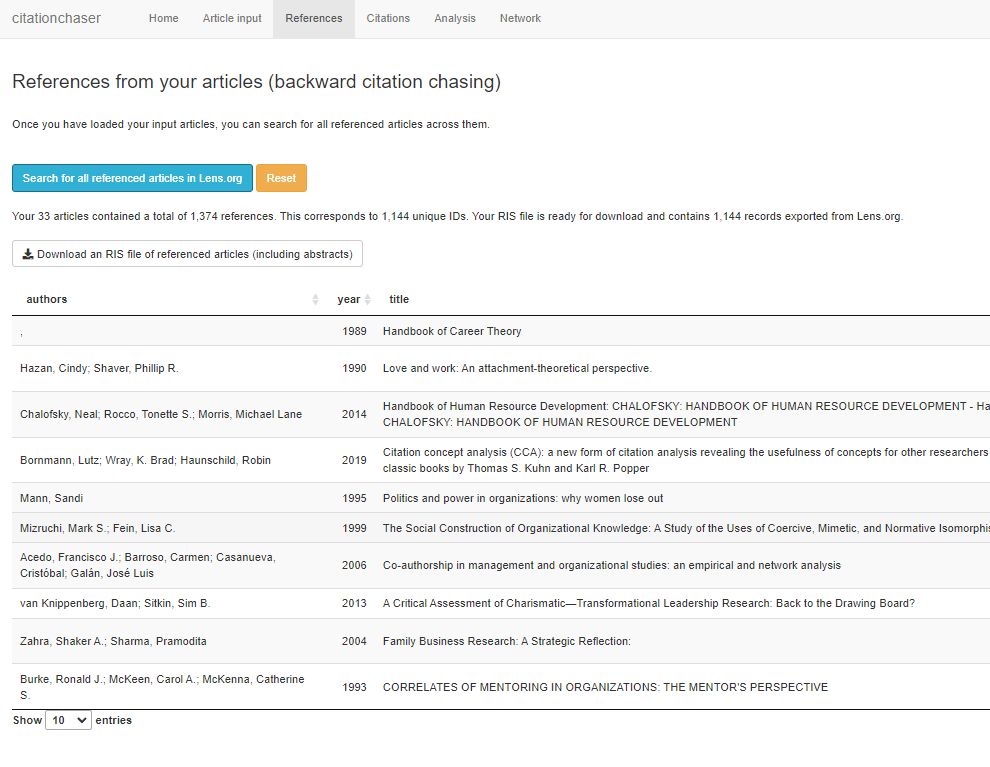

Once this step is done, click on the “References” tab and click on “Search for all referenced articles in Lens.org” to start searching for references of the input papers. This will take some time depending on the number of papers you have included.

In the example above, CitationChaser found 1,374 references in total from the 33 input papers. Some were duplicates, leaving 1,144 unique references. You can then click on “Download an RIS file of referenced articles (including abstracts)” to get the references in RIS format.
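The gap between the two figures (1,374 - 1,144 = 230) is the number of reference entries that duplicate one already found through another input paper. The sketch below shows the general idea of pooling and deduplicating reference lists. It assumes references are compared by DOI, and the function names and toy data are made up for illustration. How CitationChaser itself deduplicates is not described here.

```python
def normalise_doi(doi: str) -> str:
    """Lower-case a DOI and drop a leading resolver prefix so equal DOIs compare equal."""
    doi = doi.strip().lower()
    prefixes = (
        "https://doi.org/",
        "http://doi.org/",
        "https://dx.doi.org/",
        "http://dx.doi.org/",
        "doi:",
    )
    for prefix in prefixes:
        if doi.startswith(prefix):
            doi = doi[len(prefix):]
            break
    return doi


def pool_references(refs_by_paper: dict) -> tuple:
    """Return (total number of reference entries, sorted list of unique references)."""
    total = 0
    unique = set()
    for refs in refs_by_paper.values():
        total += len(refs)
        unique.update(normalise_doi(ref) for ref in refs)
    return total, sorted(unique)


# Toy data: two input papers that share one reference, written in two ways.
toy = {
    "paper_1": ["10.1/A", "10.1/b"],
    "paper_2": ["https://doi.org/10.1/a", "10.1/c"],
}
total, unique = pool_references(toy)
print(total, len(unique), total - len(unique))
```

Normalising before comparing matters because the same DOI can be written with different capitalisation or with a resolver prefix.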

Typically, you would then screen these references for potentially relevant papers, either manually or using a screening tool such as ASReview (which uses machine learning to assist with the screening), which we introduced in a past ResearchRadar piece.

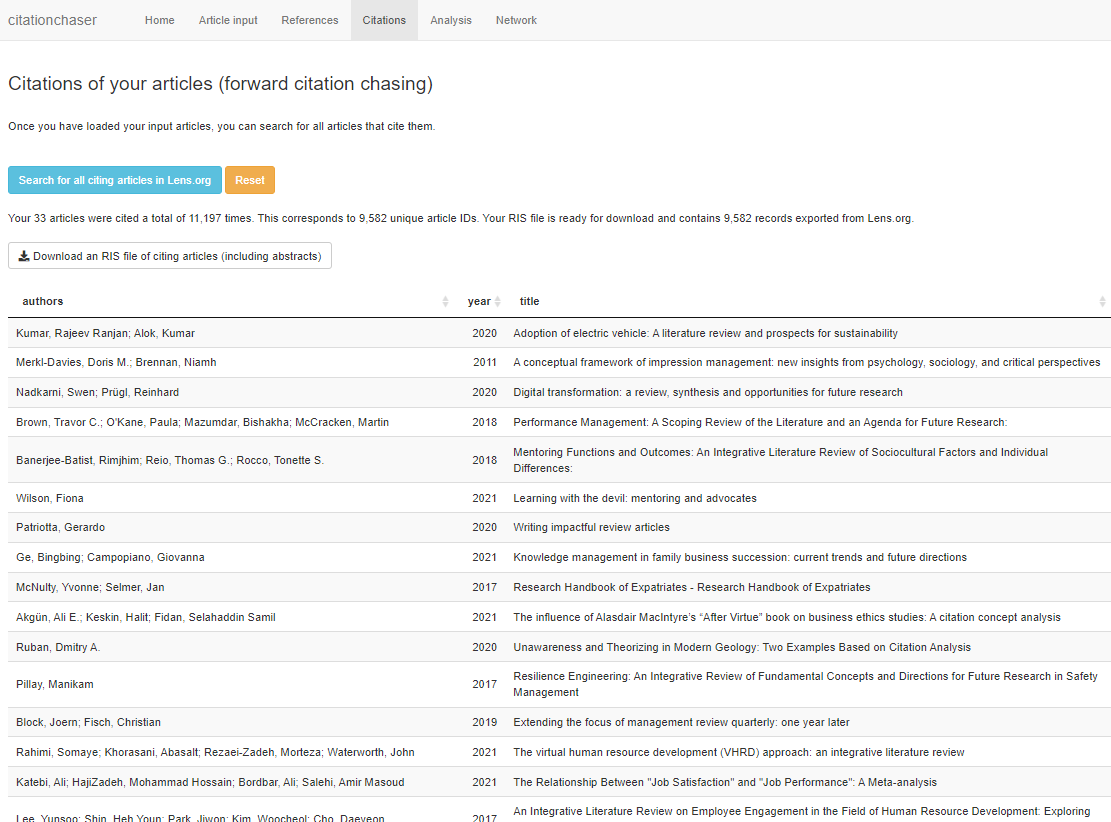

You then click on the “Citations” tab to repeat the process, but for citations of course.

In my example, this resulted in a whopping 9,582 unique papers! Of course, the search also took a bit longer.

You may think 9,582 is too many to export. Is there a way to narrow this down to fewer papers? How about only exporting papers that have been referenced or cited multiple times by the input papers (and hence have a higher chance of being relevant)?

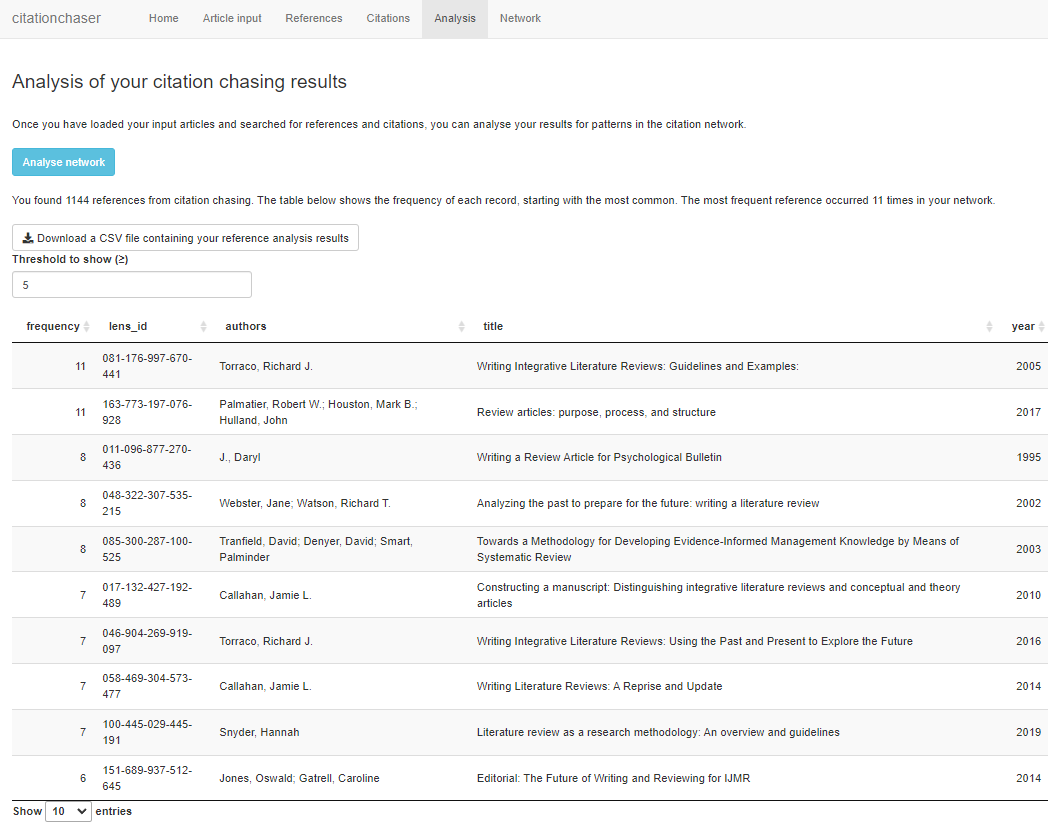

This is where the “Analysis” tab comes in handy. After clicking on “Analyse Network”, two sections will appear, one covering references and one covering citations.

As mentioned earlier, CitationChaser found 1,144 unique references, but by entering a threshold you can limit the list to only those papers referenced by at least N of the input papers. In the example, I have chosen a threshold of five, so I see only papers referenced by five or more of the original 33 papers I input.

You can do the same for citations in the section further down.
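The thresholding idea can be sketched in a few lines. This is an illustration of the logic only, using made-up data and a hypothetical function name, not CitationChaser's actual code: count how many input papers list each reference, then keep the ones at or above the threshold.

```python
from collections import Counter


def frequently_referenced(refs_by_paper: dict, threshold: int) -> dict:
    """Keep references that appear in the reference lists of at least `threshold` input papers."""
    counts = Counter()
    for refs in refs_by_paper.values():
        # set() ensures each input paper counts at most once per reference.
        counts.update(set(refs))
    return {ref: n for ref, n in counts.items() if n >= threshold}


# Toy data: three input papers and the works they reference.
toy = {
    "paper_1": ["ref_a", "ref_b"],
    "paper_2": ["ref_a", "ref_c"],
    "paper_3": ["ref_a", "ref_b"],
}
print(frequently_referenced(toy, threshold=2))
```

The same function works for forward citation chasing if the mapping instead lists, for each input paper, the papers that cite it.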

Librarian’s Take:

CitationChaser was first launched in 2021 and has since advanced by leaps and bounds. I’ve found it to be fairly stable: it works well even when I put in 50 papers, and it is able to find and export thousands of citations.

I do not find the Network tab useful at present. It is still a bit undeveloped, and you are better off using a proper bibliometric analysis tool such as VOSviewer, CiteSpace or Bibliometrix to visualize the relationships between the papers found.

In terms of the comprehensiveness of CitationChaser, there has been concern that because the underlying index, Lens.org, relies heavily on Microsoft Academic Graph (though it does use Crossref heavily as well), the closure of Microsoft Academic Graph in December 2021 will affect the coverage of Lens.org going forward. Fortunately, a replacement, OpenAlex (as covered in ResearchRadar), has launched, and it is likely that Lens.org will use it eventually.

The developers of CitationChaser are not resting on their laurels and have started experimenting with using OpenAlex directly as a complementary source.

My own take is that, besides the issues with Microsoft Academic Graph, Lens.org is an aggregator of multiple sources and so can lag behind them in updates. As a result, CitationChaser may not reflect the latest developments: it may fail to recognise a very new input paper, or it may miss citations to the input papers from the most recent publications.