By Aaron Tay, Lead, Data Services

What is Google’s Search Generative Experience (SGE)?

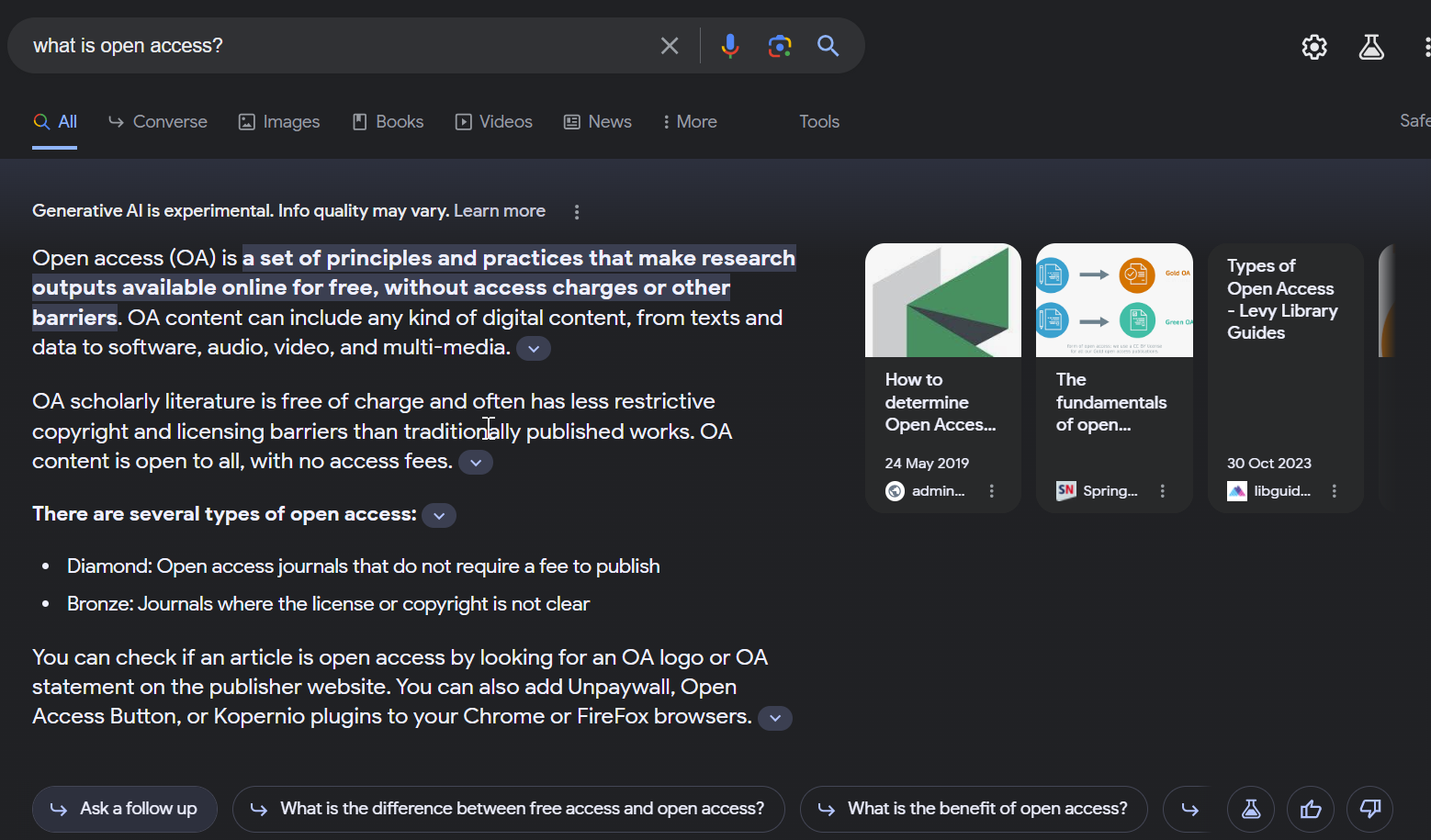

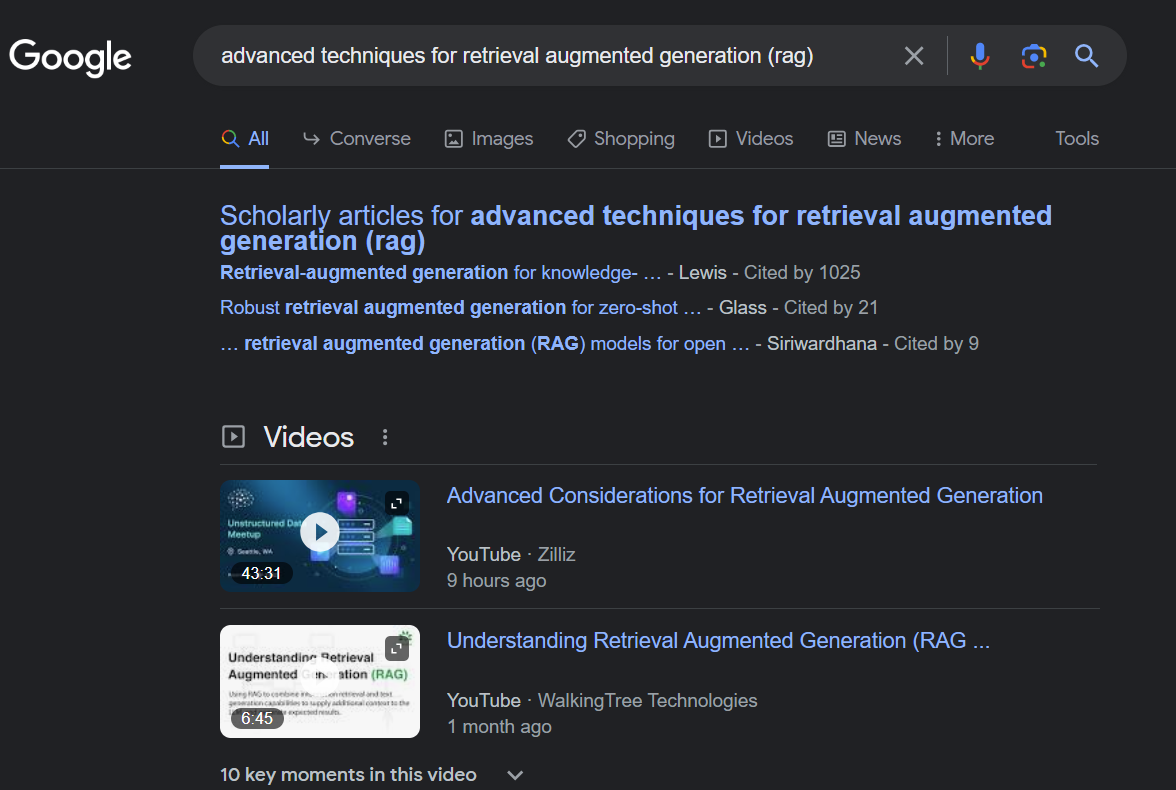

In past ResearchRadar pieces, we have discussed how search engines, both general (e.g., Bing Chat, Perplexity) and academic (e.g., Elicit, Scite Assistant, Scopus (upcoming)), are integrating search with generative AI (via Large Language Models) using techniques like RAG (Retrieval Augmented Generation).

But what about Google? In Feb 2023, it launched Bard, an experimental conversational AI service initially powered by LaMDA (later updated to PaLM 2), which was believed to allow Google to keep pace with OpenAI’s smash hit ChatGPT.

As our earlier review of Bard noted, unlike the free version of ChatGPT, Google Bard integrates search with the language model. However, it is important to note that unlike Bing, these generative AI features were not integrated into their flagship Google search.

This changed with the launch of the Google Search Generative Experience (SGE) in May 2023. Initially an opt-in feature available only in the US, it was gradually expanded to more countries, and on November 8, 2023 it became available in over 120 countries, including Singapore.

How do I gain access to these features in Singapore?

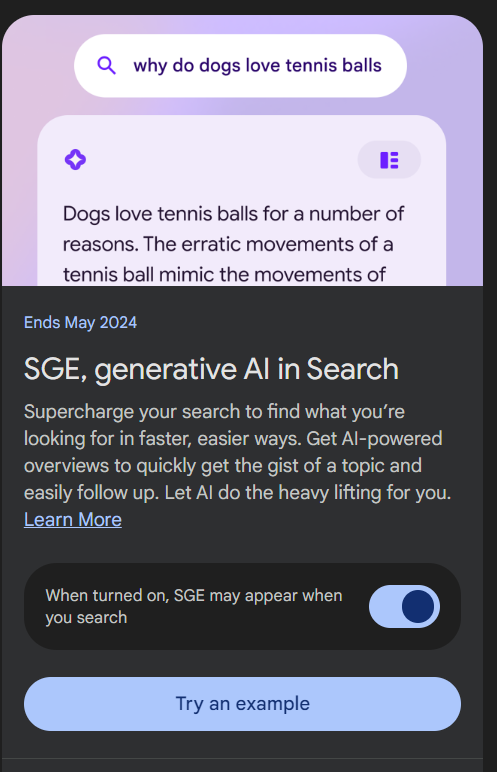

You will need to log in to your Google Account and turn on the SGE feature.

Then search with Google while signed in. It is important to note that the SGE feature works only in Chrome on desktop or in the Google app on Android/iOS. There is a reason for that, as we will see later.

Are the answers from SGE accurate?

Like any other search engine that uses generative AI, whether general (e.g., Bing Chat, Perplexity) or academic (e.g., Elicit, Scite Assistant, Scopus (upcoming)), the answer generated might be wrong.

However, because these systems integrate search with generative AI (via Large Language Models) using techniques like RAG (Retrieval Augmented Generation), each generated sentence typically comes with a link or citation so you can check the source to verify the answer.

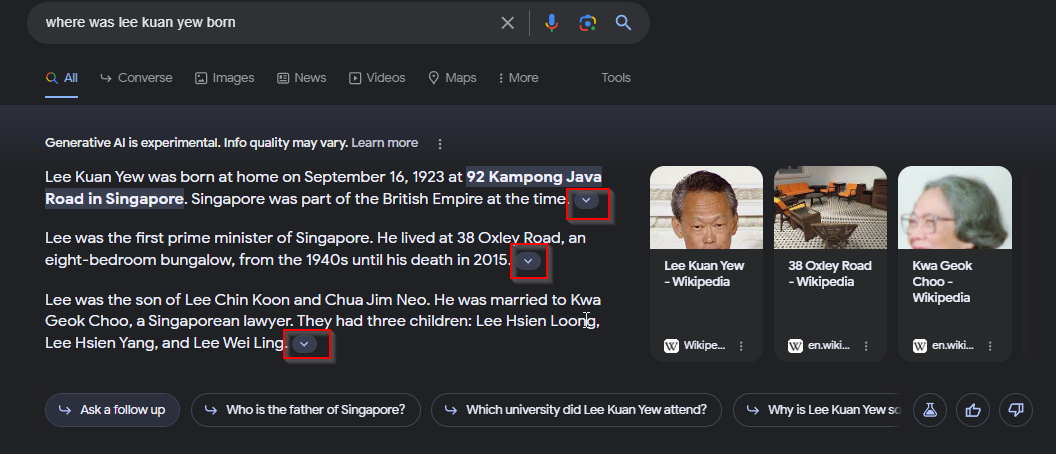

In the example below, the query was “Where was Lee Kuan Yew born?”

For each generated paragraph in the answer, you can click on the dropdown arrow to find a link/citation that supports the sentence.

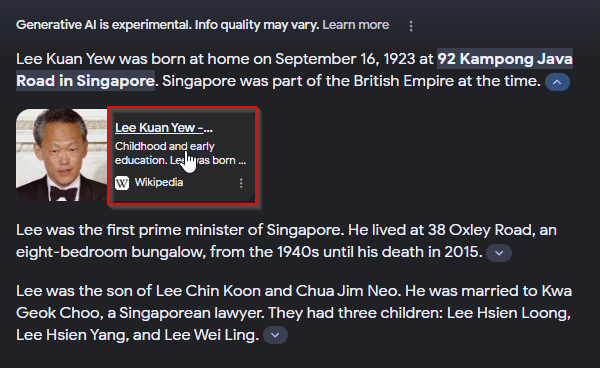

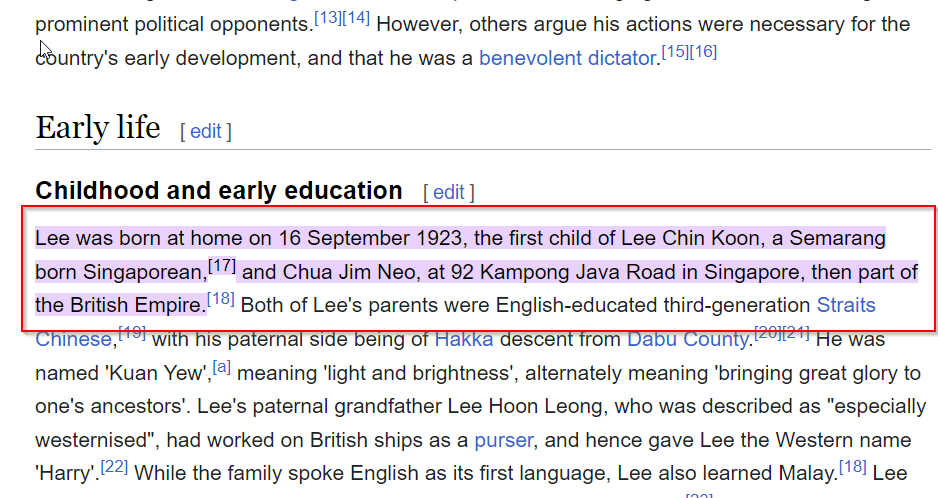

In our case, I will click on the first dropdown to reveal the link. This is because I want to verify whether the answer “Lee Kuan Yew was born at home on September 16, 1923 at 92 Kampong Java Road in Singapore.” is supported by the source.

The link itself brings you to a Wikipedia article. It is a special type of link that not only brings you to the webpage or source but also brings you directly to the part of the webpage that was used to answer the question.

This type of link works only in Chrome on desktop and in the Google mobile app, which is partly why SGE currently works only in these environments. I have found that this feature sometimes does not work; for example, if the link points to a PDF, you simply land on the PDF without the highlighting.
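The deep-linking behaviour described above resembles URL text fragments, a browser feature in which a `#:~:text=` directive appended to a URL asks the browser to scroll to and highlight matching text. Whether SGE builds its links in exactly this way is an assumption on my part; the sketch below only illustrates the general idea, and the function name and example URL are hypothetical.

```python
from typing import Optional
from urllib.parse import quote


def _encode(text: str) -> str:
    # Percent-encode everything, including ',' and '&'. quote() leaves '-'
    # untouched, but '-' has a special meaning in the directive syntax,
    # so it is encoded by hand.
    return quote(text, safe="").replace("-", "%2D")


def build_text_fragment_url(
    page_url: str, text_start: str, text_end: Optional[str] = None
) -> str:
    """Return page_url with a text-fragment directive appended.

    Illustrative helper only, not an API used by Google.
    """
    directive = _encode(text_start)
    if text_end:
        # "start,end" asks the browser to highlight the whole range.
        directive += "," + _encode(text_end)
    # For simplicity, drop any fragment already present on the URL.
    base = page_url.split("#", 1)[0]
    return f"{base}#:~:text={directive}"


print(build_text_fragment_url(
    "https://en.wikipedia.org/wiki/Lee_Kuan_Yew", "born at home"
))
```

Opening the printed URL in a browser that supports text fragments should jump to the first match of the phrase if it appears on the page; browsers without support simply load the page as usual.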

Assuming you believe the source is trustworthy, you can confirm that the generated answer is indeed supported by the source and the answer is likely to be correct.

However, you may sometimes doubt whether the source itself is correct (this is the internet, after all). Even when the source is reliable, you may notice that the highlighted section in the source does not support the generated answer! Another way of saying this is that the citation for the generated text is not valid. This is why you should always verify the answer.

Studies of similar tools like Bing Chat and Perplexity (e.g., Evaluating Verifiability in Generative Search Engines) show that, depending on the type and difficulty of the question given, the error rate can be high. In that particular study, when analysing a variety of questions that were challenging and open-ended, Bing Chat only had valid citations to support 58.7% of its generated sentences.
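To make a figure like the one above concrete, here is a minimal sketch of how such a share could be tallied once each generated sentence has been checked by hand against its cited source. The judgements are made up for illustration, and the study's own metric definitions are more detailed than this simple ratio.

```python
def supported_share(sentence_supported: list[bool]) -> float:
    """Fraction of generated sentences judged to be backed by their citation."""
    if not sentence_supported:
        raise ValueError("need at least one judged sentence")
    return sum(sentence_supported) / len(sentence_supported)


# Hypothetical manual judgements for a five-sentence answer (not real data):
# True means the cited passage supports the sentence.
judgements = [True, True, False, True, False]
print(f"{supported_share(judgements):.1%}")  # prints 60.0%
```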

There are other features, such as “See definitions within AI-generated responses”, a special shopping mode that will give “a snapshot of noteworthy factors to consider and products that fit the bill” based on Google’s Shopping Graph, and the ability to ask follow-up questions.

What are some differences between the SGE experience and Bing Chat or similar implementations?

For many search queries, Google with SGE turned on behaves much like similar implementations such as Perplexity and Bing Chat.

It will briefly flash a “generating...” notice and then very quickly generate a direct answer with links/citations.

However, this does not always happen. I have seen two other behaviors.

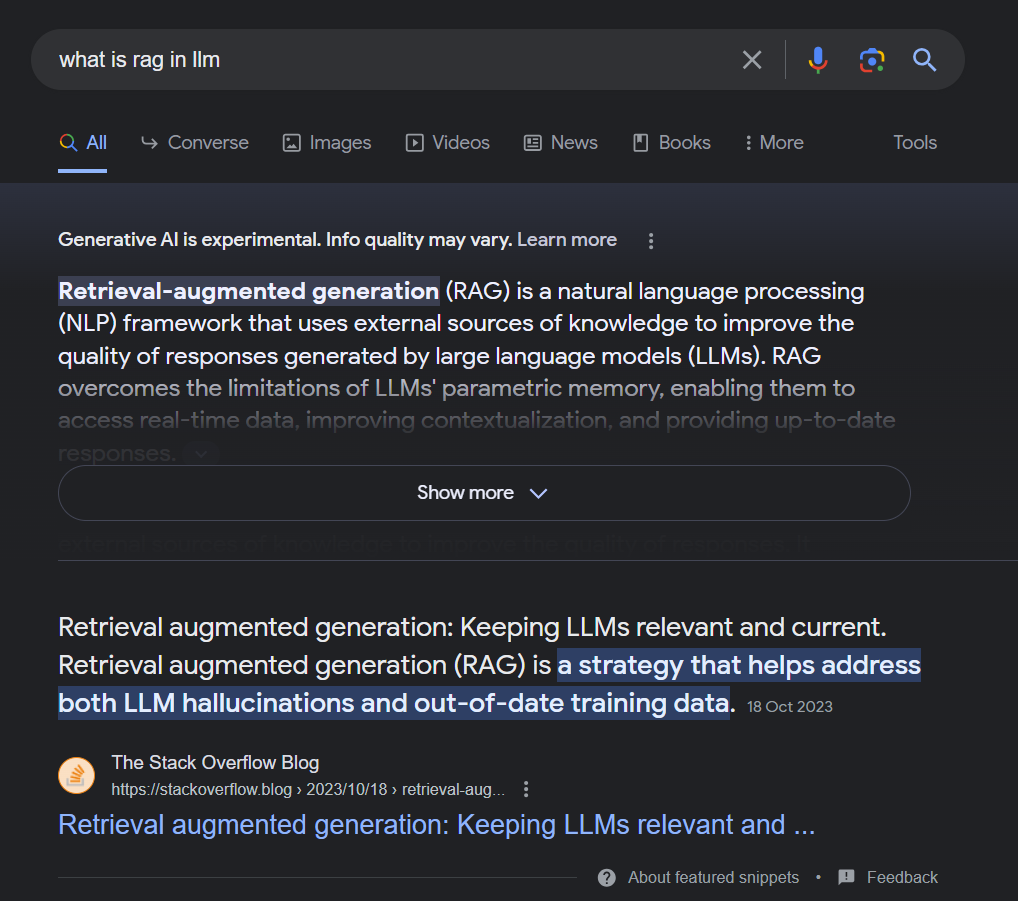

Sometimes it does not automatically generate an answer but displays a message “Get an AI-powered overview for this search” with a “Generate” button that you have to push before it generates an answer.

For some search queries, it does not even provide an option to generate the “AI-powered overview”.

This is unlike Bing Chat and others which will almost always generate some kind of answer.

Clearly Google is being very cautious here and choosing to not show generated answers for all questions.

We do not know what criteria it uses to decide whether to automatically generate an answer, offer to generate one, or not answer at all (though a little testing suggests that the longer the search query, the less likely it is to automatically generate an answer).

However, we are told:

“There are topics for which SGE is designed to not generate a response. For some of the topics, there might simply be a lack of quality or reliable information available on the open web. For these areas – sometimes called “data voids” or “information gaps” – where our systems have a lower confidence in our responses, SGE aims not to generate an AI-powered snapshot.”

And like all generative AI based systems, there is ring fencing so the model will not generate answers for “explicit or dangerous topics” such as topics on self-harm. There are also explicit disclaimers when generating answers relating to medical and health topics.

Google also notes that SGE quality standards are higher “when it comes to generating responses about certain queries where information quality is critically important. On Search, we refer to these as “Your Money or Your Life” (YMYL) topics – such as finance, health, or civic information – areas where people want an even greater degree of confidence in the results.”

All in all, while its rivals like Bing Chat and Perplexity.ai do include AI safety features, Google’s SGE appears to be even more conservative. Given the immense reach and influence of Google Search, I applaud this cautious and careful approach.

What’s the difference between Google SGE and Bard?

On the surface, Google SGE and Bard seem very similar. But there are a few major differences.

It seems trite to say this, but Google SGE is designed to be a search engine first and foremost and is less like a chatbot (though it can sometimes work that way since you can ask follow-up questions).

As noted earlier, Google holds SGE to higher quality standards for “Your Money or Your Life” (YMYL) topics such as finance, health, or civic information, where people want an even greater degree of confidence in the results.

Moreover, Google states:

“we were intentional in constraining conversationality. What this means, for example, is that people might not find conversational mode in SGE to be a free-flowing creative brainstorm partner – and instead find it to be more factual with pointers to relevant resources.”

Indeed, comparing Google SGE with Bing Chat or Bard, I found that Google SGE is definitely not meant to be a chatbot.

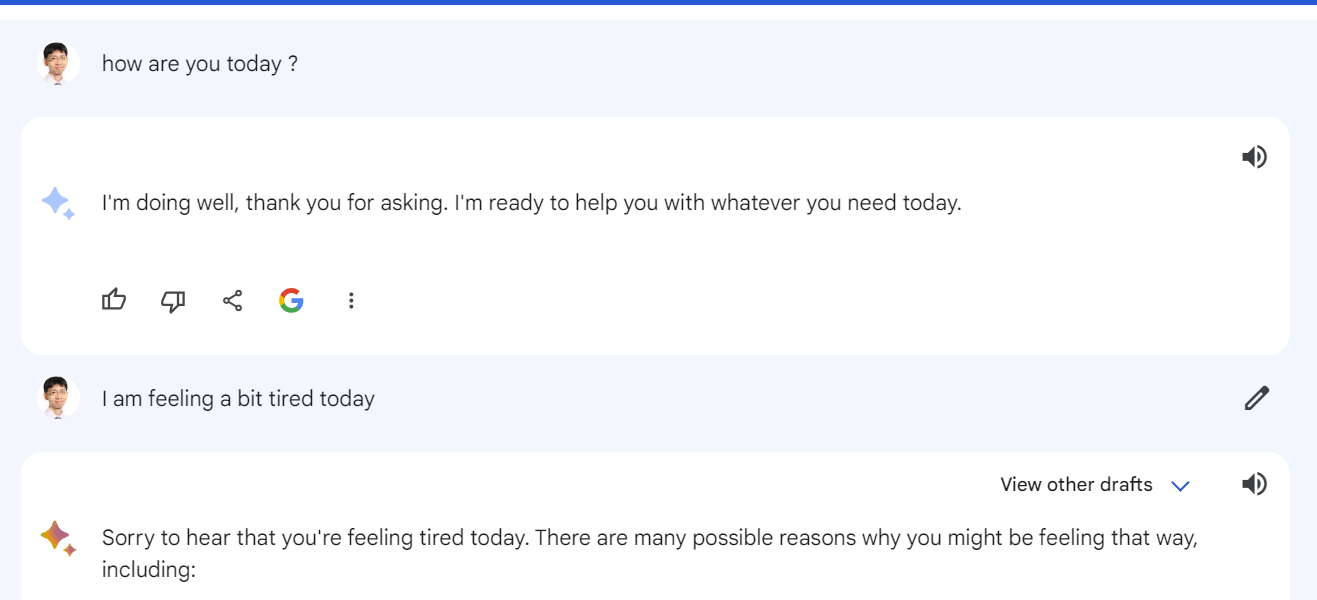

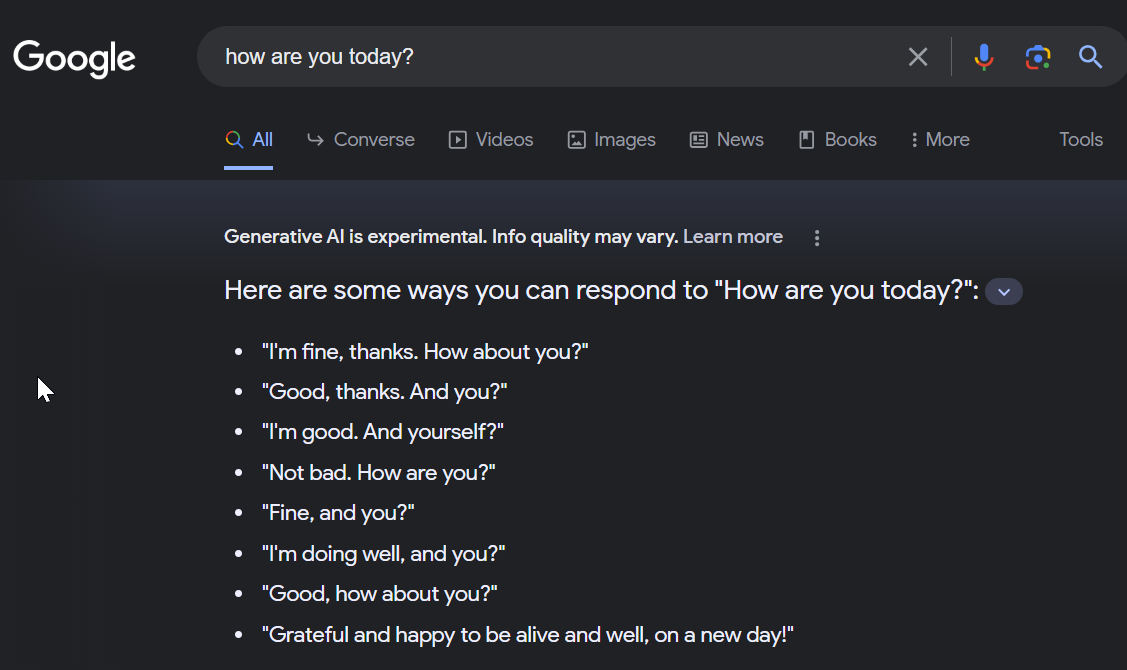

For example, unlike Bard or Bing Chat, you cannot have a casual conversation about life and the weather. See below a conversation with Bard.

Trying to chat with Google SGE gets an odd literal answer.

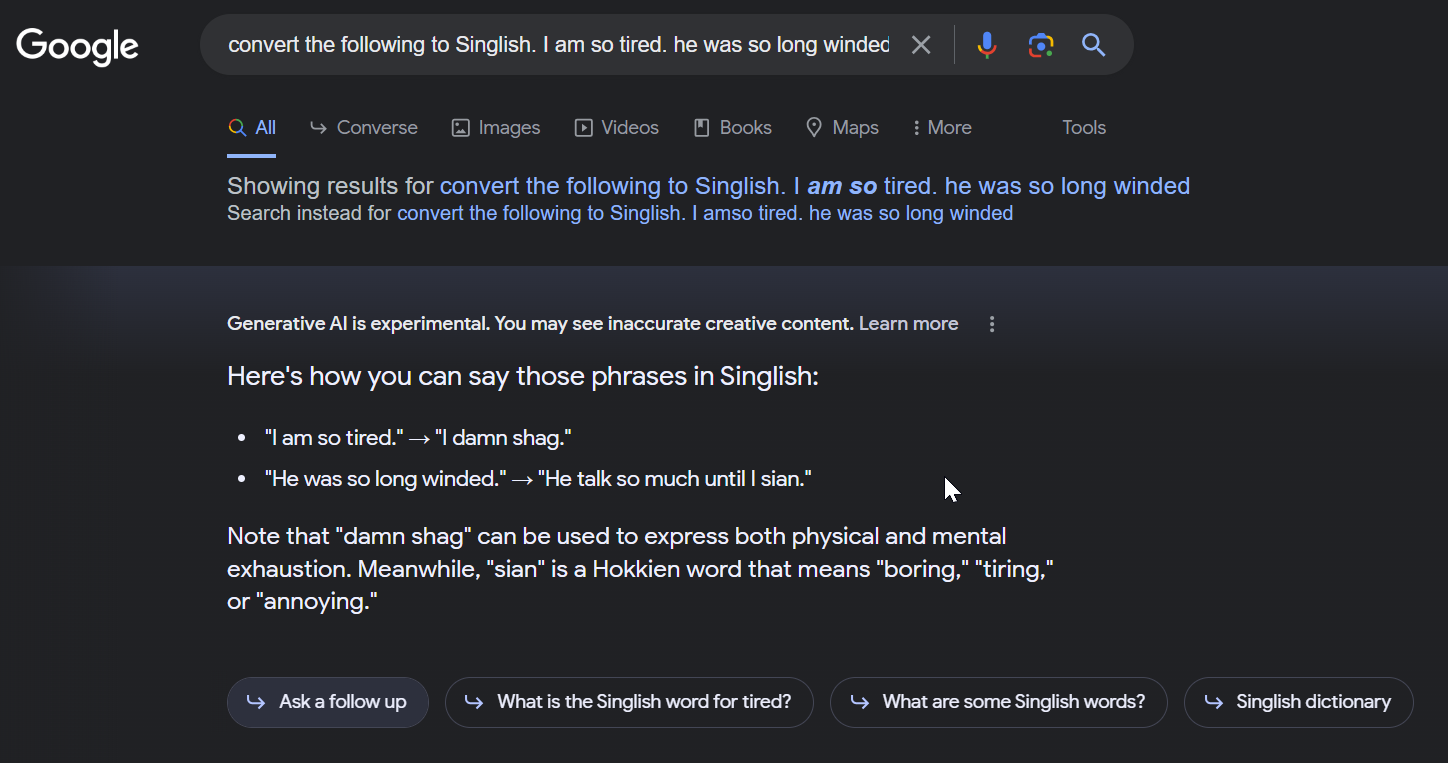

Interestingly, while it typically cannot converse casually with you, this does not mean Google SGE cannot follow instructions and do various tasks. For example, when I ask it to convert a certain phrase into Singlish and convert a string of characters all into upper case, it does so readily.

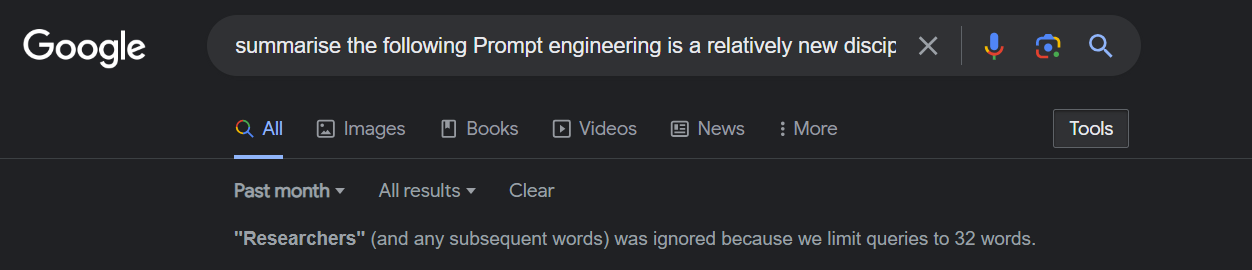

Though typing in natural language seems fine, I would not recommend trying prompt engineering (e.g., few-shot prompting with multiple examples), as queries are limited to 32 words.

This is a huge limitation compared to using ChatGPT, Bard or even Bing Chat, as it means you cannot handle common use cases like asking it to summarise a chunk of text.

This further reinforces the idea that you are using a search engine where you type in a limited number of keywords or words rather than give long instructions.
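A quick way to check whether a prompt would fit within the 32-word limit mentioned above is to count its whitespace-separated words. How SGE itself counts words is not something I can confirm, so whitespace splitting is an assumption, and the names below are purely illustrative.

```python
SGE_WORD_LIMIT = 32  # limit observed in this article; the counting rule is assumed


def count_words(query: str) -> int:
    """Count whitespace-separated words in a query."""
    return len(query.split())


def fits_sge_limit(query: str, limit: int = SGE_WORD_LIMIT) -> bool:
    """Return True if the query is within the assumed word limit."""
    return count_words(query) <= limit


short_query = "where was Lee Kuan Yew born"
print(count_words(short_query), fits_sge_limit(short_query))  # prints 6 True
```

A few-shot prompt with even two or three worked examples will usually exceed the limit, which is why such prompts are a poor fit here.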

Didn’t Google already give direct answers to some search queries in the past?

Yes, even without SGE turned on, regular Google searches will occasionally give you direct answers to questions.

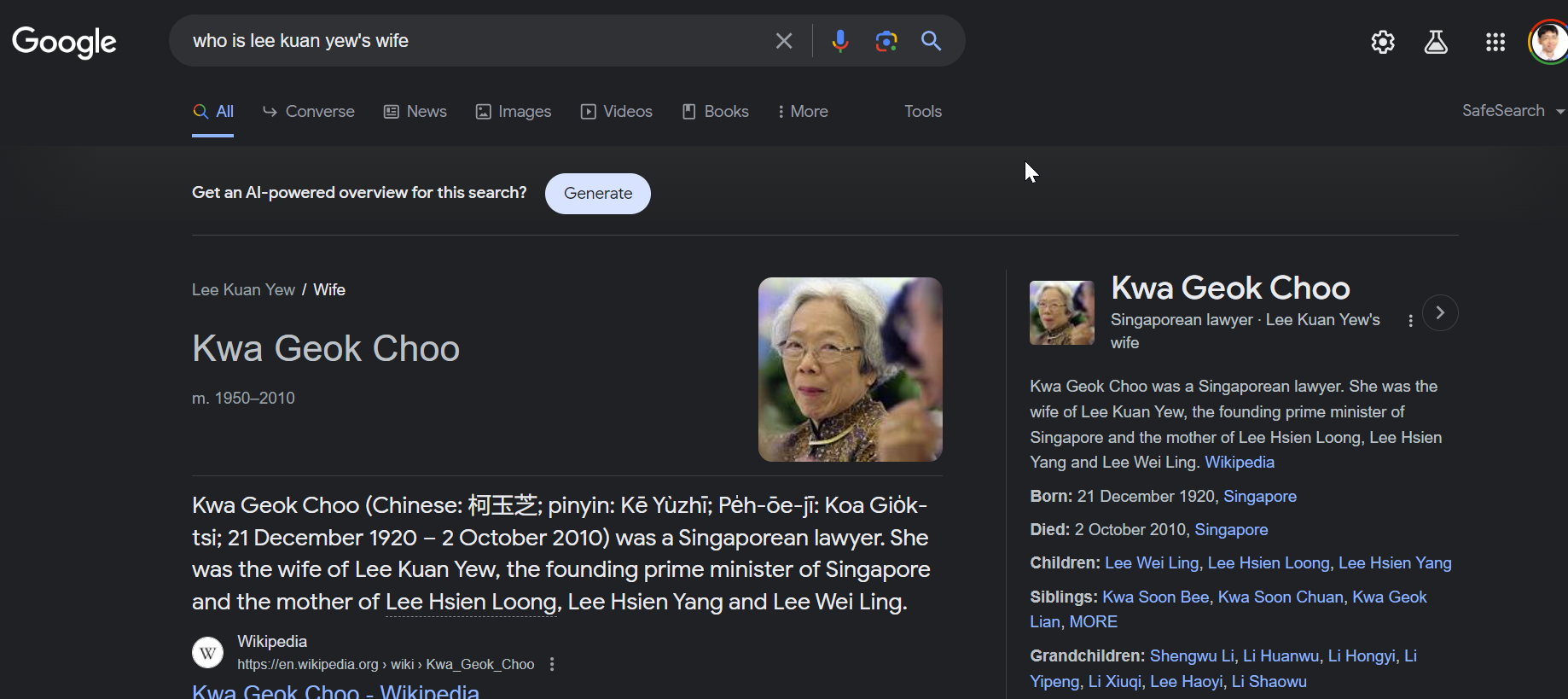

For example, even without SGE, if you ask certain factual questions, Google is able to produce a direct answer using information from the Google Knowledge Graph.

Notice that in the example above, I also have the option to generate an “AI-powered overview” because I have the SGE option switched on. If I click “Generate”, I will in fact get two direct answers: one from SGE and one from the Knowledge Graph.

The other type of direct answer you may see comes from a feature called “featured snippet”.

Both are independent of the SGE feature and it is possible you can see both SGE AND Google Knowledge Graph/Featured Snippet results for the same query.

More information about Google SGE